Every piece of data you share with an AI system—from your voice commands to uploaded photos—begins a journey that raises critical questions: How long does it stay? Can it truly be deleted? What happens when AI learns from information it shouldn’t keep?

The data lifecycle in artificial intelligence systems operates differently from that of traditional databases. When you delete a photo from your phone, the file is removed. When an AI system trained on millions of images needs to “forget” specific pictures, the problem is far harder: training has already shaped the model’s parameters, so deleting the source files does not remove their influence on the model.

This complexity matters now more than ever. The European Union’s General Data Protection Regulation grants a right to erasure (the “right to be forgotten”), California’s Consumer Privacy Act gives residents deletion rights, and companies face growing pressure to prove their AI systems can actually comply. Yet most AI models weren’t designed with forgetting in mind; they were built to remember, learn, and improve through accumulated knowledge.

Consider a healthcare AI trained on patient records. When a patient withdraws consent, the hospital can remove that patient’s database entries, but that alone isn’t enough. The AI’s predictions and patterns already incorporate that information, potentially affecting diagnoses for future patients. The technical challenge of “unlearning” specific data points while maintaining model accuracy represents one of AI’s most pressing ethical and practical problems.

Understanding the AI data lifecycle—from collection through retention, deletion, and the emerging field of machine unlearning—isn’t just academic. Whether you’re an individual concerned about digital privacy, a professional implementing AI systems, or simply curious about how these technologies work, knowing what happens to data inside AI systems empowers better decisions about the technology shaping our future.

What Makes AI Data Different From Regular Data

When you save a photo on your phone or store a document in the cloud, that data exists as a distinct, separate file. Delete it, and it’s gone (mostly). But AI systems interact with data in a fundamentally different way that makes deletion far more complicated.

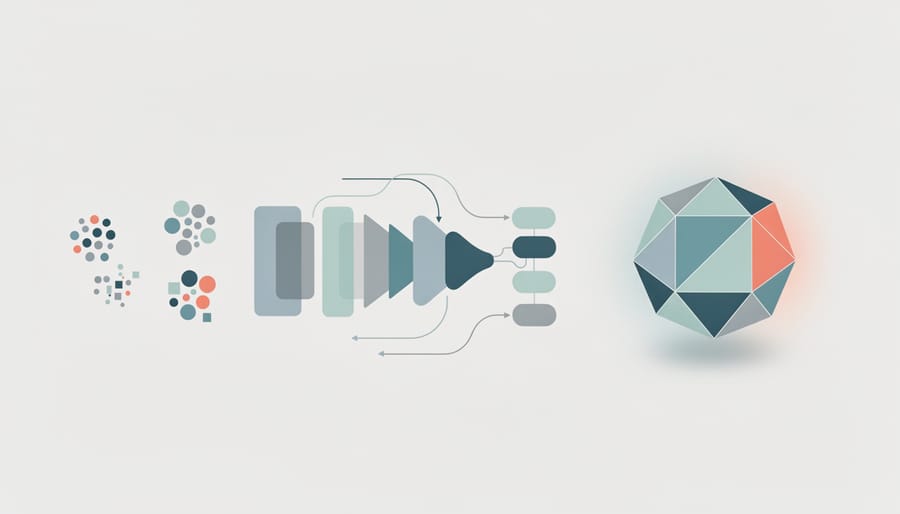

Think of it like baking a cake. Once you’ve mixed the eggs, flour, sugar, and butter together and baked them, you can’t simply remove the eggs afterward. The ingredients have transformed into something entirely new. This is similar to how AI models learn from data. When an AI system trains on thousands or millions of data points, it doesn’t store each piece individually like files in a folder. Instead, it extracts patterns, relationships, and statistical properties, weaving them into the mathematical fabric of the model itself.

This process, called machine learning, creates what we call “learned representations.” Imagine teaching someone to recognize cats by showing them thousands of cat photos. Eventually, they develop an internal understanding of what makes a cat a cat: pointy ears, whiskers, certain body shapes. Even if you destroy all the original photos, that knowledge remains embedded in their mind. AI models work similarly, encoding information from training data into millions or, for the largest models, billions of numerical parameters.

Here’s where it gets tricky: a single data point might influence hundreds or thousands of these parameters, while each parameter might be influenced by countless data points. This interconnected web means you can’t simply point to one part of the model and say “this is where Jane’s data lives.” It’s distributed throughout the entire system, mixed with insights from everyone else’s data.
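To make the “one data point touches many parameters” idea concrete, here is a minimal sketch using a plain linear model with five weights (an assumption for illustration; real networks are far larger and nonlinear). A single gradient step on one hypothetical record, labeled `jane_features` here, moves every weight whose input feature is nonzero.

```python
import numpy as np

def gradient_step(weights, x, y, lr=0.1):
    """One squared-error gradient step for a linear model on a single example (x, y)."""
    prediction = weights @ x
    error = prediction - y
    # d/dw of 0.5 * (w.x - y)^2 is error * x, so every weight with a nonzero feature moves
    return weights - lr * error * x

rng = np.random.default_rng(0)
weights = np.zeros(5)
jane_features = rng.normal(size=5)   # hypothetical "Jane" record with 5 features
jane_label = 1.0

updated = gradient_step(weights, jane_features, jane_label)
changed = np.count_nonzero(updated != weights)
print(f"{changed} of {weights.size} parameters changed after one example")
# prints: 5 of 5 parameters changed after one example
```

Training repeats this step over every example, so each final weight ends up as an accumulation of small nudges from many different people’s data.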

This transformation is what makes AI data different from regular data storage. You’re not dealing with files sitting in neat folders waiting to be deleted. You’re dealing with information that’s been fundamentally transformed and integrated into a complex mathematical structure. Understanding this distinction is crucial for anyone concerned about data privacy, compliance requirements, or simply wondering what really happens to their information once it enters an AI system.

The Five Stages of AI Data Life

Collection and Ingestion: Where Your Data Enters the System

Think of data collection as the front door of an AI system. Every time you interact with a chatbot, upload a photo, or use a voice assistant, your data enters through various entry points: APIs, web forms, sensors, or direct uploads. During this initial stage, the system performs several crucial tasks that affect data lifecycle management.

When data first arrives, it typically undergoes preprocessing: cleaning errors, standardizing formats, and adding metadata like timestamps and source identifiers. For example, when you upload a photo to a social media platform, the system might extract information about location, time, and even the objects within the image. This metadata is often stored separately from the original file, so it can outlive the file unless it is tracked and deleted as well.

Here’s what matters for deletion: during ingestion, your data often gets copied to multiple locations for backup and processing. A single email you send might be stored in production databases, backup servers, and logging systems simultaneously. The system may also create derived data, like extracting your writing style patterns or preferences, which becomes separate from your original input.
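Because one input can fan out into several stores, deletion has to start from an inventory of every copy. The sketch below assumes a hypothetical in-memory registry (`DataInventory`, with illustrative store names like `production_db` and `feature_store`) that each ingestion step writes to; a real system would back this with a database or data catalog.

```python
from collections import defaultdict

class DataInventory:
    """Hypothetical registry of every location a user's data was copied to."""

    def __init__(self):
        self._locations = defaultdict(set)  # user_id -> {(store, kind), ...}

    def record(self, user_id, store, kind="raw"):
        # Called by each ingestion step that writes a copy or a derived artifact
        self._locations[user_id].add((store, kind))

    def locations_for(self, user_id):
        return sorted(self._locations.get(user_id, set()))

    def erase(self, user_id, delete_fn):
        """Call delete_fn on every known copy, then drop the user's inventory entries."""
        targets = self.locations_for(user_id)
        for store, kind in targets:
            delete_fn(store, user_id, kind)
        self._locations.pop(user_id, None)
        return targets

inventory = DataInventory()
inventory.record("u42", "production_db")
inventory.record("u42", "backup_server")
inventory.record("u42", "request_logs")
inventory.record("u42", "feature_store", kind="derived")  # e.g. extracted writing-style features

deleted = inventory.erase(
    "u42", lambda store, uid, kind: print(f"delete {kind} data for {uid} from {store}")
)
```

The key design choice is that the registry is filled in at ingestion time; reconstructing where copies went after the fact is much harder.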

This multiplication effect is why deletion becomes challenging later. Understanding where data enters and how it initially spreads throughout the system is essential for anyone concerned about data privacy and the right to be forgotten.

Training and Learning: When Data Becomes Knowledge

Imagine teaching a child to recognize cats. You show them hundreds of pictures, and gradually, their brain forms an internal understanding: whiskers, pointy ears, fur patterns. They’re no longer memorizing individual photos; they’ve extracted the essence of “catness.” Machine learning models work similarly, and, as with the child, there is no straightforward way to take a single photo back out of what has been learned.

When you feed training data into an AI model, something remarkable happens. The raw information (images, text, or numbers) gets compressed into numerical values called weights, the model’s parameters. Think of it like pressing flowers into a book. The original flower changes form entirely, flattened into the pages themselves. Through thousands of iterations, the model adjusts these numerical values, encoding relationships and patterns it discovers in your data.
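A minimal sketch of those iterations, assuming a simple linear model fitted by full-batch gradient descent on synthetic data (not how any particular production model is trained). After training, 1,000 examples are summarized by just three numbers, and the examples themselves are not stored in the model.

```python
import numpy as np

rng = np.random.default_rng(42)
X = rng.normal(size=(1000, 3))                  # 1,000 synthetic training examples
true_w = np.array([2.0, -1.0, 0.5])
y = X @ true_w + rng.normal(scale=0.1, size=1000)

def train(X, y, lr=0.1, epochs=200):
    """Full-batch gradient descent on half the mean squared error of a linear model."""
    w = np.zeros(X.shape[1])
    for _ in range(epochs):
        grad = X.T @ (X @ w - y) / len(y)       # average gradient over all examples
        w -= lr * grad                          # every example nudges every weight
    return w

w = train(X, y)
print("learned weights:", np.round(w, 2))
print("model size:", w.size, "numbers vs training data size:", X.size + y.size)
```

The learned weights land close to the pattern hidden in the data, which is exactly why the individual rows can no longer be read back out of them.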

This is where data lineage tracking becomes essential: recording which data points went into which model version, and how they were processed along the way. Here’s the challenge: once training completes, you can’t simply point to where “John’s photo” lives inside the model. That photo has been mathematically blended with millions of others, transformed into countless tiny numerical adjustments spread across the entire neural network.
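In practice, lineage tracking often means logging, for each model version, exactly which records were in its training set. A minimal sketch, assuming a hypothetical `LineageLog` class (illustrative names, not a specific tool’s API). It cannot say where inside the weights a record lives, but it answers the question a deletion request needs first: which models were trained on it?

```python
import hashlib
from dataclasses import dataclass, field

def fingerprint(record_id: str) -> str:
    # Store a hash instead of the raw ID; note that this is pseudonymization, not anonymization
    return hashlib.sha256(record_id.encode()).hexdigest()

@dataclass
class LineageLog:
    """Hypothetical log mapping model versions to the records they were trained on."""
    versions: dict = field(default_factory=dict)

    def register_training_run(self, model_version, record_ids):
        self.versions[model_version] = {fingerprint(r) for r in record_ids}

    def models_trained_on(self, record_id):
        fp = fingerprint(record_id)
        return sorted(v for v, fps in self.versions.items() if fp in fps)

log = LineageLog()
log.register_training_run("model-v1", ["john-photo-001", "ana-photo-002"])
log.register_training_run("model-v2", ["ana-photo-002", "li-photo-003"])
print(log.models_trained_on("john-photo-001"))   # ['model-v1']
```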

It’s like trying to remove eggs from a baked cake. The eggs don’t exist as eggs anymore; they’ve fundamentally changed the cake’s structure. Similarly, your data doesn’t sit in the model as retrievable files. It is woven into the model’s parameters through mathematical transformations that are very hard to reverse.

Active Use: Your Data at Work

Once your AI model completes training, it enters its most productive phase: making real-world predictions and decisions. Think of it like a graduate who’s finished medical school and now practices independently. The model has internalized patterns from its training data and no longer needs constant access to the original information.

For example, a fraud detection system at your bank doesn’t store details of every past transaction it learned from. Instead, it recognizes suspicious patterns: unusual spending locations, atypical purchase amounts, or odd timing. When you swipe your card in a foreign country, the model quickly evaluates whether this matches your typical behavior, all without retrieving your complete transaction history.
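A deliberately simplified sketch of “recognizing patterns without retrieving history,” assuming a per-customer z-score rule rather than how any real bank works: the profile keeps only a running mean and variance of spending amounts (updated with Welford’s algorithm) and scores new transactions against them.

```python
import math

class SpendingProfile:
    """Keeps only running statistics (Welford's algorithm), never individual transactions."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, amount):
        self.count += 1
        delta = amount - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (amount - self.mean)

    @property
    def std(self):
        return math.sqrt(self._m2 / (self.count - 1)) if self.count > 1 else 0.0

    def is_suspicious(self, amount, threshold=3.0):
        # Flag amounts more than `threshold` standard deviations from typical spending
        if self.std == 0.0:
            return False
        return abs(amount - self.mean) / self.std > threshold

profile = SpendingProfile()
for amount in [42.0, 38.5, 55.0, 47.25, 40.0, 51.0]:   # past purchases, not kept afterwards
    profile.update(amount)

print(profile.is_suspicious(45.0))    # False: a typical amount
print(profile.is_suspicious(900.0))   # True: far outside the usual range
```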

Similarly, recommendation engines on streaming platforms suggest shows based on learned preferences, not by continuously analyzing every viewing choice you’ve ever made. The model has already extracted the essential patterns: “Users who enjoy mystery dramas often watch psychological thrillers.”

This separation between training data and active use raises an important question: if the model no longer directly accesses original data, how do we ensure outdated or incorrect information doesn’t persist in its decision-making? Understanding this challenge becomes crucial as we explore data retention and removal.

Retention: How Long AI Systems Keep Your Data

How long should AI systems hold onto your data? It’s a delicate balancing act. Companies must juggle multiple considerations: legal requirements like GDPR that mandate data minimization, business needs for improving models, and your right to privacy.

Think of it like your email inbox. You could keep every message forever, but that creates clutter and potential security risks. Similarly, AI systems need clear retention policies that specify exactly how long different types of data stay in their systems.

Under GDPR, companies can only keep your data as long as necessary for specific purposes. A chatbot improving its language skills might retain conversations for six months, while a medical AI analyzing diagnostic patterns could justify longer retention for safety validation.
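A retention policy can be written down as data: a maximum age per data category, checked by a scheduled job. The periods below are hypothetical and simply echo the examples above; they are not legal guidance.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods per data category (illustrative only, not legal advice)
RETENTION = {
    "chat_conversation": timedelta(days=183),      # roughly six months
    "diagnostic_record": timedelta(days=5 * 365),  # longer window for safety validation
}

def retention_sweep(records, now):
    """Split records into those past their retention period and those with no policy at all."""
    to_delete, needs_review = [], []
    for rec in records:
        limit = RETENTION.get(rec["category"])
        if limit is None:
            needs_review.append(rec["id"])     # no documented purpose or retention period
        elif now - rec["collected_at"] > limit:
            to_delete.append(rec["id"])
    return to_delete, needs_review

now = datetime(2025, 1, 1, tzinfo=timezone.utc)
records = [
    {"id": "c1", "category": "chat_conversation", "collected_at": now - timedelta(days=30)},
    {"id": "c2", "category": "chat_conversation", "collected_at": now - timedelta(days=400)},
    {"id": "d1", "category": "diagnostic_record", "collected_at": now - timedelta(days=400)},
    {"id": "x1", "category": "marketing_profile", "collected_at": now - timedelta(days=1)},
]
print(retention_sweep(records, now))   # (['c2'], ['x1'])
```

Surfacing uncategorized data for review, rather than silently keeping it, reflects the idea that data without a stated purpose has no justification for being retained.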

The challenge? Better AI models often benefit from more historical data, but this conflicts with data minimization principles. Progressive companies are finding middle ground by using techniques like data anonymization, where identifying details are removed after initial training, or setting automatic deletion schedules based on data sensitivity. Some retain only aggregated insights rather than raw personal information, keeping what improves their AI while respecting your privacy.
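One way to “retain only aggregated insights” is to roll raw events up into counts per coarse group, discard identifiers, and suppress groups small enough to single someone out. A minimal sketch; the field names and the threshold of 5 are assumptions, and small-group suppression is only one safeguard, not a guarantee of anonymity on its own.

```python
from collections import Counter

MIN_GROUP_SIZE = 5  # hypothetical threshold for suppressing tiny groups

def aggregate(events):
    """Reduce raw per-user events to counts per (region, genre); user IDs are discarded."""
    counts = Counter((e["region"], e["genre"]) for e in events)
    return {key: n for key, n in counts.items() if n >= MIN_GROUP_SIZE}

events = (
    [{"user_id": f"u{i}", "region": "EU", "genre": "mystery"} for i in range(12)]
    + [{"user_id": f"v{i}", "region": "EU", "genre": "thriller"} for i in range(7)]
    + [{"user_id": "w0", "region": "US", "genre": "documentary"}]  # a group of one
)

summary = aggregate(events)
events.clear()   # the raw, identifiable events can now be deleted on schedule
print(summary)   # {('EU', 'mystery'): 12, ('EU', 'thriller'): 7}
```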

Deletion and Unlearning: The Complex Final Stage

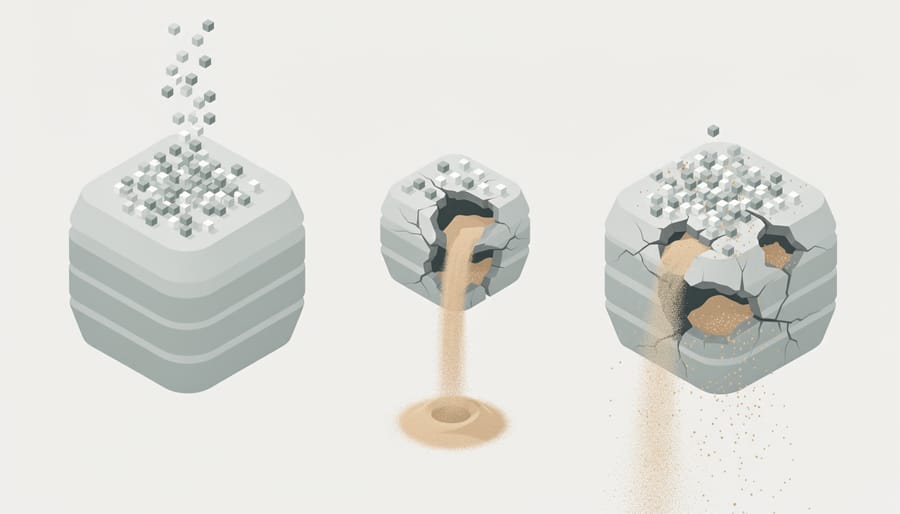

Deleting data from a database is straightforward, like erasing words from a whiteboard. But what happens when an AI model has already learned from that data? Here’s where things get tricky. Even after removing the original information, the model retains patterns and insights derived from it, embedded within millions of neural connections.

Consider a real-world scenario: a person requests their medical records be deleted from a healthcare AI system. While the hospital can erase the stored files, the diagnostic model may have already learned patterns from that patient’s case. Traditional deletion doesn’t make the model forget.

This challenge has sparked the development of machine unlearning, an emerging field focused on selectively removing learned information from trained models. Think of it as targeted amnesia for AI systems. Researchers are exploring techniques that can identify and eliminate specific influences without requiring complete model retraining, which would be computationally expensive and impractical.

Machine unlearning represents a critical evolution in responsible AI development, bridging the gap between data privacy rights and the persistent nature of machine learning.

The Unlearning Challenge: Teaching AI to Forget

Why Simple Deletion Doesn’t Work

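When you delete a photo from your phone, it’s gone. But when you delete the data an AI model was trained on, the story is completely different. Here’s why: AI models primarily learn patterns rather than storing individual data points, and large models can sometimes memorize specific training examples as well. Either way, deleting the source file leaves what the model learned in place.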

Imagine teaching a student about birds by showing them thousands of photos. Even if you later take away some of those photos, the student still remembers what makes a bird a bird—the wings, feathers, and beaks they observed. AI models work the same way. During training, they extract patterns and statistical relationships from data, encoding this knowledge into millions of mathematical parameters called weights.

Consider a real-world scenario: a facial recognition system trained on thousands of faces, including yours. If the company deletes your original photo from their database, your facial features have already influenced the model’s understanding of human faces. The model has learned subtle patterns about eye spacing, nose shapes, and facial proportions that include characteristics from your image. Simply removing the source photo doesn’t erase this learned knowledge.
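A toy illustration, assuming the “model” is just an average face embedding (a centroid) computed from hypothetical 4-dimensional vectors. Real facial recognition models are far more complex, but the point carries over: deleting the stored record leaves the trained parameter exactly as it was.

```python
import numpy as np

rng = np.random.default_rng(7)
# Hypothetical 4-dimensional "face embeddings" keyed by person
database = {f"person_{i}": rng.normal(size=4) for i in range(100)}
database["you"] = np.array([3.0, -2.0, 1.5, 0.5])

# "Training": the model parameter is the mean embedding across everyone
model_centroid = np.mean(list(database.values()), axis=0)
snapshot = model_centroid.copy()

del database["you"]   # the company deletes your original photo/embedding

print("record still stored:", "you" in database)
print("model unchanged:", np.array_equal(model_centroid, snapshot))
# Only recomputing from the remaining data removes your contribution
retrained = np.mean(list(database.values()), axis=0)
print("retrained model differs:", not np.array_equal(retrained, snapshot))
```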

This creates a significant challenge for privacy rights like the “right to be forgotten” under regulations such as GDPR. Traditional data deletion is insufficient on its own because the model itself becomes a persistent memory of the training data, which is why specialized techniques called machine unlearning are needed to remove data’s influence.

Current Unlearning Techniques That Actually Work

So how do we actually make AI systems forget? While perfect unlearning remains challenging, several practical approaches have emerged that balance effectiveness with computational feasibility.

The most straightforward method is complete model retraining. Think of it like rebuilding a house from scratch: you simply train a new AI model from the ground up, excluding the data you want removed. This guarantees the unwanted information is truly gone, but here’s the catch: retraining large language models or complex neural networks can cost anywhere from thousands to millions of dollars in computing power and take weeks to complete. For companies running massive AI systems, doing this every time someone requests data deletion isn’t realistic.
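Exact unlearning by retraining comes down to two steps: rebuild the training set without the removed records, then fit from scratch. A minimal sketch with a least-squares linear model (an assumption chosen so retraining is cheap; for large neural networks these same two steps can take days or weeks of compute). The record IDs are hypothetical.

```python
import numpy as np

def fit(X, y):
    """Train from scratch: ordinary least squares via numpy.linalg.lstsq."""
    w, *_ = np.linalg.lstsq(X, y, rcond=None)
    return w

def unlearn_by_retraining(X, y, ids, forget):
    """Drop every row whose ID is in `forget`, then retrain on what remains."""
    keep = np.array([i not in forget for i in ids])
    return fit(X[keep], y[keep]), int(keep.sum())

rng = np.random.default_rng(1)
ids = [f"user_{i}" for i in range(200)]
X = rng.normal(size=(200, 3))
y = X @ np.array([1.0, 2.0, -1.0]) + rng.normal(scale=0.1, size=200)
y[0] += 50.0   # give user_0 an unusual label so their influence is visible

original = fit(X, y)
retrained, n_kept = unlearn_by_retraining(X, y, ids, forget={"user_0"})
print("rows kept:", n_kept)
print("weights before:", np.round(original, 3))
print("weights after: ", np.round(retrained, 3))
```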

That’s where data influence reduction techniques come in. These methods work like surgical strikes rather than complete demolitions. One popular approach, called SISA (Sharded, Isolated, Sliced, and Aggregated) training, divides your dataset into separate shards, trains an isolated model on each shard, and aggregates their predictions. Within each shard, training proceeds slice by slice with saved checkpoints. When you need to remove specific data, you only retrain the model for the affected shard, starting from the last checkpoint before the slice that contained it, instead of the entire system. Imagine organizing a massive library into separate rooms: if you need to remove books from one room, you don’t reorganize the entire building.
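A simplified sketch of the SISA idea, under stated assumptions: each shard trains its own small least-squares model, the aggregate prediction is the average of the shard predictions, and slicing and checkpointing within shards are omitted for brevity. The class name `ShardedModel` and the record IDs are hypothetical. Deleting a record retrains only the shard that held it.

```python
import numpy as np

class ShardedModel:
    """Simplified SISA-style ensemble: one isolated model per disjoint data shard."""

    def __init__(self, X, y, ids, n_shards=4):
        self.shards = []
        for s in range(n_shards):
            rows = list(range(s, len(ids), n_shards))   # round-robin assignment to shards
            self.shards.append({"X": X[rows], "y": y[rows], "ids": [ids[r] for r in rows]})
        self.models = [self._fit(sh) for sh in self.shards]
        self.retrain_count = 0

    @staticmethod
    def _fit(shard):
        w, *_ = np.linalg.lstsq(shard["X"], shard["y"], rcond=None)
        return w

    def predict(self, X):
        # Aggregate: average the predictions of the constituent models
        return np.mean([X @ w for w in self.models], axis=0)

    def forget(self, record_id):
        """Remove one record and retrain only the shard that contained it."""
        for i, shard in enumerate(self.shards):
            if record_id in shard["ids"]:
                keep = [j for j, rid in enumerate(shard["ids"]) if rid != record_id]
                shard["X"], shard["y"] = shard["X"][keep], shard["y"][keep]
                shard["ids"] = [shard["ids"][j] for j in keep]
                self.models[i] = self._fit(shard)
                self.retrain_count += 1
                return i
        return None

rng = np.random.default_rng(3)
ids = [f"user_{i}" for i in range(400)]
X = rng.normal(size=(400, 3))
y = X @ np.array([0.5, -1.0, 2.0]) + rng.normal(scale=0.1, size=400)

model = ShardedModel(X, y, ids)
untouched = [w.copy() for w in model.models]
shard = model.forget("user_5")
print("retrained shard:", shard, "of", len(model.models))
```

Full SISA would additionally restart that shard’s training from the checkpoint saved just before the slice holding the deleted record, saving even more work.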

Approximate unlearning methods offer an even faster alternative. These techniques modify the existing model’s parameters to minimize the influence of specific data points, like carefully editing a painting rather than starting over. Methods such as gradient ascent (essentially reversing the learning process for specific data) or influence functions (which estimate how particular data shaped the model so that effect can be counteracted) can often run in minutes rather than days.
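A minimal sketch of approximate unlearning by gradient ascent, under loud assumptions: a logistic-regression model in NumPy, a fixed small number of ascent steps, synthetic data, and no influence-function correction. It takes steps that increase the loss on the records to forget, pushing the model away from what it learned from them. Unlike retraining, it offers no guarantee that their influence is fully gone, and too many steps can degrade accuracy on everything else.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss_grad(w, X, y):
    """Gradient of the mean logistic (cross-entropy) loss."""
    return X.T @ (sigmoid(X @ w) - y) / len(y)

def log_loss(w, X, y):
    p = np.clip(sigmoid(X @ w), 1e-12, 1 - 1e-12)
    return -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))

def train(X, y, lr=0.5, steps=500):
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        w -= lr * loss_grad(w, X, y)                 # gradient descent: learn
    return w

def unlearn_gradient_ascent(w, X_forget, y_forget, lr=0.5, steps=20):
    w = w.copy()
    for _ in range(steps):
        w += lr * loss_grad(w, X_forget, y_forget)   # gradient ascent: un-fit these points
    return w

rng = np.random.default_rng(0)
X = np.hstack([rng.normal(size=(300, 2)), np.ones((300, 1))])   # last column is a bias term
y = (X[:, 0] + X[:, 1] > 0).astype(float)
forget = np.arange(10)                                           # hypothetical records to remove

w = train(X, y)
w_unlearned = unlearn_gradient_ascent(w, X[forget], y[forget])
print("loss on forget set before:", round(log_loss(w, X[forget], y[forget]), 4))
print("loss on forget set after: ", round(log_loss(w_unlearned, X[forget], y[forget]), 4))
```

Because the logistic loss is convex, each ascent step can only raise the loss on the forgotten records; how much of their influence that actually removes is the open question approximate methods trade off against speed.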

The trade-off? Approximate methods don’t guarantee complete removal—they reduce influence rather than eliminate it entirely. For many practical applications, though, this compromise between speed and thoroughness strikes the right balance, especially when dealing with frequent deletion requests under privacy regulations.

What This Means for You: Privacy in the Age of AI

As AI systems become woven into the fabric of our daily lives—from the chatbots we consult for advice to the recommendation engines that shape our content feeds—understanding your data rights has never been more critical. The good news? You have more control than you might think.

For everyday users, the first step is recognizing that you’re not powerless in the AI data ecosystem. In many jurisdictions, including the European Union under GDPR and California under CCPA, you have the legal right to access the personal information AI companies hold about you. You can request to see what data was collected, how it’s being used, and in some cases, demand its deletion. But here’s the catch: deletion doesn’t always mean the AI forgets what it learned from your data. This is where asking the right questions becomes essential.

When engaging with AI services, consider asking companies: Do you support machine unlearning? How long is my data retained? Can you demonstrate that my information has been removed from both storage and model training? What happens to my data if I delete my account? These questions aren’t just theoretical—they’re practical tools for informed decision-making.

For businesses, the implications cut deeper. Implementing robust data governance practices isn’t optional anymore; it’s a competitive advantage. Customers increasingly choose services that respect their privacy, and regulatory penalties are substantial: under GDPR, fines for the most serious violations can reach €20 million or 4% of worldwide annual turnover, whichever is higher. Companies should conduct regular data audits, establish clear retention policies, and invest in unlearning capabilities before they’re forced to by regulation or reputation damage.

Think of your data-sharing decisions as investments. Before handing over information to an AI system, ask yourself: Is the value I receive worth the data I’m sharing? Can I achieve the same result with less sensitive information? Would I be comfortable if this data were used to train models indefinitely?

The age of AI doesn’t mean surrendering privacy—it means becoming a more informed participant. By understanding the data lifecycle and exercising your rights, you transform from a passive data source into an active stakeholder in the AI ecosystem.

The Future of AI Memory Management

The landscape of AI memory management is evolving rapidly, driven by both technological innovation and growing regulatory pressure. As we look ahead, several key trends are reshaping how AI systems will handle data throughout its lifecycle.

Regulations are tightening around this problem. The European Union’s AI Act imposes data governance requirements on high-risk AI systems, while GDPR’s right to erasure and expanding privacy laws worldwide require personal data to be removed upon request. Regulators have not yet fully settled how erasure obligations apply to data already absorbed into a trained model, but this pressure is pushing machine unlearning from a research topic toward a practical necessity. Companies that fail to adapt risk substantial fines and reputational damage.

On the technical front, researchers are developing privacy-preserving AI techniques that could change how data is handled from the start. Federated learning, for instance, allows AI models to learn from distributed datasets without centralizing sensitive information: each participant trains on its own data and shares only model updates. Imagine a healthcare AI that improves by learning from patient data across multiple hospitals, while the central server never receives or stores individual medical records. Differential privacy adds carefully calibrated random noise to computations such as query results or training updates, so the output reveals very little about whether any single person’s data was included, while overall statistics remain useful.
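A minimal sketch of both ideas, under simplifying assumptions. Each hypothetical hospital fits a local least-squares model on synthetic data and shares only its weight vector and sample count, which a coordinator averages weighted by sample count. That is the core aggregation step of federated averaging, shown here as a single round rather than the usual repeated rounds of local training. Separately, a patient count is released with Laplace noise of scale 1/ε, the textbook Laplace mechanism for a query whose answer changes by at most 1 when one person is added or removed.

```python
import numpy as np

rng = np.random.default_rng(5)
true_w = np.array([1.5, -0.5])

def local_update(X, y):
    """Runs at each hospital: fit on local data, return only weights and sample count."""
    w, *_ = np.linalg.lstsq(X, y, rcond=None)
    return w, len(y)

def federated_average(updates):
    """Coordinator step: sample-weighted average of local weights (one FedAvg round)."""
    weights = np.array([w for w, _ in updates])
    counts = np.array([n for _, n in updates], dtype=float)
    return (weights * counts[:, None]).sum(axis=0) / counts.sum()

def laplace_count(true_count, epsilon, rng):
    """Laplace mechanism for a count query (sensitivity 1): add Laplace(1/epsilon) noise."""
    return true_count + rng.laplace(loc=0.0, scale=1.0 / epsilon)

updates = []
for n in (120, 300, 80):                   # three hypothetical hospitals of different sizes
    X = rng.normal(size=(n, 2))
    y = X @ true_w + rng.normal(scale=0.1, size=n)
    updates.append(local_update(X, y))     # only (weights, count) leave the hospital

global_w = federated_average(updates)
print("global model:", np.round(global_w, 2))
print("noisy patient count:", round(laplace_count(500, epsilon=0.5, rng=rng), 1))
```

Smaller values of `epsilon` mean more noise and stronger privacy, which is the accuracy trade-off mentioned below.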

Homomorphic encryption takes this further by enabling AI systems to process encrypted data without ever decrypting it. Think of it as a secure box where calculations happen inside, and only results emerge, keeping raw data perpetually protected.
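To make “calculations happen inside the box” concrete, here is a toy version of the Paillier cryptosystem, a real partially homomorphic scheme in which multiplying two ciphertexts produces an encryption of the sum of the plaintexts. The tiny hard-coded primes make it completely insecure; it is for illustration only (it assumes Python 3.9+ for `math.lcm`). Production systems use vetted libraries and far larger keys, and fully homomorphic schemes that support arbitrary computation are considerably more expensive.

```python
import math
import secrets

# Toy parameters: tiny primes, completely insecure, for illustration only
P, Q = 1009, 1013
N = P * Q
N_SQ = N * N
G = N + 1
LAM = math.lcm(P - 1, Q - 1)   # private key part
MU = pow(LAM, -1, N)           # private key part; valid because G = N + 1

def encrypt(m: int) -> int:
    while True:
        r = secrets.randbelow(N - 1) + 1   # random r in [1, N-1] coprime to N
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ

def decrypt(c: int) -> int:
    x = pow(c, LAM, N_SQ)
    return ((x - 1) // N) * MU % N

def add_encrypted(c1: int, c2: int) -> int:
    # Multiplying ciphertexts adds the hidden plaintexts (mod N); no private key needed
    return (c1 * c2) % N_SQ

salary_a, salary_b = 52_000, 61_500
total_ct = add_encrypted(encrypt(salary_a), encrypt(salary_b))
print(decrypt(total_ct))   # 113500
```

The party computing `add_encrypted` only ever sees ciphertexts; only the holder of `LAM` and `MU` can read the result.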

The challenge ahead lies in balancing innovation with individual rights. While these technologies offer promising solutions, they often come with performance trade-offs or increased computational costs. The future will likely see hybrid approaches combining multiple techniques, creating AI systems that are both powerful and respectful of privacy. For individuals, this means greater control over personal data. For organizations, it means building trust while maintaining competitive advantage in an increasingly regulated environment.

Understanding the AI data lifecycle isn’t just about technology—it’s about recognizing your place in an increasingly AI-driven world. Every time your data trains a model, influences a recommendation, or shapes an algorithm’s behavior, you’re contributing to systems that will affect millions of others. That’s why knowing how your information is collected, stored, retained, and potentially deleted matters more than ever.

The key takeaways are straightforward but powerful. First, remember that your data doesn’t simply disappear when you delete an account or request removal. It may persist in AI models through learned patterns, making machine unlearning an essential emerging practice. Second, you have rights—regulations like GDPR and CCPA exist to protect you, though enforcement and implementation vary widely. Third, transparency is your ally. Don’t hesitate to ask companies: How long do you keep my data? What happens when I request deletion? Can your AI systems actually forget what they learned from my information?

As AI technology evolves, so too will the relationship between privacy and innovation. We’re moving toward a future where responsible data stewardship isn’t optional but expected, where machine unlearning becomes standard practice, and where individuals have genuine control over their digital footprints. By staying informed and asking the right questions, you’re not just protecting yourself—you’re helping shape a more accountable AI ecosystem for everyone. The conversation about AI and privacy is far from over; it’s only just beginning.