In an era where AI systems increasingly drive critical decisions, model robustness stands as the cornerstone of reliable artificial intelligence. When a machine learning model performs brilliantly in controlled environments but fails spectacularly in real-world scenarios, we’re witnessing the consequences of poor robustness. This vulnerability to unexpected inputs, environmental changes, and adversarial attacks has become one of the most pressing challenges in modern AI development.

Consider a self-driving car that perfectly navigates sunny conditions but struggles during rainfall, or a facial recognition system that works flawlessly in lab tests but fails to account for different lighting conditions in practical applications. These examples highlight why robustness isn’t just a technical metric—it’s a fundamental requirement for deploying AI systems that people can trust.

The quest for robust models extends beyond mere accuracy scores. It demands a comprehensive approach that considers model stability across different scenarios, resistance to adversarial examples, and consistent performance under varying conditions. As AI systems become more integrated into critical infrastructure, healthcare, and financial systems, building models that maintain reliable performance in unpredictable real-world environments has never been more important.

What Makes an AI Model Robust?

Stability Under Pressure

Imagine a skilled surfer who maintains balance even when riding through turbulent waves. Similarly, robust AI models demonstrate remarkable stability when faced with data variations and unexpected disturbances. This stability under pressure is crucial for real-world applications where data rarely matches perfect training conditions.

Robust models achieve this stability through several key mechanisms. First, they employ data augmentation techniques during training, exposing the model to various data perturbations and noise patterns. This is like training an athlete under different weather conditions to perform consistently regardless of the environment.

Additionally, robust models utilize advanced regularization methods that prevent over-reliance on specific data features. Think of it as teaching the model to focus on fundamental patterns rather than superficial details that might change. For instance, a robust image recognition system should correctly identify a car whether the photo is slightly blurry, taken at night, or partially obscured by rain.

Modern robust models also incorporate uncertainty estimation, which lets them signal when they’re operating in unfamiliar territory. For example, a model can flag low-confidence predictions for human review instead of silently guessing. Recognizing these cases helps prevent catastrophic failures and keeps performance consistent across varying conditions.
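One simple way to act on uncertainty is to measure how spread out a classifier’s predicted probabilities are and to abstain when they are too flat. The sketch below is a minimal illustration. It assumes a model that outputs raw logits, and the entropy threshold `max_entropy` is a hypothetical value that would need tuning on held-out data. Softmax probabilities from a single network are often overconfident, so techniques such as ensembles or Monte Carlo dropout usually give more trustworthy estimates.

```python
import math

def softmax(logits):
    # Subtract the largest logit before exponentiating to avoid overflow.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predictive_entropy(probs):
    # Entropy in nats: 0 for a one-hot prediction, log(K) for a uniform one.
    return -sum(p * math.log(p) for p in probs if p > 0)

def predict_or_abstain(logits, max_entropy=0.5):
    """Return the predicted class index, or None when the prediction is too
    uncertain. max_entropy is a hypothetical threshold to tune on held-out data."""
    probs = softmax(logits)
    if predictive_entropy(probs) > max_entropy:
        return None  # defer to a human reviewer or a fallback system
    return max(range(len(probs)), key=probs.__getitem__)

print(predict_or_abstain([8.0, 0.5, 0.2]))  # confident -> 0
print(predict_or_abstain([1.0, 1.1, 0.9]))  # nearly uniform -> None
```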

Generalization Power

Generalization power is a crucial aspect of model robustness that determines how well an AI system performs when faced with new, unseen data. Think of it like teaching a child to recognize dogs – they shouldn’t just memorize the family pet but should be able to identify different breeds, sizes, and colors of dogs they’ve never seen before.

A robust model should maintain consistent performance across various scenarios, even when encountering data that differs from what it was trained on. This capability is developed through an effective model training framework that exposes the model to diverse examples and prevents overfitting.

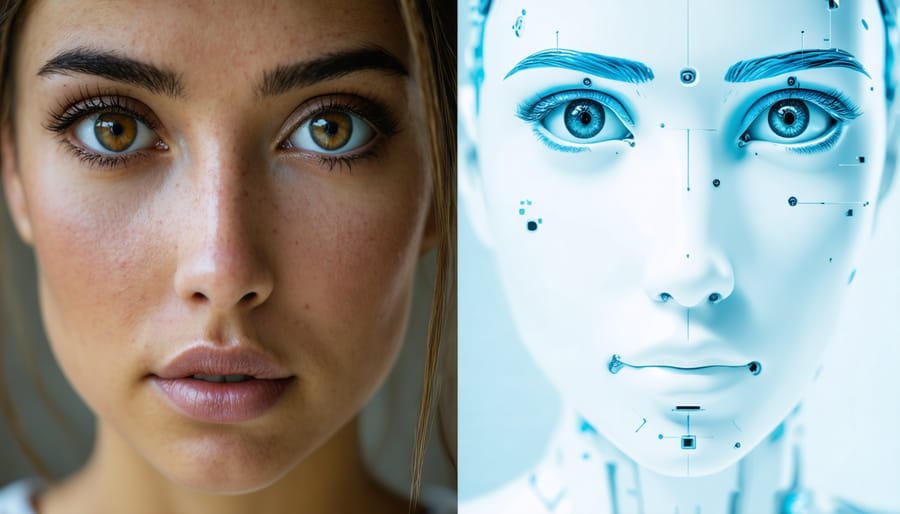

For instance, a facial recognition system should work reliably regardless of lighting conditions, angles, or whether the person is wearing glasses. Similarly, a speech recognition system should handle various accents and dialects, and a text model should cope with different writing styles. Strong generalization power ensures that AI systems remain reliable and useful in real-world applications, where conditions are often unpredictable and constantly changing.

To achieve this, models must be trained on diverse, high-quality datasets and regularly evaluated using validation sets that represent different scenarios they might encounter in practice.

Common Threats to Model Robustness

Adversarial Attacks

Adversarial attacks represent one of the most fascinating challenges in AI security, where subtle changes to input data can cause sophisticated models to make dramatic mistakes. Imagine slightly modifying a stop sign image in a way that’s barely noticeable to humans, yet causes an autonomous vehicle’s AI to interpret it as a speed limit sign – this is a classic example of an adversarial attack.

These attacks exploit the way AI models process input data. By adding carefully calculated perturbations (small changes to the input), attackers can steer the model’s output in predictable ways. One common technique is the Fast Gradient Sign Method (FGSM). It shifts every input feature by a small amount ε in whichever direction increases the model’s error, as given by the sign of the loss gradient with respect to that feature. Another is the Carlini & Wagner (C&W) attack, which solves an optimization problem to find a minimal perturbation that changes the model’s prediction.
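For a model with loss J, FGSM computes x_adv = x + ε · sign(∇ₓJ(x, y)). The sketch below applies that formula to a small logistic regression model. For this model, the input gradient of the binary cross-entropy loss has the closed form (p − y)·w. In a deep network, the gradient would come from automatic differentiation instead. The weights and input are made-up values, and clipping x_adv to a valid input range is omitted.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Probability of class 1 under a logistic regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, epsilon):
    """One FGSM step: x_adv = x + epsilon * sign(grad_x J(x, y))."""
    p = predict_proba(w, b, x)
    # For logistic regression with binary cross-entropy, grad_x J = (p - y) * w.
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical "trained" weights and an input whose true label is 1.
w, b = [2.0, -3.0, 1.5], 0.1
x, y = [0.5, 0.1, 0.3], 1
x_adv = fgsm(w, b, x, y, epsilon=0.25)

print(predict_proba(w, b, x))      # about 0.78: classified as 1
print(predict_proba(w, b, x_adv))  # about 0.41: now classified as 0
```

Here a change of at most 0.25 per feature pushes the predicted probability of the true class below 0.5, which flips the decision.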

The implications are significant across various domains. In facial recognition systems, wearing specially designed glasses could prevent identification. In medical imaging, subtle modifications to X-rays could lead to misdiagnosis. Even voice recognition systems can be fooled by adding nearly imperceptible noise to audio inputs.

Understanding these vulnerabilities is crucial for developing more robust AI systems. Researchers and developers are actively working on defensive techniques like adversarial training, where models are explicitly trained on adversarial examples, and input preprocessing methods that can detect and neutralize potential attacks before they reach the model.

Distribution Shifts

Distribution shifts occur when the data patterns your model encounters in the real world differ significantly from the training data. Imagine training a self-driving car system using only daytime footage and then expecting it to perform equally well at night – this is a classic example of a distribution shift that can severely impact model performance.

These shifts come in various forms. Temporal shifts happen when data patterns change over time, as when social media trends or consumer behavior evolve. Geographic shifts occur when a model trained in one location is deployed in another with different characteristics. Technical shifts can emerge from changes in data collection methods or sensor upgrades.
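A common first step in catching these shifts is to compare the distribution of each input feature in production against the training data. The sketch below computes the two-sample Kolmogorov–Smirnov (KS) statistic, which is the largest gap between two empirical cumulative distribution functions. It runs on synthetic Gaussian data that stands in for a real feature, and the 0.8 mean shift is an assumed example. In practice, `scipy.stats.ks_2samp` also returns a p-value, and the alert threshold is a per-application choice.

```python
import bisect
import random

def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic: the largest absolute gap between
    the empirical CDFs of two samples (0 = identical, 1 = no overlap)."""
    ref, cur = sorted(reference), sorted(current)
    gap = 0.0
    # The maximum gap is always attained at one of the observed values.
    for v in ref + cur:
        cdf_ref = bisect.bisect_right(ref, v) / len(ref)
        cdf_cur = bisect.bisect_right(cur, v) / len(cur)
        gap = max(gap, abs(cdf_ref - cdf_cur))
    return gap

rng = random.Random(0)
train_feature = [rng.gauss(0.0, 1.0) for _ in range(2000)]
same_dist = [rng.gauss(0.0, 1.0) for _ in range(2000)]
shifted = [rng.gauss(0.8, 1.0) for _ in range(2000)]  # e.g. after a sensor upgrade

print(ks_statistic(train_feature, same_dist))  # small
print(ks_statistic(train_feature, shifted))    # much larger
```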

The challenge is particularly relevant in critical applications. A medical diagnosis model trained primarily on data from one demographic group might perform poorly when used with patients from different backgrounds. Similarly, facial recognition systems trained mainly on certain ethnicities might show reduced accuracy when encountering diverse populations.

To address distribution shifts, practitioners employ several strategies. Regular model retraining with updated data helps adapt to new patterns. Robust testing across diverse scenarios can identify potential weaknesses before deployment. Some advanced techniques, like domain adaptation and invariant learning, specifically target this challenge by helping models learn features that remain stable across different distributions.

Understanding and preparing for distribution shifts is crucial for building reliable AI systems that can maintain their performance in dynamic real-world conditions.

Testing Model Robustness

Stress Testing Methods

Evaluating model robustness requires systematic stress testing through various methods designed to challenge and assess AI systems under different conditions. One fundamental approach is real-world performance testing, where models are exposed to actual deployment scenarios with unpredictable inputs and varying environmental factors.

Adversarial testing is another crucial method, involving the deliberate introduction of slightly modified inputs designed to trick the model. For example, adding subtle noise to images or changing a few words in text can reveal vulnerabilities in seemingly robust systems.

Data augmentation testing helps assess how models handle variations in input data. This includes testing with rotated or scaled images, text with different writing styles, or audio with background noise. By systematically introducing these variations, developers can identify weak points in their models’ performance.
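A perturbation test can be organized as a small harness. It applies each named transformation to the test inputs and reports accuracy alongside the clean baseline. In this sketch, the model and data are hypothetical stand-ins (a linear rule on random 2-D points). The perturbation names and strengths are assumptions chosen for illustration.

```python
import random

def accuracy(model, xs, ys):
    return sum(model(x) == y for x, y in zip(xs, ys)) / len(ys)

def perturbation_report(model, xs, ys, perturbations):
    """Accuracy on clean inputs and under each named perturbation."""
    report = {"clean": accuracy(model, xs, ys)}
    for name, perturb in perturbations.items():
        report[name] = accuracy(model, [perturb(x) for x in xs], ys)
    return report

rng = random.Random(42)

def add_noise(sigma):
    return lambda x: [v + rng.gauss(0.0, sigma) for v in x]

def model(x):
    # Hypothetical stand-in for a trained classifier: a fixed linear rule.
    return int(x[0] + x[1] > 0)

xs = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(1000)]
ys = [model(x) for x in xs]  # labels come from the same rule

perturbations = {
    "noise_0.1": add_noise(0.1),
    "noise_0.5": add_noise(0.5),
    "scale_0.5": lambda x: [0.5 * v for v in x],  # e.g. a weaker signal
}
report = perturbation_report(model, xs, ys, perturbations)
for name, acc in report.items():
    print(f"{name:>10}: {acc:.3f}")
```

The toy rule depends only on the sign of x[0] + x[1], so uniform scaling leaves accuracy unchanged. Gaussian noise lowers accuracy, and the larger noise level hurts more. With a real model, a report like this points to the variations worth addressing.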

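Cross-validation testing splits the data into multiple subsets (folds). The model is repeatedly trained on all but one fold and tested on the held-out fold, which checks whether performance is consistent across different portions of the data. Large differences between folds suggest the model is overly dependent on specific data patterns. Because random folds are drawn from the same distribution, they say little about distribution shift. Splitting by group (for example, by collection site or time period) is a closer proxy for that.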
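The fold logic is short enough to write out. The sketch below uses a hypothetical nearest-centroid classifier on synthetic 1-D data so that it runs with only the standard library. The mean shows typical performance, and the standard deviation across folds is the consistency signal. Libraries such as scikit-learn provide `KFold` and `GroupKFold` for the same purpose.

```python
import random
import statistics

def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 once and deal them into k roughly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def cross_validate(fit, score, xs, ys, k=5):
    """Train on k-1 folds, score on the held-out fold; return (mean, stdev)."""
    scores = []
    for held_out in k_fold_indices(len(xs), k):
        held = set(held_out)
        train = [i for i in range(len(xs)) if i not in held]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        scores.append(score(model, [xs[i] for i in held_out], [ys[i] for i in held_out]))
    return statistics.mean(scores), statistics.stdev(scores)

# Hypothetical toy learner: nearest class centroid on 1-D features.
def fit_centroids(xs, ys):
    return {c: statistics.mean(x for x, y in zip(xs, ys) if y == c) for c in set(ys)}

def score_centroids(centroids, xs, ys):
    preds = [min(centroids, key=lambda c: abs(x - centroids[c])) for x in xs]
    return sum(p == y for p, y in zip(preds, ys)) / len(ys)

rng = random.Random(1)
ys = [i % 2 for i in range(200)]
xs = [rng.gauss(2.0 * y, 1.0) for y in ys]  # class 0 centered at 0, class 1 at 2
mean_acc, std_acc = cross_validate(fit_centroids, score_centroids, xs, ys, k=5)
print(f"accuracy {mean_acc:.3f} +/- {std_acc:.3f} across folds")
```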

Edge case testing focuses on unusual or extreme scenarios that might occur rarely but could be critical for the model’s reliability. This includes testing with outlier data, boundary conditions, and unexpected input combinations that might challenge the model’s decision-making capabilities.
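Edge-case tests often expose numerical problems rather than modelling ones. The harness below is an illustrative sketch. It feeds extreme and special floating-point values into two versions of a sigmoid output layer and records any exception or any output outside [0, 1]. The naive version overflows for large negative inputs. The stable version survives them but still returns NaN for a NaN input, which shows that invalid inputs also need validation upstream. The input list is an assumed example, not an exhaustive suite.

```python
import math

def naive_sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def stable_sigmoid(z):
    # Only ever exponentiate a non-positive number, so math.exp cannot overflow.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

EDGE_CASES = [0.0, 1e-12, 745.0, -745.0, 1e6, -1e6,
              float("inf"), float("-inf"), float("nan")]

def run_edge_cases(fn, cases):
    """Return (input, problem) pairs for inputs that raise or yield an invalid probability."""
    failures = []
    for z in cases:
        try:
            p = fn(z)
        except (OverflowError, ValueError) as exc:
            failures.append((z, type(exc).__name__))
            continue
        if not 0.0 <= p <= 1.0:  # also true for NaN, which fails every comparison
            failures.append((z, p))
    return failures

print(run_edge_cases(naive_sigmoid, EDGE_CASES))   # overflows at -745 and -1e6, NaN for nan
print(run_edge_cases(stable_sigmoid, EDGE_CASES))  # only the NaN input
```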

Key Metrics and Benchmarks

When evaluating model robustness, several key metrics and benchmarks help us understand how well our AI models perform under various conditions. One fundamental metric is accuracy under perturbation, which measures how well a model maintains its performance when input data is slightly modified or corrupted.

Error rate on adversarial examples is another crucial benchmark, indicating the percentage of instances where the model fails when faced with deliberately crafted inputs designed to fool it. A robust model should maintain relatively stable performance even when processing these challenging cases.

Cross-domain generalization serves as a vital metric, measuring how effectively a model performs on data from different sources or contexts than its training data. For instance, an image recognition model trained on daytime photos should ideally maintain reasonable accuracy when processing nighttime images.

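Stability scores quantify how consistently a model produces the same outputs for similar inputs. A lower variance in predictions for slightly different versions of the same input indicates better robustness. Engineers often use the Lipschitz constant as a mathematical measure of this stability. It is the smallest L such that ‖f(x) − f(x′)‖ ≤ L‖x − x′‖ for all inputs x and x′, so a small L guarantees that small input changes can only produce small output changes.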
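Both ideas can be estimated empirically. The sketch below measures two quantities. The first is the spread of a model’s output under small Gaussian input noise. The second is the largest observed ratio |f(x) − f(x′)| / ‖x − x′‖ over random nearby pairs. That ratio is only a lower bound on the true Lipschitz constant because sampling can miss the worst case, and certified bounds require dedicated methods. The functions `smooth` and `steep` are hypothetical models, and the radius, noise level, and sample counts are assumed values.

```python
import math
import random
import statistics

def empirical_lipschitz(f, inputs, radius=0.01, samples=50, seed=0):
    """Largest observed |f(x) - f(x')| / ||x - x'|| over random pairs within
    `radius` of each input: a lower bound on the true Lipschitz constant."""
    rng = random.Random(seed)
    best = 0.0
    for x in inputs:
        fx = f(x)
        for _ in range(samples):
            x2 = [v + rng.uniform(-radius, radius) for v in x]
            dist = math.dist(x, x2)
            if dist > 0:
                best = max(best, abs(f(x2) - fx) / dist)
    return best

def prediction_spread(f, x, sigma=0.01, samples=100, seed=0):
    """Standard deviation of f's output under small Gaussian input noise."""
    rng = random.Random(seed)
    outs = [f([v + rng.gauss(0.0, sigma) for v in x]) for _ in range(samples)]
    return statistics.pstdev(outs)

def smooth(x):
    # Linear model; its Lipschitz constant is ||(0.5, -0.2)|| = sqrt(0.29), about 0.54.
    return 0.5 * x[0] - 0.2 * x[1]

def steep(x):
    # Saturating model that is very sensitive near x[0] = 0 (slope 50 there).
    return math.tanh(50.0 * x[0])

points = [[0.0, 0.0], [0.3, -0.4], [1.0, 1.0]]
print(empirical_lipschitz(smooth, points), empirical_lipschitz(steep, points))
print(prediction_spread(smooth, [0.0, 0.0]), prediction_spread(steep, [0.0, 0.0]))
```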

For real-world applications, metrics like performance under noise and environmental stress testing are essential. These measure how well models handle common real-world interference such as sensor noise, lighting changes, or weather conditions. Teams often set an acceptance threshold for these tests, such as requiring the model to retain at least 80% of its baseline performance under each variation. The appropriate figure depends on the application and its risk.
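The retention check itself is a ratio of stressed performance to baseline performance. The sketch below uses an 80% threshold as its default. The accuracy figures are invented placeholders, not measured results, and the condition names are hypothetical.

```python
def retention(baseline, stressed):
    """Fraction of baseline performance kept under a stress condition."""
    return stressed / baseline

def check_retention(baseline, stressed_scores, min_retention=0.8):
    # min_retention = 0.8 is an example threshold; pick one per application.
    return {cond: retention(baseline, score) >= min_retention
            for cond, score in stressed_scores.items()}

# Invented placeholder numbers, not measured results.
baseline_acc = 0.92
stressed = {"sensor_noise": 0.85, "low_light": 0.70, "rain": 0.78}
print(check_retention(baseline_acc, stressed))
```

With these placeholder numbers, only the low-light condition falls below the threshold (0.70 / 0.92 ≈ 0.76).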

Modern benchmarks also include fairness metrics, ensuring models remain robust across different demographic groups and don’t show significant performance disparities based on sensitive attributes.
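One simple disparity measure is the largest accuracy gap between groups. The sketch below computes per-group accuracy and that gap from hypothetical predictions. Other fairness criteria, such as equal false-positive or false-negative rates across groups, can disagree with this measure, so the choice of metric depends on the application.

```python
from collections import defaultdict

def group_accuracies(preds, labels, groups):
    """Accuracy computed separately for each group label."""
    correct, total = defaultdict(int), defaultdict(int)
    for p, y, g in zip(preds, labels, groups):
        total[g] += 1
        correct[g] += int(p == y)
    return {g: correct[g] / total[g] for g in total}

def max_accuracy_gap(preds, labels, groups):
    """Difference between the best- and worst-served groups."""
    accs = group_accuracies(preds, labels, groups)
    return max(accs.values()) - min(accs.values())

# Hypothetical predictions for two groups, "a" and "b".
preds = [1, 0, 1, 1, 0, 1, 0, 0]
labels = [1, 0, 1, 1, 1, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(group_accuracies(preds, labels, groups))  # {'a': 1.0, 'b': 0.25}
print(max_accuracy_gap(preds, labels, groups))  # 0.75
```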

Building Robust AI Models

Data Augmentation Techniques

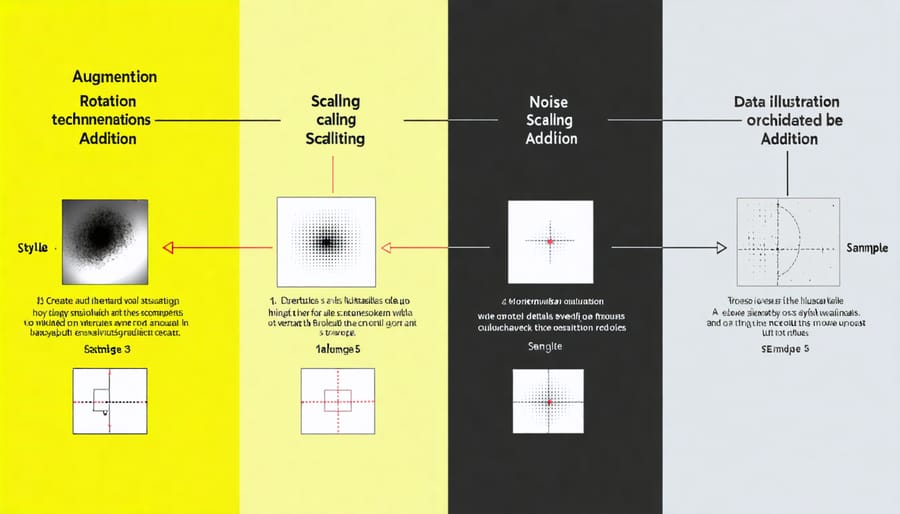

Data augmentation is a powerful strategy that helps make AI models more robust by artificially expanding the training dataset. Think of it as teaching a model to recognize a cat not just from perfect, front-facing photos, but also from different angles, lighting conditions, and scenarios.

Common image augmentation techniques include rotation, flipping, scaling, and adding noise to existing images. For instance, a single photo of a stop sign can be transformed into dozens of variations by slightly rotating it, changing its brightness, or adding weather effects like rain or snow. This helps the model learn to recognize stop signs in various real-world conditions.
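The basic image transformations amount to simple array operations. This sketch represents a grayscale image as a list of pixel rows. It applies a random horizontal flip, a brightness change, and Gaussian noise, clipping values to the 0–255 range. The brightness factor and noise ranges are assumed values. Whether a transform preserves the label depends on the task: a flip is harmless for a photo of a cat but mirrors the lettering on a stop sign. Rotation by arbitrary angles needs interpolation, which libraries such as torchvision (`transforms.RandomRotation`) provide.

```python
import random

def hflip(img):
    """Mirror each pixel row left to right."""
    return [row[::-1] for row in img]

def adjust_brightness(img, factor):
    """Scale pixel intensities, clipping to the valid 0-255 range."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in img]

def add_gaussian_noise(img, sigma, rng):
    """Add independent Gaussian noise to every pixel, then clip."""
    return [[min(255, max(0, round(p + rng.gauss(0.0, sigma)))) for p in row] for row in img]

def augment(img, rng):
    """Random variant of img, assuming flips preserve the label for this task."""
    if rng.random() < 0.5:
        img = hflip(img)
    img = adjust_brightness(img, rng.uniform(0.7, 1.3))
    return add_gaussian_noise(img, sigma=5.0, rng=rng)

image = [[0, 50, 100], [150, 200, 250]]  # a tiny 2x3 grayscale "image"
rng = random.Random(0)
variants = [augment(image, rng) for _ in range(5)]
for v in variants:
    print(v)
```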

For text data, augmentation might involve synonym replacement, back-translation (translating text to another language and back), or random insertion and deletion of words. These techniques help models understand the same meaning expressed in different ways. For example, “The movie was great” and “The film was excellent” should be recognized as expressing similar sentiments.
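Synonym replacement and random deletion can be sketched in a few lines. The synonym table here is a hypothetical toy dictionary, and the probabilities `p` are assumed values. Production systems typically draw synonyms from a thesaurus or language model and check that the label still holds. Back-translation is omitted because it requires a translation model.

```python
import random

# Hypothetical toy synonym table; real pipelines use a thesaurus or a language model.
SYNONYMS = {"movie": ["film"], "great": ["excellent", "wonderful"]}

def synonym_replace(tokens, rng, p=0.5):
    """Swap each word that has known synonyms for one of them with probability p."""
    return [rng.choice(SYNONYMS[t]) if t in SYNONYMS and rng.random() < p else t
            for t in tokens]

def random_delete(tokens, rng, p=0.1):
    """Drop each word with probability p, but never return an empty sentence."""
    kept = [t for t in tokens if rng.random() >= p]
    return kept or [rng.choice(tokens)]

rng = random.Random(3)
sentence = "the movie was great".split()
for _ in range(3):
    print(" ".join(random_delete(synonym_replace(sentence, rng), rng)))
```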

Audio augmentation techniques include adding background noise, changing pitch or speed, and time-shifting. This helps voice recognition systems work reliably in noisy environments or with different speaking styles.
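Two of these audio transformations are easy to express on raw samples. The sketch below adds white Gaussian noise at a target signal-to-noise ratio (SNR, in decibels) and applies a circular time shift to a synthetic 440 Hz tone. The sample rate, SNR, and shift amount are assumptions. Pitch and speed changes need resampling or phase-vocoder methods, which libraries such as librosa implement (`librosa.effects.pitch_shift`, `librosa.effects.time_stretch`).

```python
import math
import random

def add_noise_snr(signal, snr_db, rng):
    """Add white Gaussian noise scaled so that signal power / noise power
    equals snr_db decibels."""
    power = sum(s * s for s in signal) / len(signal)
    noise_power = power / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power)
    return [s + rng.gauss(0.0, sigma) for s in signal]

def time_shift(signal, shift):
    """Circularly shift samples by `shift` positions (positive = later)."""
    shift %= len(signal)
    return signal[-shift:] + signal[:-shift]

sr = 8000  # hypothetical sample rate in Hz
tone = [math.sin(2 * math.pi * 440 * n / sr) for n in range(sr)]  # 1 s at 440 Hz
rng = random.Random(0)
noisy = add_noise_snr(tone, snr_db=10.0, rng=rng)
shifted = time_shift(tone, 800)  # a 0.1 s shift
```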

When implementing data augmentation, it’s important to maintain realistic transformations. Over-aggressive augmentation can create unrealistic examples that might harm model performance. The key is to create variations that reflect real-world scenarios your model might encounter.

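Modern deep learning frameworks offer built-in augmentation tools, making it easier to implement these techniques. By using data augmentation strategically, you can build models that perform consistently across different conditions. On its own, however, augmentation with natural transformations typically offers limited protection against deliberately crafted adversarial attacks, which is why it is often combined with adversarial training.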

Defensive Training Strategies

Developing robust AI models requires implementing specialized training strategies that go beyond traditional approaches. One of the most effective methods is adversarial training, where models are deliberately exposed to challenging examples during the training phase. This process helps them develop resistance against potential attacks and unexpected inputs.
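Below is a minimal sketch of adversarial training. It assumes a logistic regression model so that the FGSM step has a closed-form input gradient. At each SGD step, the training example is replaced by an FGSM example crafted against the current weights. The synthetic data, ε = 0.3, learning rate, and epoch count are assumptions. Feature `x[1]` is strongly predictive but small in scale, so an ε-sized change can flip it. Comparing clean and attacked accuracy for a standard model and an adversarially trained one shows how much each relies on that brittle feature. Many practical variants mix clean and adversarial examples instead of training on adversarial ones alone, and they use stronger multi-step attacks than FGSM.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def logit(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def fgsm(w, b, x, y, eps):
    # For logistic regression, grad_x of the cross-entropy loss is (p - y) * w.
    p = sigmoid(logit(w, b, x))
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, [(p - y) * wi for wi in w])]

def train(data, eps=0.0, lr=0.1, epochs=50, seed=0):
    """Plain SGD on the logistic loss. With eps > 0, every example is replaced by
    an FGSM example crafted against the current weights (adversarial training)."""
    rng = random.Random(seed)
    data = list(data)
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            if eps > 0:
                x = fgsm(w, b, x, y, eps)
            err = sigmoid(logit(w, b, x)) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

def accuracy(w, b, data, eps=0.0):
    """Clean accuracy (eps = 0) or accuracy under an FGSM attack of size eps."""
    hits = 0
    for x, y in data:
        if eps > 0:
            x = fgsm(w, b, x, y, eps)
        hits += int((logit(w, b, x) > 0) == (y == 1))
    return hits / len(data)

# Synthetic data: x[0] is robustly predictive, x[1] is predictive but tiny in scale.
rng = random.Random(1)
data = []
for _ in range(400):
    y = rng.randint(0, 1)
    s = 1.0 if y == 1 else -1.0
    data.append(([rng.gauss(s, 0.5), rng.gauss(0.2 * s, 0.05)], y))
train_set, test_set = data[:300], data[300:]

models = {name: train(train_set, eps=eps) for name, eps in [("standard", 0.0), ("adversarial", 0.3)]}
for name, (w, b) in models.items():
    print(name, "clean:", accuracy(w, b, test_set), "under FGSM:", accuracy(w, b, test_set, eps=0.3))
```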

Data augmentation plays a crucial role in defensive training by introducing controlled variations in the training data. By applying transformations like rotation, scaling, and adding noise, models learn to handle diverse scenarios more effectively. This approach is particularly valuable when combined with advanced model tuning techniques to optimize performance across different conditions.

Ensemble learning represents another powerful defensive strategy, where multiple models work together to make predictions. This approach reduces the impact of individual model vulnerabilities and increases overall system reliability. When implementing ensemble methods, it’s essential to maintain alignment with ethical AI development practices to ensure responsible deployment.
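The two most common ways to combine classifiers are hard voting, where each model casts a vote for a label, and soft voting, which averages predicted probabilities. The sketch below shows both, with hypothetical threshold and logistic models standing in for independently trained ones. Ensembles help most when their members make different errors. Adversarial examples often transfer between models trained on similar data, so an ensemble alone is not a complete defense against attacks.

```python
import math
from collections import Counter

def majority_vote(models, x):
    """Hard voting: each model predicts a label and the most common label wins.
    Counter.most_common breaks ties by first occurrence, i.e. by model order."""
    return Counter(m(x) for m in models).most_common(1)[0][0]

def average_proba(models, x):
    """Soft voting: average each model's predicted probability of class 1."""
    return sum(m(x) for m in models) / len(models)

def make_threshold(t):
    return lambda x: int(x > t)

def make_logistic(w, b):
    return lambda x: 1.0 / (1.0 + math.exp(-(w * x + b)))

# Hypothetical members standing in for independently trained models.
threshold_models = [make_threshold(0.4), make_threshold(0.5), make_threshold(0.9)]
proba_models = [make_logistic(4.0, -2.0), make_logistic(6.0, -3.5), make_logistic(3.0, -2.5)]

print(majority_vote(threshold_models, 0.7))  # two of three vote 1 -> 1
print(round(average_proba(proba_models, 0.7), 3))
```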

Regular validation against diverse test sets helps identify potential weaknesses early in the training process. This includes testing against both natural variations and artificially generated challenging cases. Implementing early stopping mechanisms and careful monitoring of validation metrics prevents overfitting while maintaining model robustness.
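Patience-based early stopping reduces to a few lines of bookkeeping over the validation curve. In this sketch, the loss values are invented, and `patience` and `min_delta` are assumed settings. In a real training loop, you would also save the weights from `best_epoch` and restore them after stopping.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch). Training stops once the validation loss
    has failed to improve by more than min_delta for `patience` epochs in a row."""
    best, best_epoch, waited = float("inf"), -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1

losses = [0.90, 0.70, 0.60, 0.58, 0.59, 0.61, 0.60, 0.65]  # invented validation curve
print(early_stopping_epoch(losses, patience=3))  # (3, 6)
```

On the sample curve, the best loss occurs at epoch 3. Training stops at epoch 6, after three epochs without improvement.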

Incorporating regularization techniques like dropout and weight decay helps create more generalizable models that perform reliably across different scenarios. These methods work by preventing the model from becoming too dependent on specific features or patterns in the training data, ultimately leading to better real-world performance.
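Both regularizers are simple to state in code. The sketch below shows two things. The first is an SGD update with L2 weight decay, which adds `weight_decay * w` to the gradient. The second is inverted dropout, which zeroes units at random during training and rescales the survivors so the expected activation is unchanged. The learning rate, decay, and dropout rate are assumed values. With adaptive optimizers such as Adam, adding an L2 term to the gradient is not equivalent to decoupled weight decay, which is why AdamW exists.

```python
import random

def sgd_step_with_weight_decay(w, grad, lr=0.1, weight_decay=0.01):
    """L2 weight decay adds weight_decay * w to the gradient, shrinking every
    weight toward zero on each step unless the data keeps pushing it back."""
    return [wi - lr * (gi + weight_decay * wi) for wi, gi in zip(w, grad)]

def dropout(activations, p, rng, training=True):
    """Inverted dropout: zero each unit with probability p during training and
    scale survivors by 1/(1-p) so the expected activation is unchanged."""
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)
print(sgd_step_with_weight_decay([2.0, -1.0], grad=[0.0, 0.0]))  # pure decay
print(dropout([1.0, 2.0, 3.0, 4.0], p=0.5, rng=rng))
print(dropout([1.0, 2.0, 3.0, 4.0], p=0.5, rng=rng, training=False))
```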

Model robustness remains a critical challenge in the evolving landscape of artificial intelligence and machine learning. As we’ve explored throughout this article, creating reliable and resilient AI models requires a multi-faceted approach combining theoretical understanding with practical implementation strategies.

The key takeaways emphasize the importance of comprehensive testing across diverse scenarios, careful consideration of edge cases, and regular evaluation of model performance under various conditions. Techniques such as data augmentation, adversarial training, and ensemble methods have proven effective in enhancing model robustness, but they must be applied thoughtfully based on specific use cases and requirements.

Looking ahead, several areas deserve attention from researchers and practitioners. The growing complexity of AI systems demands more sophisticated robustness evaluation methods. Additionally, as AI applications expand into critical domains like healthcare and autonomous vehicles, the need for standardized robustness metrics and certification procedures becomes increasingly important.

Future considerations should focus on developing more efficient testing methodologies, improving interpretability of robust models, and creating frameworks that can automatically adapt to new types of adversarial attacks. The field must also address the balance between model robustness and computational efficiency, as robust models often require significant computational resources.

Success in achieving model robustness will ultimately depend on continued collaboration between researchers, practitioners, and industry stakeholders, along with a commitment to establishing best practices and standards for robust AI systems.