Robust z-scores are a practical tool for handling outliers in data analysis and model monitoring. Unlike traditional z-scores, whose mean and standard deviation can be pulled far off by a few extreme values, robust z-scores use the median and the median absolute deviation (MAD) to measure how far a point sits from the bulk of the data. This matters in AI systems, where outlier detection directly affects model performance and security.

Consider a fraud detection system processing millions of transactions daily. A handful of extreme transactions can inflate the mean and standard deviation so much that standard z-scores stop flagging genuinely suspicious activity, while robust z-scores keep working because the median and MAD barely move. This resilience makes them well suited to real-world applications where data anomalies are common and mistakes are costly.

By incorporating robust z-scores into your analysis pipeline, you gain a metric that stays stable on contaminated datasets: the median and MAD tolerate corruption of up to half the values before they break down. Keep in mind that the MAD treats both sides of the median alike, so heavily skewed data may still benefit from a transformation before scoring. This stability has become increasingly important as modern AI systems face growing demands for accuracy and reliability in real-world environments.

Understanding Robust Z-Scores in AI Security

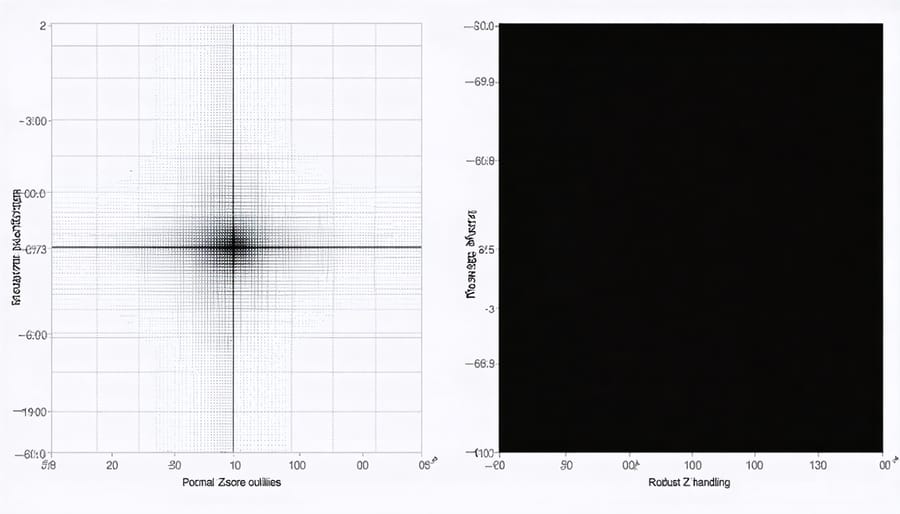

Traditional vs. Robust Z-Scores

While traditional z-scores rely on mean and standard deviation calculations, robust z-scores take a different approach by using median and median absolute deviation (MAD). This distinction becomes crucial when evaluating AI model performance, especially in datasets containing outliers or anomalies.

Traditional z-scores can be misleading when your data isn’t perfectly normal or contains extreme values, as both mean and standard deviation are sensitive to outliers. In contrast, robust z-scores maintain their reliability even with skewed or noisy data, making them particularly valuable for AI applications dealing with real-world datasets.

Consider a fraud detection model: traditional z-scores might miss anomalies because a few very large transactions skew the mean and inflate the standard deviation, which shrinks every score. Robust z-scores remain stable and identify truly suspicious patterns more accurately. This resilience to outliers makes them especially useful in security applications, automated quality control, and anomaly detection systems where accuracy in identifying deviations is crucial.
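To make the contrast concrete, here is a minimal sketch that scores the same list both ways. The transaction amounts are made up for illustration, the function names are hypothetical, and the code assumes the MAD is nonzero.

```python
from statistics import mean, median, stdev


def classic_z_scores(values):
    """Traditional z-scores: mean and sample standard deviation."""
    mu, sigma = mean(values), stdev(values)
    return [(v - mu) / sigma for v in values]


def robust_z_scores(values, scale=1.4826):
    """Robust z-scores: median and MAD (assumes the MAD is not zero)."""
    center = median(values)
    mad = median(abs(v - center) for v in values)
    return [(v - center) / (scale * mad) for v in values]


# Hypothetical amounts: eight ordinary payments and two very large ones.
amounts = [20, 22, 25, 21, 23, 24, 22, 26, 5000, 5200]

classic = classic_z_scores(amounts)
robust = robust_z_scores(amounts)

for amount, zc, zr in zip(amounts, classic, robust):
    print(f"{amount:>6}  classic={zc:6.2f}  robust={zr:9.2f}")
```

With these numbers, the two large payments inflate the standard deviation enough that neither reaches a traditional z-score of 3, while their robust scores run into the thousands.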

The Mathematics Made Simple

The robust z-score calculation takes a refreshingly simple approach to identifying outliers. Instead of using the mean and standard deviation, which can be skewed by extreme values, it relies on the median and Median Absolute Deviation (MAD).

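Here’s how it works: First, find the median of your dataset. Then, calculate how far each data point is from this median. Next, find the median of these absolute distances: that’s your MAD. Finally, divide each point’s signed distance from the median by the MAD multiplied by a constant factor of 1.4826. The constant rescales the MAD so that, for normally distributed data, it estimates the standard deviation, which keeps robust z-scores on roughly the same scale as traditional ones.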

Think of it like measuring how unusual someone’s height is in a crowd. Rather than using the average height (which could be thrown off by a few extremely tall people), you use the middle height (median) as your reference point. The MAD tells you what a typical difference from this middle height looks like.

The formula looks like this:

Robust Z-score = (x – median) / (1.4826 × MAD)

This approach gives you a more reliable way to spot genuine outliers, especially when your data isn’t perfectly normal or contains extreme values.
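The steps above can be sketched in a few lines of Python using only the standard library. The function name `robust_z_scores` and the sample heights are illustrative, and raising an error when the MAD is zero is one possible design choice rather than a fixed rule.

```python
from statistics import median

MAD_SCALE = 1.4826  # rescales the MAD to match the standard deviation for normal data


def robust_z_scores(values):
    """Return the robust z-score of every value, using the median and MAD."""
    center = median(values)
    # Absolute distance of each point from the median.
    distances = [abs(v - center) for v in values]
    mad = median(distances)
    if mad == 0:
        # More than half the values are identical, so the score is undefined.
        raise ValueError("MAD is zero; robust z-scores are undefined for this data")
    return [(v - center) / (MAD_SCALE * mad) for v in values]


# Hypothetical heights in centimetres, with one unusually tall person.
heights_cm = [160, 165, 170, 172, 175, 178, 210]
scores = robust_z_scores(heights_cm)
```

For these sample heights the median is 172 and the MAD is 6, so the 210 cm value scores about 4.27 while everyone else stays within ±1.5.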

Practical Applications in AI Model Security

Detecting Adversarial Attacks

Robust z-scores can also help detect adversarial attacks on machine learning models. These attacks attempt to fool AI systems by introducing subtle manipulations to input data, which makes them hard to identify using traditional methods.

Robust z-scores are useful for spotting anomalies that might indicate an attack because their baseline is not distorted by the extreme values an attacker may inject. For example, in image classification systems, an adversarial attack might slightly alter pixel values to make a stop sign appear as a speed limit sign to an AI model. By calculating robust z-scores for various features of the input data, security systems can flag suspicious deviations that might otherwise go unnoticed, although perturbations crafted to stay within normal per-feature ranges may need additional detectors.

The method is effective because it establishes a baseline of normal behavior using the median and MAD instead of the mean and standard deviation. A few injected extreme values barely move that baseline, so attackers cannot easily widen it to hide their manipulations within what appears to be normal statistical variation.

In practice, security teams implement robust z-score monitoring in real-time, setting threshold values that trigger alerts when scores exceed certain limits. This creates an early warning system that can detect potential attacks before they cause significant damage, allowing for prompt investigation and response to security threats.

Model Performance Monitoring

Robust z-scores play a crucial role in real-time model monitoring, helping data scientists and AI engineers maintain the reliability of their machine learning systems. By continuously calculating robust z-scores for model outputs and performance metrics, teams can quickly identify when their models start behaving unusually or degrading in quality.

Think of robust z-scores as vigilant security guards for your AI models. They watch for subtle shifts in performance that might indicate problems like data drift, concept drift, or model staleness. For example, if your recommendation system suddenly starts generating predictions with unusually high confidence scores, robust z-scores can flag this behavior before it impacts user experience.

To implement effective monitoring, consider tracking metrics like:

– Prediction confidence scores

– Error rates and accuracy measures

– Processing time and resource usage

– Input data distribution characteristics

When any of these metrics exceeds a predetermined robust z-score threshold (commonly somewhere between ±3 and ±3.5), automated alerts can notify the team to investigate. This proactive approach helps maintain model health and prevents degradation of service quality.

Remember to periodically review and adjust your monitoring thresholds based on your specific use case and risk tolerance. What constitutes an “unusual” deviation in one context might be perfectly normal in another.
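As a rough sketch of such a check, the snippet below scores the latest value of each metric against its own recent history. The metric names, history values, and the `check_metrics` helper are all hypothetical; a real system would pull these from its monitoring store.

```python
from statistics import median


def latest_robust_z(history, latest, scale=1.4826):
    """Score one new observation against a baseline of past values."""
    center = median(history)
    mad = median(abs(v - center) for v in history)
    if mad == 0:
        return None  # baseline has no spread; no meaningful score
    return (latest - center) / (scale * mad)


def check_metrics(baselines, latest_values, threshold=3.0):
    """Return {metric_name: score} for every metric beyond the threshold."""
    alerts = {}
    for name, history in baselines.items():
        z = latest_robust_z(history, latest_values[name])
        if z is not None and abs(z) > threshold:
            alerts[name] = z
    return alerts


# Hypothetical recent history for two monitored metrics.
baselines = {
    "mean_confidence": [0.71, 0.69, 0.72, 0.70, 0.68, 0.73, 0.70],
    "error_rate": [0.050, 0.048, 0.052, 0.051, 0.049, 0.050, 0.053],
}
latest_values = {"mean_confidence": 0.95, "error_rate": 0.051}

alerts = check_metrics(baselines, latest_values)
```

Here only `mean_confidence` is flagged: a jump to 0.95 is far outside its usual spread, while the error rate stays well within its normal range.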

Implementation Best Practices

Setting Appropriate Thresholds

Determining appropriate thresholds for robust z-scores requires careful consideration of your specific use case and data characteristics. Traditional z-scores often use ±3 as the cutoff, but robust z-scores may call for different thresholds: because outliers do not inflate the median or MAD, genuinely extreme points receive much larger scores than they would under the mean and standard deviation.

A common starting point is to use ±2.5 for moderate outlier detection and ±3.5 for extreme outliers. However, these values should be adjusted based on your domain knowledge and tolerance for false positives versus false negatives. For example, in fraud detection systems, you might want to lower the threshold to ±2.0 to catch more potential anomalies, accepting some false positives in exchange for better security.

To fine-tune your thresholds:

1. Start with a conservative threshold (±3.5)

2. Analyze the distribution of your robust z-scores

3. Calculate the percentage of data points flagged as outliers

4. Adjust based on domain expertise and cost of misclassification
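One way to carry out steps 2 and 3 is to tabulate how much of your data each candidate threshold would flag. The sketch below assumes you already have a list of robust z-scores; the helper names and the sample scores are illustrative.

```python
def flagged_fraction(scores, threshold):
    """Share of scores whose absolute value exceeds the threshold."""
    flagged = sum(1 for z in scores if abs(z) > threshold)
    return flagged / len(scores)


def threshold_report(scores, thresholds=(3.5, 3.0, 2.5, 2.0)):
    """Map each candidate threshold to the fraction of points it flags."""
    return {t: flagged_fraction(scores, t) for t in thresholds}


# Hypothetical robust z-scores from a small validation sample.
sample_scores = [0.1, -0.4, 0.8, -1.2, 2.2, -2.7, 3.1, 0.3, -0.6, 4.0]
report = threshold_report(sample_scores)

for threshold, fraction in report.items():
    print(f"±{threshold}: {fraction:.0%} flagged")
```

Comparing these fractions with the alert volume your team can realistically review gives a starting point for step 4.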

For time-series data, consider using dynamic thresholds that adapt to seasonal patterns. In financial applications, practitioners often use different thresholds for different market conditions, for example adjusting them during periods of high volatility.

Remember that threshold selection is an iterative process. Monitor your system’s performance and adjust thresholds based on feedback and real-world results. Consider using cross-validation techniques to validate your chosen thresholds across different subsets of your data.

Integration with Existing Systems

Integrating robust z-scores into existing AI security systems requires a systematic approach that minimizes disruption while maximizing security benefits. Start by identifying the critical monitoring points in your current system where anomaly detection is essential. These typically include input validation, user behavior analysis, and output verification stages.

Create a parallel processing pipeline that calculates robust z-scores alongside your existing metrics. This allows for comparison and validation before fully transitioning to the new system. Implement a configuration layer that enables easy switching between traditional and robust z-score methods, making it simple to roll back changes if needed.

For real-time systems, optimize the calculation process by maintaining running medians and MAD values in memory, updating them periodically rather than recalculating from scratch. Consider using sliding windows of recent data to maintain computational efficiency while ensuring responsiveness to new threats.
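A simple version of the sliding-window idea might look like the sketch below. The class name `SlidingRobustZ` and its parameters are hypothetical, and for clarity it recomputes the median and MAD from the window on every call; larger windows would call for a sorted structure or periodic refresh as described above.

```python
from collections import deque
from statistics import median


class SlidingRobustZ:
    """Score each new value against a bounded window of recent values."""

    def __init__(self, window_size=500, min_points=30, scale=1.4826):
        self.window = deque(maxlen=window_size)  # oldest values drop out automatically
        self.min_points = min_points
        self.scale = scale

    def update(self, value):
        """Return the score of value against the current window, then store it."""
        score = None
        if len(self.window) >= self.min_points:
            center = median(self.window)
            mad = median(abs(v - center) for v in self.window)
            if mad > 0:
                score = (value - center) / (self.scale * mad)
        self.window.append(value)
        return score


# Hypothetical stream: five warm-up readings, one normal reading, one spike.
monitor = SlidingRobustZ(window_size=50, min_points=5)
stream = [10, 11, 9, 10, 12, 10.5, 100]
scores = [monitor.update(v) for v in stream]
```

The first five calls return `None` while the window fills. Scoring each value before adding it to the window keeps a spike from influencing its own baseline.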

Add logging mechanisms specifically for robust z-score calculations to track their effectiveness in identifying anomalies. This helps in fine-tuning thresholds and comparing detection rates with previous methods. Ensure your monitoring dashboard includes visualizations of both traditional and robust z-scores to help security teams understand and trust the new metrics.

Finally, develop clear documentation and training materials for your team, explaining how robust z-scores complement existing security measures and when they should be preferred over traditional approaches.

Common Challenges and Solutions

Handling High-Dimensional Data

Modern AI and machine learning models often work with data that has thousands or even millions of dimensions. Applying robust z-scores in these high-dimensional spaces requires special consideration and strategic approaches.

One effective strategy is dimension reduction before calculating robust z-scores. Techniques like Principal Component Analysis (PCA) can compress the data while preserving its main patterns. t-SNE is better suited to visual inspection, since it distorts distances between points and does not directly project new data. Reducing dimensions not only makes the calculations more manageable but can also help surface outliers that are hidden in the original high-dimensional space.

Feature grouping is another powerful approach. Instead of treating each dimension independently, related features can be grouped together, and robust z-scores can be calculated for these groups. For example, in an image recognition model, you might group features related to color, texture, and shape separately.
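The sketch below shows one possible way to combine per-feature scoring with grouping in NumPy. The feature matrix, the column-to-group mapping, and the choice to summarize each group by its largest absolute score are all assumptions made for illustration.

```python
import numpy as np


def robust_z_by_feature(X, scale=1.4826):
    """Robust z-scores computed independently for every column of X."""
    X = np.asarray(X, dtype=float)
    center = np.median(X, axis=0)
    mad = np.median(np.abs(X - center), axis=0)
    # Columns with zero MAD get NaN scores instead of a division by zero.
    safe_mad = np.where(mad == 0, np.nan, mad)
    return (X - center) / (scale * safe_mad)


def group_scores(Z, groups):
    """Summarize each feature group by the largest |z| per row."""
    return {name: np.nanmax(np.abs(Z[:, cols]), axis=1) for name, cols in groups.items()}


# Hypothetical features: columns 0-1 describe color, columns 2-3 describe texture.
X = [
    [1.0, 10.0, 0.50, 100.0],
    [1.1, 11.0, 0.60, 101.0],
    [0.9, 9.0, 0.40, 99.0],
    [1.0, 10.5, 0.55, 100.5],
    [1.2, 10.0, 0.45, 150.0],
]
groups = {"color": [0, 1], "texture": [2, 3]}

Z = robust_z_by_feature(X)
grouped = group_scores(Z, groups)
```

In this example the last row stands out in the texture group only. Note that scoring each column separately cannot catch points that are unusual only in combination, which is where dimension reduction or a multivariate method comes in.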

To maintain computational efficiency, consider using batch processing for large datasets. Calculate the median and MAD on smaller batches of data and then combine the per-batch values, for example with weighted averaging. Treat the result as an approximation, because the median of the full dataset is generally not the average of the batch medians. This approach is particularly useful when working with streaming data or when memory constraints are a concern.

Remember to validate your results using cross-validation techniques. High-dimensional data can be deceptive, and what appears to be an outlier in one dimension might be perfectly normal when considered in the context of other dimensions. Using multiple validation sets helps ensure your robust z-score calculations remain reliable across different subsets of your data.

For real-time applications, consider implementing adaptive thresholds that automatically adjust based on the data distribution in different dimensions. This helps maintain the effectiveness of outlier detection as your data evolves over time.

Computational Efficiency

When dealing with large datasets, calculating robust z-scores efficiently becomes crucial for maintaining good system performance. Here are several strategies to optimize your implementations:

First, consider using vectorized operations instead of loops when working with libraries like NumPy or Pandas. Vectorization can significantly speed up calculations, especially for large datasets. For example, you can compute the median absolute deviation (MAD) in a single operation rather than iterating through each value.
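As an illustration, the following NumPy sketch computes the median, the MAD, and an outlier mask without any Python-level loop. The function name, the latency values, and the decision to flag nothing when the MAD is zero are assumptions for the example.

```python
import numpy as np


def robust_outlier_mask(x, threshold=3.5, scale=1.4826):
    """Boolean mask marking values whose |robust z-score| exceeds the threshold."""
    x = np.asarray(x, dtype=float)
    center = np.median(x)
    mad = np.median(np.abs(x - center))  # whole-array operation, no explicit loop
    if mad == 0:
        # Design choice for this sketch: with no spread, flag nothing.
        return np.zeros(x.shape, dtype=bool)
    return np.abs(x - center) / (scale * mad) > threshold


# Hypothetical request latencies in milliseconds.
latencies = [12, 13, 11, 12, 14, 13, 12, 95]
mask = robust_outlier_mask(latencies)
```

Only the final value is flagged here. The same function works unchanged on arrays with millions of elements, since each step operates on the whole array at once.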

Memory management is another key consideration. For extremely large datasets, consider processing data in chunks or batches. This approach helps prevent memory overflow issues while maintaining computational efficiency. You can implement a streaming approach where you calculate running medians and MADs as new data arrives.

Parallel processing can also boost performance. Libraries like Dask or PySpark allow you to distribute calculations across multiple cores or machines. This is particularly useful when processing millions of data points or when real-time scoring is required.

For applications requiring frequent updates, consider an incremental calculation method. Instead of recomputing the median and MAD over the full dataset each time new data arrives, keep the reference values up to date with a bounded window or a streaming estimate, and score only the new points. This approach works well for streaming data applications or online learning systems.

Caching intermediate results, such as the median and MAD values, can also improve performance when multiple calculations use the same reference data. Just remember to invalidate the cache when the underlying data changes significantly.
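One lightweight way to cache these values is to separate fitting from scoring, as in the sketch below. The `RobustZScorer` class is a hypothetical helper, not part of any library; calling `fit` again plays the role of cache invalidation.

```python
from statistics import median


class RobustZScorer:
    """Compute the median and MAD once, then reuse them for many scores."""

    def __init__(self, scale=1.4826):
        self.scale = scale
        self.center = None
        self.mad = None

    def fit(self, reference):
        """Store the median and MAD of the reference data (refit to refresh)."""
        data = list(reference)
        self.center = median(data)
        self.mad = median(abs(v - self.center) for v in data)
        return self

    def score(self, value):
        """Score a single value against the cached reference statistics."""
        if self.mad is None:
            raise RuntimeError("call fit() before score()")
        if self.mad == 0:
            raise ValueError("MAD is zero; scores are undefined for this reference data")
        return (value - self.center) / (self.scale * self.mad)


# Hypothetical reference data: median 12, MAD 1.
scorer = RobustZScorer().fit([10, 12, 11, 13, 12, 11, 12])
typical = scorer.score(12)
unusual = scorer.score(20)
```

Each call to `score` is then a constant-time operation, regardless of how large the reference dataset was.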

Finally, consider using approximate methods for very large-scale applications. While exact calculations are preferred, techniques like reservoir sampling or streaming algorithms can provide good approximations with much lower computational overhead.
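As one example of an approximate method, the sketch below uses reservoir sampling to keep a fixed-size uniform sample of a stream and estimates the median and MAD from that sample. The function names and the sample size are illustrative, and the estimates carry sampling error that shrinks as `k` grows.

```python
import random
from statistics import median


def reservoir_sample(stream, k, seed=None):
    """Keep a uniform random sample of k items from a stream of unknown length."""
    rng = random.Random(seed)
    sample = []
    for i, value in enumerate(stream):
        if i < k:
            sample.append(value)  # fill the reservoir first
        else:
            j = rng.randint(0, i)  # inclusive on both ends
            if j < k:
                sample[j] = value  # replace with probability k / (i + 1)
    return sample


def approximate_center_and_mad(stream, k=1000, seed=None):
    """Estimate the median and MAD of a stream from a reservoir sample."""
    sample = reservoir_sample(stream, k, seed)
    center = median(sample)
    mad = median(abs(v - center) for v in sample)
    return center, mad


# Hypothetical stream: the integers 0..9999, whose true median is 4999.5.
center, mad = approximate_center_and_mad(range(10_000), k=1000, seed=0)
```

Memory use stays fixed at `k` values no matter how long the stream runs, at the cost of some accuracy in the estimated median and MAD.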

Robust z-scores have emerged as a crucial tool in strengthening AI security and model evaluation processes. By providing a more reliable method for detecting outliers and anomalies, they help safeguard AI systems against potential vulnerabilities and data manipulation attempts. The technique’s resistance to extreme values makes it particularly valuable in real-world applications where data quality cannot always be guaranteed.

As AI systems continue to evolve and become more complex, the importance of robust statistical measures will only grow. The ability to accurately identify anomalies while remaining resilient to contaminated data positions robust z-scores as a useful component of future AI security frameworks. This is especially relevant in sensitive applications like fraud detection, network security, and autonomous system monitoring.

Looking ahead, we can expect to see increased integration of robust z-scores in automated AI testing pipelines and security protocols. Their simplicity of implementation, combined with their effectiveness, makes them an attractive choice for organizations seeking to enhance their AI systems’ reliability and security. Additionally, as edge computing and IoT devices become more prevalent, robust z-scores may play a vital role in real-time anomaly detection at the device level.

For practitioners and developers, understanding and implementing robust z-scores represents a fundamental step toward building more secure and reliable AI systems. Whether you’re working on small-scale applications or enterprise-level solutions, incorporating this technique can significantly improve your system’s resilience against outliers and potential security threats.