Track every dataset transformation from raw collection through model deployment by implementing automated logging systems that capture data sources, processing steps, and version changes. When a model produces unexpected results six months after launch, this trail becomes your diagnostic roadmap, revealing exactly which data modifications influenced the outcome.

Establish version control for both code and data by treating datasets as first-class artifacts in your development pipeline. Just as Git tracks code changes, a tool like DVC (Data Version Control) versions your training data, and an experiment tracker like MLflow records which data and parameters went into each run. Together they let you recreate a model version as it existed during development. This practice eliminates the common frustration of unreproducible results when team members ask, “Which dataset did we use for that successful experiment?”

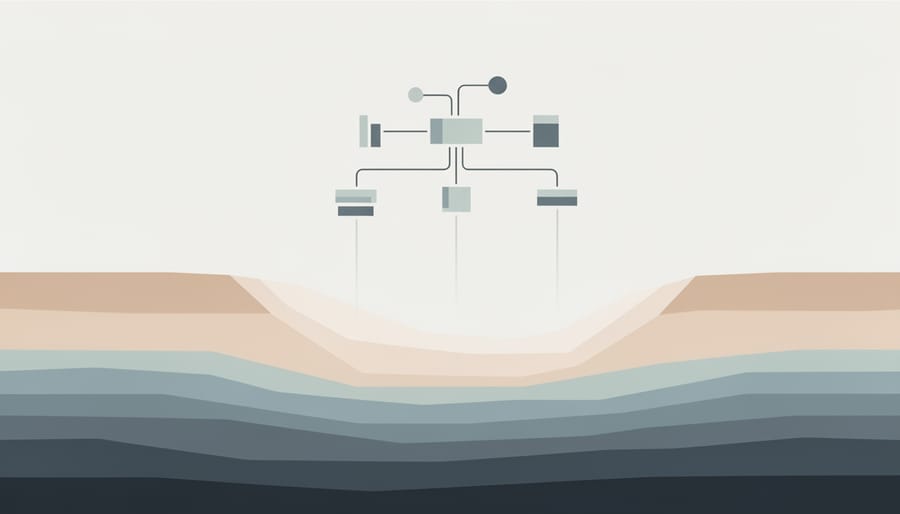

Document data lineage at each lifecycle stage, from initial collection through preprocessing, feature engineering, training, and deployment. Create visual maps showing how raw customer transaction data, for example, flows through cleaning scripts, becomes engineered features, trains multiple model versions, and ultimately powers production predictions. These maps transform abstract data flows into concrete pathways that any team member can understand and audit.
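A lineage map does not have to start as a diagram. As a minimal sketch, the flow described above can be written as a plain dictionary in which each artifact lists what it was derived from. The artifact names and the `upstream` helper are illustrative assumptions, not part of any particular tool.

```python
from collections import deque

# Hypothetical lineage map: each artifact lists the artifacts it was derived from.
LINEAGE = {
    "raw_transactions": [],
    "cleaned_transactions": ["raw_transactions"],
    "engineered_features": ["cleaned_transactions"],
    "model_v1": ["engineered_features"],
    "model_v2": ["engineered_features"],
    "production_predictions": ["model_v2"],
}

def upstream(artifact, lineage):
    """Return every ancestor of `artifact`, nearest first (breadth-first)."""
    seen = []
    queue = deque(lineage.get(artifact, []))
    while queue:
        parent = queue.popleft()
        if parent not in seen:
            seen.append(parent)
            queue.extend(lineage.get(parent, []))
    return seen

print(upstream("production_predictions", LINEAGE))
# ['model_v2', 'engineered_features', 'cleaned_transactions', 'raw_transactions']
```

The same dictionary can later feed a graph-drawing tool, so the audit view and the visual map come from one record.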

Implement governance frameworks that automatically flag compliance issues before they become problems. When working with regulated data like healthcare records or financial information, lineage tracking ensures you can demonstrate to auditors exactly which data contributed to which decisions. This proactive approach prevents the nightmare scenario of discovering mid-audit that you cannot trace how sensitive information moved through your system.

The consequences of neglecting data lineage extend beyond technical inconvenience. Organizations face regulatory penalties, wasted resources chasing phantom bugs, and eroded trust when models fail mysteriously. Understanding how data provenance integrates into the AI development lifecycle transforms these risks into manageable, systematic processes that strengthen every project phase.

What Is Data Lineage and Provenance in AI?

Imagine buying organic vegetables at your local grocery store. The label tells you exactly which farm grew them, when they were harvested, and how they traveled to reach your basket. This traceability—knowing the complete journey from seed to store—is precisely what data lineage and provenance bring to artificial intelligence.

In the AI world, data lineage is the complete roadmap of your data’s journey. It tracks where data originated, every transformation it underwent, which systems processed it, and ultimately how it fed into your AI model. Think of it as a detailed travel diary for each piece of information flowing through your project.

Data provenance goes even deeper. It’s the historical record that documents not just the path, but the context—who created or modified the data, when changes occurred, why transformations happened, and what tools were used. If lineage is the route your data took, provenance is the story behind every stop along that route.

Why does this matter more in AI than traditional data management? The answer lies in AI’s unique complexity. When a traditional database query produces unexpected results, you can usually trace the issue relatively quickly. But when an AI model makes a wrong prediction, the culprit could be buried anywhere in millions of data points processed months ago.

Without proper tracking, you face serious consequences. A batch of systematically mislabeled training images could bias your entire model. A data preprocessing error might go undetected until your model fails in production. Regulatory audits become nightmares when you cannot explain how your model reached specific decisions.

Moreover, AI models are living systems that evolve through retraining. Understanding which version of which dataset produced which model version becomes critical for reproducibility. If your model performed brilliantly last month but poorly today, data lineage helps you identify exactly what changed, enabling you to debug effectively and maintain trust in your AI systems.

The AI Development Lifecycle: Where Your Data Story Begins

From Raw Data to Training Sets

Before your AI model can learn anything, it needs quality data—and getting there involves more steps than most people realize. Data collection begins with gathering information from various sources: customer databases, sensors, public datasets, or web scraping. But raw data is messy. It contains duplicates, missing values, inconsistent formats, and errors that can derail your entire project.

The cleaning phase tackles these issues by removing duplicates, handling missing information, and standardizing formats. Then comes preparation: transforming data into features your model can actually use, splitting it into training and testing sets, and sometimes augmenting it to create more examples.

Here’s where things go wrong without proper tracking. Imagine discovering your model performs poorly in production, but you can’t remember which cleaning rules you applied six months ago. Or worse, you accidentally include test data in your training set because you lost track of how you split the original dataset. One data science team spent weeks debugging a model only to discover someone had accidentally filtered out an entire customer segment during cleaning. Without documentation of each transformation, data preparation becomes a black box. When problems arise, you’re left guessing what went wrong instead of tracing back through your steps to find the exact issue.
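One way to keep a train/test split traceable is to derive it from the record itself rather than from a one-off random shuffle. The sketch below is an assumption about how that might look, not a prescribed method: the `assign_split` name, the `customer-N` IDs, and the 20% test fraction are all hypothetical.

```python
import hashlib

def assign_split(record_id: str, test_fraction: float = 0.2) -> str:
    """Assign a record to 'train' or 'test' from a hash of its ID."""
    digest = hashlib.sha256(record_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # value in [0, 1)
    return "test" if bucket < test_fraction else "train"

ids = [f"customer-{i}" for i in range(1000)]
splits = {rid: assign_split(rid) for rid in ids}

# Re-running gives the same assignment, so the split can be audited later.
assert splits == {rid: assign_split(rid) for rid in ids}

test_count = sum(1 for split in splits.values() if split == "test")
print(test_count, "of", len(ids), "records in test")
```

Because the assignment depends only on the ID and the fraction, anyone can check months later whether a given record belonged to the test set.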

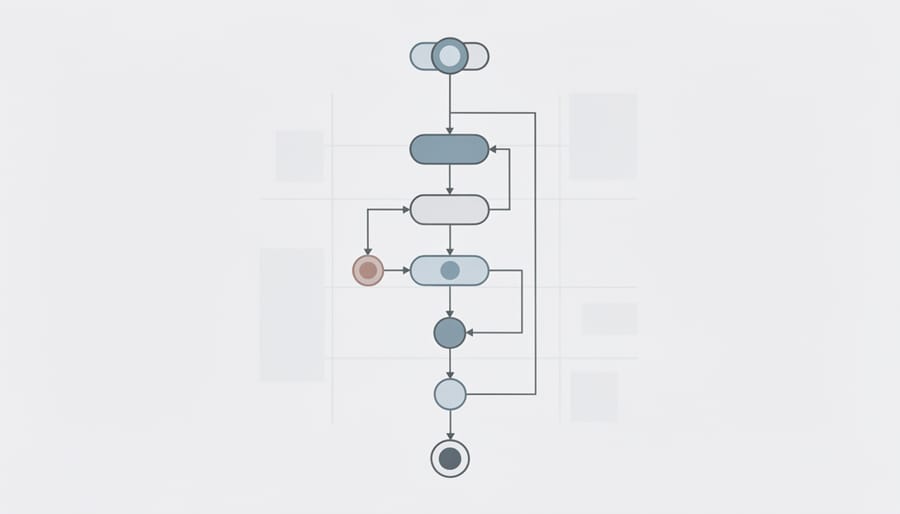

Model Training and Experimentation

Model training is where your AI project transforms from raw ingredients into a finished recipe—but this transformation creates layers of complexity that can haunt you later if left undocumented. During this phase, your data undergoes numerous changes: normalization scales values differently, feature engineering creates new variables, and data augmentation might flip, rotate, or modify images for better learning.

Consider a real example: a healthcare AI team trained a diagnostic model but couldn’t reproduce their results six months later. Why? Three details of their training pipeline had gone undocumented: it shuffled data without a fixed random seed, so the order differed on every run; it applied specific image preprocessing filters; and it split datasets using a date-based method rather than random selection.

Every experiment you run—different hyperparameters, architectures, or data subsets—creates a new branch in your model’s family tree. Without proper tracking, you’ll struggle to answer basic questions: Which data version produced your best model? What preprocessing steps did you apply? How did you handle class imbalances?

This is why documentation during training isn’t optional—it’s your insurance policy. Track data transformations, record which features were selected or dropped, and log the exact dataset versions feeding each training run. Modern experiment tracking tools can automate much of this, capturing everything from data checksums to transformation pipelines, ensuring you can always trace back from model to source.
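A data checksum is the simplest of these signals to produce yourself. The sketch below hashes a file with SHA-256 from Python’s standard library. The temporary CSV is a stand-in for a real training dataset, and the `file_checksum` name is an assumption for illustration.

```python
import hashlib
import os
import tempfile

def file_checksum(path: str, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in chunks so large datasets need little memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

# Demo on a throwaway file standing in for a training dataset.
with tempfile.NamedTemporaryFile("w", suffix=".csv", delete=False) as tmp:
    tmp.write("id,label\n1,cat\n2,dog\n")
    demo_path = tmp.name

before = file_checksum(demo_path)
with open(demo_path, "a") as handle:
    handle.write("3,cat\n")  # any edit to the data changes the checksum
after = file_checksum(demo_path)
os.remove(demo_path)

print(before != after)  # True
```

Storing the checksum next to each training run makes it possible to tell later whether two runs really saw the same data.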

Deployment and Monitoring

Deploying your AI model isn’t the finish line—it’s actually where a new chapter begins. Once in production, your model encounters real-world data that may differ significantly from your training set. User behaviors shift, market conditions change, and data distributions drift over time. This is precisely why data lineage remains essential post-deployment.

Imagine your fraud detection model suddenly starts flagging legitimate transactions. Without lineage tracking, you’re left guessing: Did the input data format change? Are you receiving data from a new source? Has a preprocessing step been altered? With proper lineage documentation, you can quickly trace back through the data pipeline to identify exactly what changed and when.
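The first of those questions, whether the input format changed, can be checked mechanically. As a rough sketch, the code below compares the field names and value types seen in a training batch with those in a live batch. The field names and sample records are made up for illustration.

```python
def describe_schema(records):
    """Map each field name to the set of Python type names observed for it."""
    schema = {}
    for record in records:
        for field, value in record.items():
            schema.setdefault(field, set()).add(type(value).__name__)
    return schema

def schema_changes(expected, observed):
    """List human-readable differences between two schema descriptions."""
    changes = []
    for field in sorted(set(expected) | set(observed)):
        if field not in observed:
            changes.append(f"missing field: {field}")
        elif field not in expected:
            changes.append(f"new field: {field}")
        elif expected[field] != observed[field]:
            changes.append(
                f"type change in {field}: "
                f"{sorted(expected[field])} -> {sorted(observed[field])}"
            )
    return changes

# Hypothetical batches: amounts arrive as strings and a new field appears.
training_batch = [{"amount": 12.5, "country": "DE"}, {"amount": 80.0, "country": "US"}]
live_batch = [{"amount": "12.50", "country": "DE", "channel": "app"}]

changes = schema_changes(describe_schema(training_batch), describe_schema(live_batch))
for change in changes:
    print(change)
# type change in amount: ['float'] -> ['str']
# new field: channel
```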

Continuous monitoring paired with lineage tracking creates a safety net. You’ll spot data quality issues before they cascade into model failures, maintain audit trails for regulatory compliance, and enable rapid debugging when issues arise. Modern monitoring tools can automatically log data transformations and alert you to anomalies, making lineage management an ongoing, streamlined process rather than a manual burden.

The Hidden Costs of Ignoring Data Lineage

When Models Can’t Be Reproduced

Imagine a data science team at a retail company that developed a customer churn prediction model with 92% accuracy. Six months later, when they tried to recreate it for an updated version, they hit a wall. The model’s performance had mysteriously dropped to 78%, and nobody could figure out why.

The problem? They hadn’t tracked their data versions or transformations. The team couldn’t remember which customer dataset they’d used—was it from January or March? Had they filtered out customers with less than three purchases, or was it five? What about those feature engineering steps someone mentioned in a casual Slack message?

Without proper data lineage tracking, they were essentially flying blind. They spent three weeks trying different combinations of data sources and preprocessing steps, burning through budget and missing their deployment deadline. Eventually, they had to start from scratch, treating it as an entirely new project.

This scenario plays out in organizations daily. When you can’t trace back through your data’s journey—from its original source through every cleaning step, transformation, and feature creation—reproducing successful models becomes nearly impossible. It’s like trying to bake the perfect cake without knowing the exact recipe you used last time.

Compliance Nightmares and Audit Failures

Without proper data provenance tracking, organizations face serious regulatory headaches. In healthcare, imagine an AI diagnostic tool making treatment recommendations. When auditors from the FDA arrive asking “what data trained this model?” and you can’t trace the lineage back to specific datasets, patient records, or preprocessing steps, you’re looking at failed compliance and potential legal action.

Financial institutions encounter similar nightmares. Financial regulators expect transparency in AI-driven trading and loan approval systems. If you can’t demonstrate which data influenced a decision to deny someone a mortgage, you risk violating fair lending requirements, which in the United States include giving applicants specific reasons for a denial. One major bank recently faced a $25 million fine partly because their credit risk models lacked adequate documentation of data sources and transformations.

The problem intensifies with regulations like GDPR, which restricts purely automated decisions that significantly affect individuals and entitles them to meaningful information about the logic involved. Without lineage tracking, showing that your model respects these data rights becomes nearly impossible. These compliance failures cost more than money: they can shut down entire AI projects or force organizations to rebuild models from scratch with proper tracking mechanisms in place.

The Debugging Black Hole

Picture this: Your sentiment analysis model suddenly starts flagging positive customer reviews as negative. You dive into debugging mode, but here’s the problem—you don’t know which training dataset was used, whether it was the version before or after cleaning, or if someone applied undocumented preprocessing steps.

This is the debugging black hole that teams fall into without proper data tracking. When a model produces biased predictions or accuracy drops in production, you need to trace back through the data’s entire journey. Was there a labeling error? Did the data distribution shift? Were certain demographic groups underrepresented?

Without data lineage, you’re essentially trying to fix a car engine while blindfolded. You might eventually stumble upon the solution, but you’ll waste countless hours exploring dead ends. Teams report spending weeks chasing phantom bugs, only to discover the issue stemmed from a data transformation applied months earlier that nobody documented. The fix itself might take minutes, but finding it becomes an expensive treasure hunt through undocumented changes and tribal knowledge.

How Data Lineage and Provenance Work at Each Lifecycle Stage

Tracking During Data Collection and Ingestion

When data first enters your AI pipeline, think of it like documenting ingredients entering a restaurant kitchen. You need to know exactly what arrived, when it came in, and where it originated.

Start by capturing basic source information: Where did this data come from? Was it a public dataset, customer submissions, web scraping, or sensor readings? Record the exact URL, API endpoint, or database table. This simple step becomes invaluable when you later discover data quality issues and need to trace them back.

Timestamps are your next essential metadata. Record not just when data arrived, but also when it was originally created or generated. These two timestamps can differ significantly. For example, medical records might be collected in January but document patient visits from the previous year.

Document your collection methods thoroughly. Did you use an automated script, manual upload, or third-party integration? Include version numbers for any collection tools or APIs used. If you’re pulling from a database, log the query parameters and filters applied.

Consider capturing data volume metrics too: how many records, file sizes, and any sampling rates applied. This helps you understand if sudden changes in model performance correlate with shifts in incoming data characteristics. Finally, record who initiated the collection and their authorization level, establishing an audit trail that satisfies both debugging needs and compliance requirements.
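The metadata described in this section fits comfortably in one small record per incoming batch. The following is a minimal sketch under stated assumptions: the field names, the `ingestion_record` helper, the source string, and the sample payload are all hypothetical, and a real project would persist the record rather than print it.

```python
import hashlib
import json
from datetime import datetime, timezone

def ingestion_record(payload, source, method, tool_version, data_created_at,
                     record_count, initiated_by, query=None):
    """Build a metadata record for one batch of incoming data."""
    return {
        "source": source,                      # URL, API endpoint, or table
        "collection_method": method,           # script, manual upload, integration
        "tool_version": tool_version,
        "query": query,                        # filters applied, if any
        "data_created_at": data_created_at,    # when the data was generated
        "ingested_at": datetime.now(timezone.utc).isoformat(),  # when it arrived
        "record_count": record_count,
        "size_bytes": len(payload),
        "sha256": hashlib.sha256(payload).hexdigest(),
        "initiated_by": initiated_by,
    }

# Hypothetical batch: two visit records exported from a database table.
payload = b"visit_id,patient_id,visit_date\n1,77,2023-03-02\n2,78,2023-04-11\n"
record = ingestion_record(
    payload,
    source="records-db.visits",
    method="automated script",
    tool_version="export-script 1.4.0",
    data_created_at="2023-04-11",
    record_count=2,
    initiated_by="data-engineer@example.com",
    query="visit_date >= '2023-01-01'",
)
print(json.dumps(record, indent=2))
```

Keeping `data_created_at` and `ingested_at` as separate fields preserves the distinction between when data was generated and when it arrived.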

Managing Lineage Through Data Transformation

Data transformations are where much of the “magic” happens in AI projects, but they’re also where lineage can easily break down. Every time you clean messy data, engineer new features, or normalize values, you’re creating a new version of your dataset that needs documentation.

Think of it like cooking: if someone asks how you made that delicious dish, you need to recall not just the ingredients but every step you took. Did you sauté the onions first? What temperature? For how long?

Start by documenting your transformation pipeline in code rather than applying manual changes. Tools like Apache Airflow or Prefect let you create workflows where each transformation step is explicitly defined. For simpler projects, even a well-commented Jupyter notebook works, as long as you version it properly.
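Even without an orchestration tool, defining each step as a function makes it possible to record what every step did. The sketch below uses a plain Python decorator for this. It is not how Airflow or Prefect work internally, and the `tracked` decorator, the in-memory `LINEAGE_LOG`, and the sample rows are assumptions for illustration.

```python
import hashlib
import json

LINEAGE_LOG = []  # in-memory for the sketch; a real project would persist this

def fingerprint(rows):
    """Stable short hash of a list of dict rows."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]

def tracked(step):
    """Wrap a transformation so each call records its input and output."""
    def wrapper(rows):
        result = step(rows)
        LINEAGE_LOG.append({
            "step": step.__name__,
            "rows_in": len(rows),
            "rows_out": len(result),
            "input_hash": fingerprint(rows),
            "output_hash": fingerprint(result),
        })
        return result
    return wrapper

@tracked
def drop_duplicates(rows):
    unique = []
    for row in rows:
        if row not in unique:
            unique.append(row)
    return unique

@tracked
def drop_missing_amount(rows):
    return [row for row in rows if row.get("amount") is not None]

raw = [{"id": 1, "amount": 10}, {"id": 1, "amount": 10}, {"id": 2, "amount": None}]
clean = drop_missing_amount(drop_duplicates(raw))

for entry in LINEAGE_LOG:
    print(entry["step"], entry["rows_in"], "->", entry["rows_out"])
# drop_duplicates 3 -> 2
# drop_missing_amount 2 -> 1
```

Because each entry stores both hashes, the output hash of one step should match the input hash of the next, which gives a quick check that no undocumented change happened in between.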

Feature engineering deserves special attention. When you create a new feature by combining existing ones, document the logic clearly. For example, if you create an “engagement_score” by multiplying “time_spent” by “clicks,” record this formula in a central data dictionary.
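One way to stop the documented formula from drifting away from the code is to keep both in the same structure. This is a sketch of that idea using the engagement score above. The `FEATURES` layout and `add_features` helper are hypothetical.

```python
# Hypothetical data dictionary: documentation and computation live side by side.
FEATURES = {
    "engagement_score": {
        "inputs": ["time_spent", "clicks"],
        "formula": "time_spent * clicks",
        "compute": lambda row: row["time_spent"] * row["clicks"],
    },
}

def add_features(row):
    """Return a copy of `row` with every documented feature added."""
    enriched = dict(row)
    for name, spec in FEATURES.items():
        missing = [col for col in spec["inputs"] if col not in row]
        if missing:
            raise KeyError(f"{name} needs missing columns: {missing}")
        enriched[name] = spec["compute"](row)
    return enriched

print(add_features({"time_spent": 4.0, "clicks": 3}))
# {'time_spent': 4.0, 'clicks': 3, 'engagement_score': 12.0}
```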

Tools like DVC (Data Version Control) and MLflow can track preprocessing steps alongside your code once you define those steps as pipeline stages or log them explicitly. The result is a clear audit trail showing how raw data became training-ready data. This becomes invaluable when your model behaves unexpectedly and you need to trace back through every transformation to find the issue.

Provenance in Model Training and Versioning

When you train a machine learning model, you’re essentially creating a snapshot of a specific moment in time—but without proper tracking, that moment becomes impossible to recreate. Provenance in model training connects each trained model back to its exact origins: which version of the dataset was used, what hyperparameters were set, and which experiment configuration produced those results.

Think of it like a recipe card that doesn’t just list ingredients, but specifies exactly where each ingredient came from, the brand of flour used, and even the oven temperature down to the degree. This level of detail becomes critical when your model performs unexpectedly. Did accuracy drop because you changed the learning rate, or because the training data was updated? Without provenance tracking, you’re left guessing.

Experiment tracking tools can capture much of this information for you once they are wired into your training code. A well-instrumented training run logs the dataset version ID, model architecture, hyperparameters like learning rate and batch size, and the random seed used for initialization. This creates a complete audit trail.

The practical benefit? You can reproduce any model exactly, compare experiments side-by-side to understand what changes improved performance, and quickly roll back to previous versions if problems arise. For teams working under regulatory requirements, this documentation proves how models were developed and validates their reliability.
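Side-by-side comparison is straightforward once each run is stored as a flat record. As a small sketch, the function below reports only the fields that differ between two runs. The run records and their values are invented for illustration.

```python
def diff_runs(run_a, run_b):
    """Return {field: (value_in_a, value_in_b)} for every field that differs."""
    keys = sorted(set(run_a) | set(run_b))
    return {
        key: (run_a.get(key), run_b.get(key))
        for key in keys
        if run_a.get(key) != run_b.get(key)
    }

# Hypothetical run records: only the dataset version changed between runs.
last_month = {"dataset_version": "v3", "learning_rate": 0.001, "batch_size": 32, "seed": 42}
today = {"dataset_version": "v4", "learning_rate": 0.001, "batch_size": 32, "seed": 42}

print(diff_runs(last_month, today))
# {'dataset_version': ('v3', 'v4')}
```

Here the diff answers the earlier question directly: the learning rate is unchanged, so the updated training data is the first place to look.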

Maintaining the Trail in Production

Once your AI model is live and making real decisions, the hard work of maintenance begins. Think of it like tending a garden—you can’t just plant and walk away.

Production environments constantly evolve. Your model trained on last quarter’s data might struggle with this quarter’s patterns, a phenomenon called data drift. Imagine a fraud detection system trained before a global pandemic—shopping behaviors changed dramatically overnight, making historical patterns less relevant. Without tracking where predictions come from and what data influenced them, you’re flying blind.

Effective production maintenance requires three core practices. First, continuously monitor incoming data against your training dataset. Set up automated alerts when statistical properties shift beyond acceptable thresholds. Second, maintain detailed logs of every prediction—which model version generated it, what input features were used, and when it occurred. This prediction provenance becomes invaluable when investigating errors or unexpected outcomes.
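The first practice, comparing incoming data against the training set, can start with a very simple statistic. The sketch below measures how far the live mean of each feature has moved from the training mean, in units of the training standard deviation. This is one basic drift signal among many, and the threshold of 2.0 and the sample values are assumptions chosen for illustration.

```python
import statistics

def mean_shift(training_values, live_values):
    """Shift of the live mean from the training mean, in training standard deviations."""
    mu = statistics.fmean(training_values)
    sigma = statistics.stdev(training_values)
    if sigma == 0:
        raise ValueError("training values have no variance")
    return abs(statistics.fmean(live_values) - mu) / sigma

def drift_alerts(training, live, threshold=2.0):
    """Return {feature: shift} for features whose mean moved past the threshold."""
    alerts = {}
    for feature in training:
        shift = mean_shift(training[feature], live[feature])
        if shift > threshold:
            alerts[feature] = round(shift, 2)
    return alerts

# Hypothetical data: transaction amounts jumped, item counts stayed similar.
training = {"amount": [10, 12, 11, 9, 13, 10, 11], "items": [1, 2, 2, 1, 3, 2, 1]}
live = {"amount": [25, 30, 28, 27], "items": [2, 1, 2, 2]}

alerts = drift_alerts(training, live)
print(sorted(alerts))  # ['amount']
```

A mean shift will miss changes in spread or shape, so treat it as a first alarm rather than a complete drift test.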

Finally, establish clear processes for model updates. When you retrain with fresh data, document what changed and why. Create comparison reports showing performance differences between versions. This audit trail proves essential for regulatory compliance and helps your team understand model behavior over time.

The beauty of robust lineage tracking is that debugging becomes detective work rather than guesswork. When something goes wrong, you can trace backwards through the exact data pipeline that produced the problematic prediction, identifying precisely where issues originated.
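Tracing backwards starts with being able to look up a single prediction. The sketch below stores one JSON line per prediction with the model version and input features, then retrieves an entry by ID. The function names, the list used as a log, and the fraud example values are all hypothetical.

```python
import json
from datetime import datetime, timezone

def log_prediction(log, model_version, features, prediction):
    """Append one prediction record to `log` and return its ID."""
    entry = {
        "prediction_id": len(log) + 1,
        "model_version": model_version,
        "features": features,
        "prediction": prediction,
        "logged_at": datetime.now(timezone.utc).isoformat(),
    }
    log.append(json.dumps(entry, sort_keys=True))  # one JSON line per prediction
    return entry["prediction_id"]

def find_prediction(log, prediction_id):
    """Return the logged entry with the given ID, or None if it is absent."""
    for line in log:
        entry = json.loads(line)
        if entry["prediction_id"] == prediction_id:
            return entry
    return None

log = []  # stand-in for an append-only log file
pid = log_prediction(log, "fraud-model-v7", {"amount": 120.0, "country": "DE"}, "flagged")
entry = find_prediction(log, pid)
print(entry["model_version"], entry["features"])
```

With the model version in hand, the training run record and dataset version for that model become the next links in the chain.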

Practical Tools and Approaches for Getting Started

Simple Practices You Can Start Today

You don’t need fancy tools to start tracking your data’s journey today. Begin with a simple practice: create a standardized documentation template for every dataset you use. Include fields like source location, collection date, who processed it, and what transformations were applied. Think of it as a passport for your data—stamping each checkpoint along its journey.

Next, adopt consistent naming conventions for your files and models. Instead of “model_final_v2_REAL.pkl,” use descriptive names like “customer_churn_model_2024-01-15_training-data-v3.pkl.” This simple habit makes it immediately clear what data went into which model version.
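A naming convention holds up better when a helper builds the name than when everyone types it by hand. This is a minimal sketch. The `model_filename` function and its parameters are assumptions, while the output pattern follows the example above.

```python
from datetime import date

def model_filename(task: str, trained_on: date, data_version: str, ext: str = "pkl") -> str:
    """Build a model file name that records the task, training date, and data version."""
    return f"{task}_{trained_on.isoformat()}_training-data-{data_version}.{ext}"

print(model_filename("customer_churn_model", date(2024, 1, 15), "v3"))
# customer_churn_model_2024-01-15_training-data-v3.pkl
```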

For version control, start using basic Git practices even if you’re working alone. Commit your code with meaningful messages that explain what changed and why. For example: “Added data cleaning step to remove duplicate customer records—affects 3% of training set.” These messages become your project’s story, making it easy to trace decisions months later.

Finally, maintain a simple spreadsheet or markdown file that maps your datasets to the models they trained. This low-tech lineage tracker takes minutes to update but saves hours when you need to reproduce results or investigate unexpected model behavior.
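If the tracker lives in a CSV file, a few lines of standard-library Python can append entries and answer the most common question: which models were trained on this dataset version? The column names and sample rows below are assumptions, and the in-memory buffer stands in for a file on disk.

```python
import csv
import io

FIELDS = ["model_file", "dataset", "dataset_version", "trained_on", "notes"]

def append_entry(handle, entry):
    """Append one row to the tracker, writing the header if the file is empty."""
    writer = csv.DictWriter(handle, fieldnames=FIELDS)
    if handle.tell() == 0:
        writer.writeheader()
    writer.writerow(entry)

def models_for_dataset(csv_text, dataset, version):
    """Return the model files trained on a given dataset version."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        row["model_file"]
        for row in reader
        if row["dataset"] == dataset and row["dataset_version"] == version
    ]

tracker = io.StringIO()  # stand-in for a CSV file opened in append mode
append_entry(tracker, {
    "model_file": "customer_churn_model_2024-01-15_training-data-v3.pkl",
    "dataset": "customers", "dataset_version": "v3",
    "trained_on": "2024-01-15", "notes": "removed duplicate customer records",
})
append_entry(tracker, {
    "model_file": "customer_churn_model_2024-03-02_training-data-v4.pkl",
    "dataset": "customers", "dataset_version": "v4",
    "trained_on": "2024-03-02", "notes": "added March data",
})

print(models_for_dataset(tracker.getvalue(), "customers", "v3"))
# ['customer_churn_model_2024-01-15_training-data-v3.pkl']
```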

Popular Tools Worth Exploring

If you’re just starting your journey in AI development, several beginner-friendly tools can help you manage data lineage and model provenance without requiring a PhD in computer science.

MLflow has become a favorite among practitioners for its straightforward approach to tracking experiments. Think of it as a detailed lab notebook for your AI projects. It records the parameters you tweaked and how well your models performed, and it can record which datasets you used when you log them. The best part? It takes just a few lines of code to get started, and you can visualize everything through a clean web interface.

DVC (Data Version Control) works like Git but for your datasets and models. Remember that time you accidentally overwrote an important dataset? DVC prevents those heart-stopping moments by creating snapshots of your data at different stages. It’s particularly useful when you need to trace back exactly which version of your training data produced a specific model.

For those preferring cloud-based solutions, platforms like Amazon SageMaker, Google Vertex AI, and Azure Machine Learning offer lineage or metadata tracking features. These services handle much of the complexity behind the scenes, making them excellent choices if you want to focus on building models rather than infrastructure. They can capture metadata about your data sources, transformations, and model deployments, though how much is recorded depends on how you configure and use them.

The key is starting simple. Pick one tool that matches your current workflow, experiment with its basic features, and gradually expand your usage as you become more comfortable. You don’t need to master everything at once.

Building a Lineage-First AI Development Culture

Transforming your team’s approach to data lineage doesn’t require a complete overhaul—it starts with making tracking feel like a natural extension of daily work rather than an administrative burden.

For small teams (2-5 people), begin with a simple shared practice: document data sources in a standardized README file for each project. Create a lightweight template that captures the essentials—where data came from, who processed it, and when transformations occurred. Think of it as writing a recipe while you cook, not reconstructing it from memory weeks later. Tools like Git for version control and basic spreadsheets can serve you well at this stage without requiring specialized platforms.

Mid-sized teams (6-20 people) benefit from establishing “lineage champions”—team members who advocate for tracking practices and help others integrate them smoothly. Schedule brief weekly check-ins where team members share one challenge they faced with data tracking and one solution they discovered. This peer learning approach makes the practice collaborative rather than prescriptive. Consider adopting lightweight tools like DVC (Data Version Control) or MLflow that integrate with your existing workflows.

For larger organizations, embed lineage practices into your code review process. Just as you wouldn’t approve code without proper comments, don’t approve data pipelines without documented provenance. Create automated checks that flag missing metadata before models move to production.
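An automated check of this kind can be as simple as a script that a review or CI step runs over each pipeline’s metadata. The sketch below assumes every pipeline ships a small manifest dictionary. The required field names and the two example pipelines are hypothetical.

```python
# Hypothetical policy: every pipeline manifest must fill in these fields.
REQUIRED_FIELDS = ("source", "owner", "collected_at", "transformations")

def missing_metadata(manifest):
    """Return the required fields that are absent or empty in a manifest."""
    return [field for field in REQUIRED_FIELDS if not manifest.get(field)]

def check_manifests(manifests):
    """Map pipeline name -> missing fields, for pipelines that fail the check."""
    failures = {}
    for name, manifest in manifests.items():
        missing = missing_metadata(manifest)
        if missing:
            failures[name] = missing
    return failures

manifests = {
    "churn_features": {
        "source": "crm.customers",
        "owner": "analytics-team",
        "collected_at": "2024-01-10",
        "transformations": ["drop_duplicates", "engagement_score"],
    },
    "fraud_features": {
        "source": "payments.transactions",
        "owner": "",
        "transformations": [],
    },
}

failures = check_manifests(manifests)
print(failures)
# {'fraud_features': ['owner', 'collected_at', 'transformations']}
```

A CI job could fail the build whenever `failures` is non-empty, which turns the review rule into something enforced before a model reaches production.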

The mindset shift happens when teams realize that data lineage isn’t extra work—it’s insurance against future headaches. When a model fails in production, having clear lineage means debugging in hours instead of days. When regulators ask questions, documentation exists immediately.

Start small: pick one upcoming project and treat it as a lineage pilot. Document everything meticulously, then reflect on what felt natural versus forced. Adjust your approach based on real experience, not theoretical ideals. Remember, perfect tracking on one project beats inconsistent tracking across ten projects.

Building reliable AI systems isn’t about perfection from day one. It’s about creating the infrastructure that lets you improve, debug, and trust your models over time. Data lineage and provenance management provide exactly that foundation.

Think of it this way: you wouldn’t build a house without blueprints, yet many teams deploy AI models without knowing precisely how their training data was assembled or transformed. The consequences become apparent when something goes wrong. A model starts making poor predictions, compliance officers ask about data sources, or a critical bug surfaces weeks after deployment. Without lineage tracking, you’re reconstructing history from memory rather than consulting the record.

The good news? You don’t need to overhaul your entire workflow overnight. Start with one manageable piece of your AI development lifecycle. Perhaps you begin by simply logging which dataset version trained your current production model. Or you might implement basic transformation tracking in your data preprocessing pipeline. These small steps compound quickly.

Choose the stage where you feel the most pain right now. Is it model debugging? Start tracking feature engineering steps. Is it compliance pressure? Document your data sources first. Every journey toward mature AI operations begins with a single tracked data point.

The question isn’t whether you can afford to implement data lineage. It’s whether you can afford not to. Your future self, debugging a production incident at midnight, will thank you for starting today.