Graphics Processing Units (GPUs) have revolutionized computing, transforming from simple display controllers into powerful parallel processors that drive everything from gaming to artificial intelligence. At their core, GPU architectures represent a masterpiece of engineering that balances raw computational power with efficient data handling and memory management.

Modern GPU architecture combines thousands of specialized processing cores, sophisticated memory hierarchies, and dedicated hardware accelerators to achieve unprecedented performance in parallel computing tasks. Unlike CPUs, which excel at sequential operations, GPUs are designed from the ground up to handle massive amounts of simultaneous calculations – a capability that has become increasingly crucial in today’s data-driven world.

Understanding GPU architecture isn’t just academic – it’s essential for anyone working in machine learning, computer graphics, or high-performance computing. Whether you’re developing the next breakthrough AI application or optimizing graphics for a cutting-edge game engine, knowing how these silicon powerhouses process data can mean the difference between adequate and exceptional performance.

In this comprehensive guide, we’ll decode the intricate design principles that make GPUs the backbone of modern visual computing and AI acceleration, starting with the fundamental building blocks and progressing to advanced architectural features that enable today’s groundbreaking applications.

Why Traditional GPUs Fall Short for AI

Gaming vs. AI Compute Patterns

Gaming and AI workloads use GPUs in fundamentally different ways. While gaming primarily focuses on rendering complex 3D graphics in real-time, AI tasks involve massive parallel computations on simpler data structures.

In gaming, GPUs process varied instructions that change frame by frame, requiring quick shifts between different operations. The workload is graphics-intensive, demanding high memory bandwidth for texture loading and sophisticated shading operations. Gaming GPUs excel at handling dynamic, unpredictable workloads with minimal latency.

In contrast, AI workloads, particularly deep learning, involve repetitive matrix multiplications and similar mathematical operations performed on large datasets. These operations are more predictable and uniform, allowing for optimized execution patterns. AI-focused GPUs prioritize raw computational throughput and can benefit from specialized hardware like tensor cores, which accelerate specific mathematical operations common in machine learning.

Memory access patterns also differ significantly. Gaming requires random access to large texture files, while AI workloads benefit from more structured, sequential memory access. This fundamental difference has led to the development of specialized GPU architectures optimized for each use case, though many modern GPUs successfully handle both types of workloads.

The Memory Bandwidth Challenge

One of the biggest challenges in modern GPU design is managing the massive data requirements of AI workloads. While GPUs can perform countless calculations per second, they often hit a performance wall known as the memory bandwidth bottleneck. This occurs when the GPU can’t fetch data from memory fast enough to keep its processing units busy.

Think of it like a restaurant kitchen where incredibly fast chefs are constantly waiting for ingredients to arrive from a slow delivery system. No matter how quickly they can cook, they’re limited by the speed at which ingredients reach them. This is why advanced memory architectures have become crucial in AI training.

Traditional GPU memory systems weren’t designed with AI workloads in mind, which often require accessing large datasets repeatedly. This has led to innovations like cache hierarchies and high-bandwidth memory (HBM) technologies. However, even these solutions struggle to keep pace with the growing demands of modern AI models, pushing manufacturers to develop increasingly sophisticated memory solutions.
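
One common way to reason about this bottleneck is the roofline model, which caps achievable throughput at the lower of peak compute and memory bandwidth multiplied by arithmetic intensity (FLOPs performed per byte moved). The sketch below is a simplified illustration only: the device figures and the intensity assumed for matrix multiplication are hypothetical, not measurements of any real GPU.

```python
def attainable_flops(peak_flops, mem_bandwidth, arithmetic_intensity):
    """Roofline estimate: throughput is capped by compute or by memory traffic."""
    return min(peak_flops, mem_bandwidth * arithmetic_intensity)

# Hypothetical device: 100 TFLOP/s peak compute, 1 TB/s memory bandwidth.
PEAK = 100e12
BANDWIDTH = 1e12

# Element-wise add of FP32 vectors: 1 FLOP per 12 bytes moved (2 loads + 1 store).
elementwise = attainable_flops(PEAK, BANDWIDTH, 1 / 12)

# Large matrix multiply with good data reuse: assume 200 FLOPs per byte.
matmul = attainable_flops(PEAK, BANDWIDTH, 200)

print(f"element-wise add: {elementwise / 1e12:.3f} TFLOP/s (memory-bound)")
print(f"matrix multiply:  {matmul / 1e12:.3f} TFLOP/s (compute-bound)")
```

Under these assumptions the element-wise operation uses less than 0.1% of the available compute, which is the "waiting chefs" situation described above.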

Core Components of AI-Specific GPUs

Tensor Cores Explained

Tensor Cores represent one of the most significant innovations in modern GPU architecture, designed specifically to accelerate AI and machine learning workloads. Unlike traditional CUDA cores, these specialized AI processors are engineered to perform matrix multiplication and convolution operations at unprecedented speeds.

Think of Tensor Cores as mathematical superheroes that can process multiple calculations simultaneously. Where a regular core might need to solve a complex equation step by step, a Tensor Core can tackle entire matrices of numbers in one swift operation. This is particularly crucial for deep learning tasks, where neural networks require millions of matrix calculations to process data.

To put this in perspective, imagine trying to multiply two 4×4 matrices by hand. It would take 64 multiplications and 48 additions, plus careful attention to detail. A first-generation Tensor Core, introduced with NVIDIA’s Volta architecture, performs a 4×4 matrix multiply-accumulate (64 fused multiply-add operations) every clock cycle, dramatically reducing the time needed for AI model training and inference.
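
To make the size of that operation concrete, here is a minimal Python sketch of a 4×4 matrix multiply-accumulate, D = A × B + C, written as the scalar loop that hardware would otherwise have to step through. It only counts the work involved; the function name `mma_4x4` is made up for illustration, and the code does not model how a Tensor Core is actually implemented.

```python
def mma_4x4(a, b, c):
    """Return D = A x B + C for 4x4 matrices, plus the number of multiply-adds."""
    n = 4
    d = [[c[i][j] for j in range(n)] for i in range(n)]
    fma_count = 0
    for i in range(n):
        for j in range(n):
            for k in range(n):
                d[i][j] += a[i][k] * b[k][j]  # one multiply-add
                fma_count += 1
    return d, fma_count

identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
a = [[float(4 * i + j) for j in range(4)] for i in range(4)]
c = [[1.0] * 4 for _ in range(4)]

d, fma_count = mma_4x4(a, identity, c)
print(fma_count)  # 64
print(d[0])       # [1.0, 2.0, 3.0, 4.0]
```

A scalar core works through those 64 multiply-adds one after another, while a matrix unit is built to issue them together.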

The latest generations of Tensor Cores can handle various precision formats, from TF32 (a reduced-precision stand-in for 32-bit floating-point math) to FP16, BF16, and even INT8, allowing developers to balance accuracy and performance based on their specific needs. This flexibility makes them invaluable for both training complex AI models and running inference in real-world applications.
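
The accuracy side of that trade-off can be illustrated with a small symmetric INT8 quantization sketch. This is one simple scheme among several, the weight values are hypothetical, and real frameworks add calibration and per-channel scaling that are omitted here.

```python
def quantize_int8(values):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    return [round(v / scale) for v in values], scale

def dequantize(q_values, scale):
    """Map quantized integers back to approximate float values."""
    return [q * scale for q in q_values]

weights = [0.82, -0.31, 0.05, -1.27, 0.64]  # hypothetical weights
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(q_weights)
print(f"largest round-trip error: {max_error:.4f}")
```

Each value now occupies one byte instead of four, at the cost of a rounding error of at most half the scale factor.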

For practical applications, this translates to faster image recognition, more responsive natural language processing, and more efficient recommendation systems. Gaming enthusiasts also benefit from Tensor Cores through AI-powered features like DLSS (Deep Learning Super Sampling), which uses AI to upscale gaming graphics with minimal performance impact.

Memory Architecture Innovations

Modern GPUs incorporate innovative memory architectures specifically tailored for AI workloads. At the heart of these advancements is HBM (High Bandwidth Memory), which stacks multiple memory layers vertically to achieve unprecedented data transfer speeds. This design is crucial for AI applications that need to process massive datasets quickly.

Think of GPU memory architecture like a well-organized library where data frequently accessed by AI models is stored in special “express checkout” sections. These sections, known as cache hierarchies, ensure that commonly used information is readily available, significantly reducing the time spent fetching data.

One of the most significant innovations is unified memory, which creates a seamless bridge between CPU and GPU memory. This system automatically manages data movement, making it easier for developers to focus on their AI algorithms rather than manual memory management. It’s similar to having a smart assistant who knows exactly where to find and place every piece of information you need.

Memory compression techniques also play a vital role, using advanced algorithms to store more data in less space without compromising access speed. This is particularly important for large AI models that need to maintain vast amounts of parameters in memory.

Recent GPU designs have introduced smart memory prefetching, which predicts what data an AI model will need next and loads it in advance. This predictive capability, combined with specialized memory controllers optimized for AI data patterns, helps maintain consistent performance during complex calculations.

These memory innovations collectively ensure that AI applications can run efficiently, with minimal latency and maximum throughput, making modern GPUs the powerhouse behind today’s advanced AI systems.

Interconnect Technologies

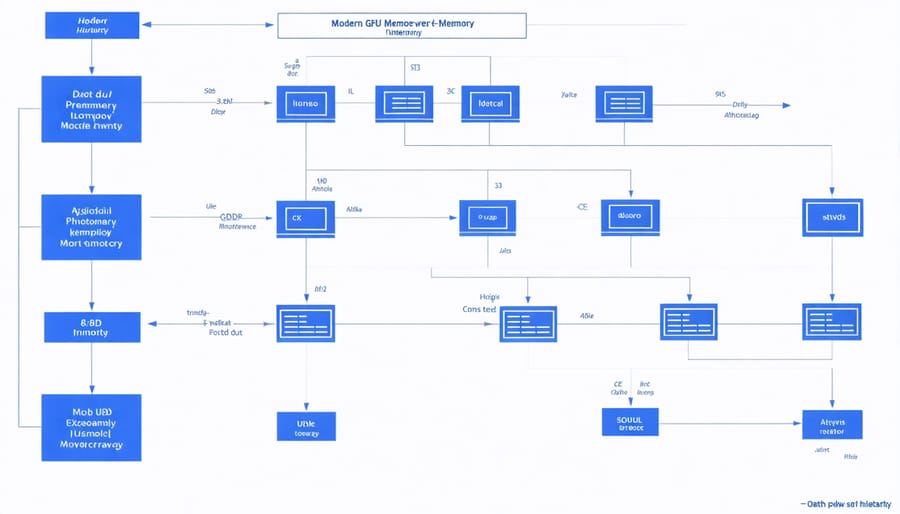

Modern AI GPUs rely on sophisticated interconnect technologies to efficiently move data between their various components. Think of these interconnects as the highway system of the GPU, where data packets are like vehicles traveling between different destinations. The faster and more efficient these highways are, the better the overall performance of the GPU.

At the heart of GPU interconnect technology is the high-bandwidth memory interface, which creates a direct pipeline between processing cores and memory units. This connection is crucial for AI workloads, as neural networks require constant access to large amounts of data. Modern GPUs typically use technologies like HBM2e or GDDR6X, capable of transferring from several hundred gigabytes per second to more than a terabyte per second, depending on the configuration.
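
Peak memory bandwidth follows from two numbers: the width of the memory bus and the data rate of each pin. The sketch below applies that formula to two illustrative configurations. The bus widths and pin rates are assumptions chosen to be in a plausible range, not the specification of any particular product.

```python
def peak_bandwidth_gb_s(bus_width_bits, gbit_per_pin):
    """Peak bandwidth in GB/s = bus width (bits) x per-pin data rate (Gbit/s) / 8."""
    return bus_width_bits * gbit_per_pin / 8

# Illustrative configurations; widths and pin rates are assumptions.
hbm_stack = peak_bandwidth_gb_s(bus_width_bits=1024, gbit_per_pin=3.2)
gddr_card = peak_bandwidth_gb_s(bus_width_bits=384, gbit_per_pin=19.0)

print(f"one HBM stack, 1024-bit at 3.2 Gbit/s: {hbm_stack:.1f} GB/s")
print(f"GDDR card, 384-bit at 19 Gbit/s:       {gddr_card:.1f} GB/s")
```

The comparison shows the two design philosophies: stacked memory relies on a very wide bus at a modest pin speed, while GDDR relies on a narrower bus driven much faster.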

The internal fabric of a GPU contains multiple interconnect layers. The primary layer connects different streaming multiprocessors (SMs) to each other, allowing them to share data and workloads efficiently. A secondary layer handles communication between the SMs and memory controllers, while a third layer manages connections to external interfaces like PCIe.

For multi-GPU setups, technologies like NVIDIA’s NVLink provide high-speed connections between multiple graphics cards. This is particularly important in large-scale AI training scenarios where multiple GPUs need to work together. Third-generation NVLink, for example, provides up to 600 GB/s of total bandwidth per GPU, roughly ten times what a PCIe 4.0 x16 link offers.
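
A back-of-the-envelope calculation shows why link speed matters when GPUs exchange data during training. The sketch below divides a hypothetical payload by each link’s peak bandwidth. The PCIe figure is an assumed peak for a PCIe 4.0 x16 link (both directions combined), and real transfers achieve less than peak on either link.

```python
def transfer_seconds(num_bytes, bandwidth_gb_s):
    """Idealized transfer time: payload size divided by peak link bandwidth."""
    return num_bytes / (bandwidth_gb_s * 1e9)

# Hypothetical payload: gradients of a 1-billion-parameter model in FP16.
payload_bytes = 1_000_000_000 * 2

NVLINK_GB_S = 600.0  # peak figure quoted above
PCIE_GB_S = 64.0     # assumed PCIe 4.0 x16 peak, both directions combined

nvlink_ms = transfer_seconds(payload_bytes, NVLINK_GB_S) * 1e3
pcie_ms = transfer_seconds(payload_bytes, PCIE_GB_S) * 1e3

print(f"NVLink: {nvlink_ms:.2f} ms per exchange")
print(f"PCIe:   {pcie_ms:.2f} ms per exchange")
```

Because an exchange like this happens on every training step, a difference of tens of milliseconds per step adds up quickly over a long run.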

The latest GPU architectures also implement smart routing algorithms that optimize data paths based on workload patterns. These algorithms can predict where data will be needed next and pre-emptively move it to the appropriate location, reducing latency and improving overall processing efficiency.

Cache coherency protocols play a vital role in maintaining data consistency across different GPU components. These protocols ensure that when one part of the GPU updates data, all other parts see the same updated version, preventing errors and inconsistencies in AI computations.

Real-World Performance Gains

Training Speed Improvements

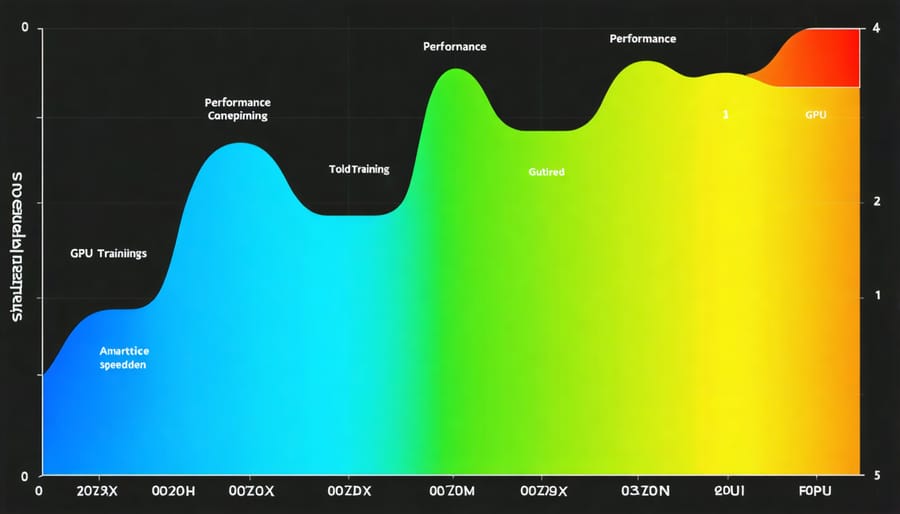

The impact of AI-specific GPU architectures on training speed is nothing short of remarkable. Let’s look at some concrete examples that demonstrate these improvements in real-world scenarios.

Take image classification training on the popular ImageNet dataset. What once took weeks on CPUs can now be completed in just hours using modern AI-optimized GPUs. For instance, training ResNet-50, a common deep learning model, took approximately 14 days on a high-end CPU in 2015. Today, using the latest GPU architecture with Tensor Cores, the same training can be completed in under 2 hours.

These improvements aren’t limited to image processing. Natural language processing has seen similar gains. The BERT language model, which revolutionized text understanding, initially required about 4 days of pretraining on 16 Cloud TPUs, according to its authors. NVIDIA has since reported training BERT-Large in just 47 minutes, although that result used a cluster of 1,472 V100 GPUs rather than a single device.

The speed improvements come from several architectural innovations:

1. Tensor Cores performing multiple calculations simultaneously

2. Optimized memory bandwidth for AI workloads

3. Dedicated hardware for specific AI operations

Real-world benefits extend beyond just faster training times. Companies can now:

– Iterate through different model designs more quickly

– Train on larger datasets

– Deploy updates to production models more frequently

– Reduce energy consumption and computing costs

A practical example from the healthcare sector shows how a medical imaging AI that took 3 weeks to train on traditional hardware now reaches the same accuracy in just 18 hours. This acceleration enables researchers to test more hypotheses and improve their models more rapidly.

For startups and smaller organizations, these improvements mean they can compete with larger players, as they can now train sophisticated AI models without requiring months of compute time or massive server farms. Workloads that once needed a rack of CPU servers can, for many small and mid-sized models, now run on a single AI-optimized GPU.

Inference Optimization

In today’s AI landscape, inference optimization has become crucial for deploying machine learning models efficiently. GPU manufacturers have responded by developing specialized architectures that enhance real-world AI deployment, making models faster and more energy-efficient.

These optimized architectures incorporate dedicated tensor cores and intelligent memory management systems specifically designed for AI workloads. On the software side, NVIDIA provides TensorRT, an SDK that optimizes neural networks for inference by fusing layers and selecting the fastest kernels for the target GPU. NVIDIA has claimed up to 40x faster performance compared to CPU-only platforms.
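
The idea behind combining layers can be shown with a toy example: a linear layer followed by a per-output scale and shift (as batch normalization behaves at inference time) can be folded into a single linear layer with adjusted parameters. This is a general illustration of layer fusion, not a description of what TensorRT does internally, and all values are made up.

```python
def linear(x, weights, bias):
    """y[i] = sum_j weights[i][j] * x[j] + bias[i]"""
    return [sum(w * xj for w, xj in zip(row, x)) + b for row, b in zip(weights, bias)]

def scale_shift(y, gamma, beta):
    """Per-output affine step, e.g. batch normalization at inference time."""
    return [g * yi + b for g, yi, b in zip(gamma, y, beta)]

def fuse(weights, bias, gamma, beta):
    """Fold the affine step into the linear layer's parameters."""
    fused_w = [[g * w for w in row] for row, g in zip(weights, gamma)]
    fused_b = [g * b + bt for g, b, bt in zip(gamma, bias, beta)]
    return fused_w, fused_b

weights = [[0.5, -1.0], [2.0, 0.25]]
bias = [0.1, -0.2]
gamma = [2.0, 0.5]
beta = [1.0, 3.0]
x = [4.0, 8.0]

two_passes = scale_shift(linear(x, weights, bias), gamma, beta)
fused_w, fused_b = fuse(weights, bias, gamma, beta)
one_pass = linear(x, fused_w, fused_b)

print(two_passes)
print(one_pass)
```

Both paths produce the same result up to floating-point rounding, but the fused version reads and writes the intermediate activations once instead of twice.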

One key innovation is the introduction of mixed-precision computing, which allows GPUs to perform calculations using both 16-bit and 32-bit floating-point numbers. This approach significantly reduces memory bandwidth requirements while maintaining accuracy, enabling faster inference times for deep learning models.
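
A small experiment shows why mixed precision keeps a wider format for accumulation. The sketch below sums the same FP16 inputs twice, once rounding the running total to FP16 and once rounding it to FP32, using Python’s `struct` module to emulate each format. It is a CPU-side illustration of the numerical effect only and does not model GPU hardware.

```python
import struct

def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_fp32(x):
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def accumulate(values, round_fn):
    """Sum values, rounding the running total after every addition."""
    total = 0.0
    for v in values:
        total = round_fn(total + v)
    return total

# 10,000 small FP16 inputs whose true sum is close to 1.0.
inputs = [to_fp16(0.0001)] * 10_000

fp16_total = accumulate(inputs, to_fp16)
fp32_total = accumulate(inputs, to_fp32)

print(f"FP16 accumulator: {fp16_total:.4f}")
print(f"FP32 accumulator: {fp32_total:.4f}")
```

The FP16 total stalls well short of 1.0 because, once the running sum is large enough, each new input is smaller than half the spacing between adjacent FP16 values and rounds away. Storing inputs in FP16 while accumulating in FP32 keeps the bandwidth savings without that loss.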

Hardware vendors have also implemented specialized features like dynamic voltage and frequency scaling (DVFS), which automatically adjusts power consumption based on workload demands. This adaptation ensures optimal performance while minimizing energy usage, making AI deployment more cost-effective in production environments.
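
The payoff from DVFS comes from the standard approximation for dynamic power in CMOS circuits, P ≈ C × V² × f, where lowering the clock frequency usually allows a lower voltage as well. The sketch below uses placeholder values for capacitance and for both operating points, so the absolute wattages are not meaningful, only the ratio.

```python
def dynamic_power_watts(capacitance_f, voltage_v, frequency_hz):
    """Classic CMOS approximation: P_dynamic ~ C * V^2 * f."""
    return capacitance_f * voltage_v ** 2 * frequency_hz

# Hypothetical operating points; the capacitance value is a placeholder.
C_EFF = 1e-7  # effective switched capacitance in farads (assumed)
full_speed = dynamic_power_watts(C_EFF, voltage_v=1.0, frequency_hz=2.0e9)
scaled_down = dynamic_power_watts(C_EFF, voltage_v=0.8, frequency_hz=1.5e9)

savings = 1 - scaled_down / full_speed
print(f"full speed:  {full_speed:.0f} W")
print(f"scaled down: {scaled_down:.0f} W ({savings:.0%} less power)")
```

In this example a 25% drop in clock speed, paired with a 20% drop in voltage, cuts dynamic power by about half, which is why scaling down during light inference loads is worthwhile.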

For applications requiring custom solutions, FPGA acceleration offers an alternative approach, providing flexibility for specific use cases. However, modern GPUs remain the preferred choice for most inference tasks due to their superior performance-to-cost ratio and extensive software ecosystem support.

Recent developments in GPU architecture also include dedicated hardware blocks for specific AI operations, such as matrix multiplication and convolution. These purpose-built components accelerate common deep learning operations, reducing latency and improving throughput for real-time applications like computer vision and natural language processing.

The future of inference optimization lies in the convergence of hardware and software solutions, with GPU manufacturers continuously developing new architectures that better serve the evolving needs of AI applications while maintaining backward compatibility with existing frameworks and tools.

Future Architectural Trends

Next-Generation Features

The future of GPU architecture is rapidly evolving, with several groundbreaking innovations on the horizon. One of the most exciting developments is the shift towards neuromorphic (brain-inspired) computing architectures, which promise to change how GPUs process AI workloads. These neural-inspired designs aim to enable more efficient parallel processing and reduced power consumption.

Chiplet technology is gaining momentum, allowing manufacturers to combine multiple smaller dies instead of producing single large chips. This approach not only improves manufacturing yields but also enables more flexible configurations and better thermal management. We’re also seeing the emergence of advanced memory architectures, including HBM3E and future variants, which will significantly boost bandwidth and reduce latency.
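
The yield argument for chiplets can be sketched with the simple Poisson yield model, in which the probability that a die has no defects is exp(−area × defect density). The defect density and die areas below are assumptions for illustration, and the model ignores packaging and die-to-die interconnect costs.

```python
import math

def die_yield(area_cm2, defects_per_cm2):
    """Poisson yield model: probability that a die has zero defects."""
    return math.exp(-area_cm2 * defects_per_cm2)

DEFECT_DENSITY = 0.1  # defects per cm^2 (assumed for illustration)

monolithic = die_yield(area_cm2=8.0, defects_per_cm2=DEFECT_DENSITY)
chiplet = die_yield(area_cm2=2.0, defects_per_cm2=DEFECT_DENSITY)

print(f"one 8 cm^2 die:   {monolithic:.1%} of dies are defect-free")
print(f"each 2 cm^2 die:  {chiplet:.1%} of dies are defect-free")
```

Because each chiplet can be tested before packaging, a defect costs only one small die rather than the whole large chip, so far less silicon is discarded.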

Ray tracing capabilities are being enhanced with dedicated hardware units that will make real-time path tracing more accessible, benefiting both gaming and AI rendering applications. Additionally, specialized AI accelerators are being integrated directly into GPU cores, creating hybrid architectures that can handle both traditional graphics and machine learning workloads more efficiently.

Variable rate processing is evolving to become more granular, allowing GPUs to allocate resources more intelligently based on workload demands. This advancement, combined with improved power management features, will lead to more efficient processing and better performance per watt ratios.

Industry Developments

Major GPU manufacturers are constantly innovating to meet the growing demands of AI and machine learning workloads. NVIDIA, the market leader, has evolved its architecture from Pascal through Volta, Turing, and Ampere to the more recent Hopper (data center) and Ada Lovelace (consumer) generations, each iteration bringing significant improvements in AI processing capabilities. These advancements include enhanced tensor cores, higher memory bandwidth, and more efficient power management systems.

AMD has responded with its RDNA and CDNA architectures, specifically designing the latter for data center and AI applications. Their latest innovations focus on matrix multiplication engines and improved memory hierarchies to better handle deep learning tasks. Intel has also entered the AI GPU space with its Arc graphics cards and its Ponte Vecchio data center GPU, offering new approaches to AI-specific hardware solutions.

A notable trend is the shift toward specialized processing units within GPUs. Manufacturers are incorporating dedicated matrix (tensor) units, ray-tracing cores, and other AI accelerators alongside traditional shader cores, which NVIDIA calls CUDA cores. This hybrid approach allows GPUs to efficiently handle diverse workloads while maintaining their primary advantage in parallel processing.

The industry is also seeing increased focus on power efficiency and scalability, with new designs emphasizing better performance per watt and improved interconnect technologies for multi-GPU configurations. These developments are crucial as AI models continue to grow in size and complexity.

Conclusion

Understanding GPU architecture is essential in today’s technology-driven world, particularly as AI and machine learning continue to shape our digital landscape. Throughout this guide, we’ve explored how modern GPUs transform complex calculations into efficient parallel processes, making them indispensable for everything from gaming to deep learning applications.

Key takeaways include the fundamental role of CUDA cores in parallel processing, the importance of memory hierarchy in maintaining fast data access, and how specialized tensor cores accelerate AI workloads. We’ve seen how the interplay between these components creates a powerful ecosystem for handling intensive computational tasks.

For those looking to leverage GPU technology, remember that choosing the right GPU depends on your specific needs. Gaming enthusiasts might focus on rasterization capabilities, while AI developers should prioritize tensor core count and memory bandwidth. Understanding these architectural elements helps make informed decisions about hardware investments.

As GPU technology continues to evolve, we’re seeing increasingly specialized architectures designed for specific workloads. Whether you’re a developer, content creator, or technology enthusiast, staying informed about these advances will help you maximize the potential of your GPU-accelerated applications.

Remember that while GPU architecture may seem complex, its fundamental purpose remains simple: to process massive amounts of data in parallel, enabling the sophisticated applications that power our modern digital experiences.