Imagine standing at the cusp of what may be the most significant technological revolution since the internet. Generative AI has erupted onto the global stage, transforming how we create, work, and interact with technology. From DALL-E’s artistic creations to ChatGPT’s human-like conversations, these AI systems aren’t just processing information; they’re generating entirely new content that can rival human work.

In just the past few years, generative AI has evolved from an experimental technology into a force that’s reshaping industries, from healthcare to creative arts. Unlike traditional AI systems that simply analyze data, generative models can create everything from computer code and marketing copy to photorealistic images and symphonies. This leap from analytical to creative AI represents a fundamental shift in how machines augment human capabilities.

The impact is staggering: businesses are reinventing their workflows, artists are discovering new forms of expression, and researchers are unlocking possibilities that seemed like science fiction just decades ago. As we witness this technological renaissance, one thing becomes clear: generative AI isn’t just another innovation. It’s the beginning of a new era in which the boundaries between human and machine creativity increasingly blur.

This revolution isn’t just changing what computers can do; it’s fundamentally altering what it means to be creative in the digital age.

The Foundation Years: 1950s-1960s

Logic Theorist and Early Pattern Recognition

The roots of today’s generative AI revolution can be traced back to 1956, when the Logic Theorist, often described as the first AI program, was deliberately designed to mimic human problem-solving skills. Created by Allen Newell, Herbert A. Simon, and Cliff Shaw, this groundbreaking system proved theorems from Whitehead and Russell’s Principia Mathematica and laid the groundwork for how machines manipulate logical relationships.

Around the same time, researchers developed early pattern recognition systems that could identify simple shapes and characters. These initial attempts at machine perception, while primitive by today’s standards, introduced principles that modern neural networks still use, such as weighted connections and learning from examples.

The Logic Theorist’s approach of breaking complex problems into smaller, manageable subgoals influenced much of the AI research that followed. Its success helped inspire more sophisticated programs in the late 1950s and 1960s, including the General Problem Solver (GPS), built by the same team, and ELIZA, which demonstrated basic natural language processing capabilities.

These early innovations established critical concepts that contemporary generative AI builds upon: the ability to analyze patterns, make logical connections, and produce new outputs from rules or learned relationships. While today’s systems are vastly more powerful and work very differently under the hood, they still echo the ideas of problem decomposition and pattern recognition first explored by these pioneering programs.

ELIZA: The First Chatbot Pioneer

In 1966, MIT computer scientist Joseph Weizenbaum published ELIZA, a groundbreaking program that changed how people thought about human-computer interaction. Named after Eliza Doolittle from George Bernard Shaw’s “Pygmalion,” ELIZA ran conversation scripts; its best-known script, DOCTOR, simulated a Rogerian psychotherapist using pattern matching and simple response templates.

What made ELIZA remarkable was its ability to engage in seemingly meaningful conversations through natural language processing, despite having no true understanding of the dialogue. The program worked by recognizing keywords in user inputs and responding with pre-programmed patterns, often reflecting questions back to the user in a therapy-like manner.
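The keyword-and-reflection technique described above can be sketched in a few lines of Python. This is a minimal illustration, not a reconstruction of Weizenbaum’s original program: the `RULES` table, the `reflect` and `respond` helpers, and the response templates are all hypothetical examples written to imitate the therapist style.

```python
import re

# Swap first- and second-person words so the user's phrase can be echoed back.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my", "are": "am",
}

# Hypothetical keyword rules: (pattern, response template), tried in order.
# "{0}" is filled with the reflected text captured by the pattern.
RULES = [
    (re.compile(r"\bi need (.*)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.*)", re.IGNORECASE), "Tell me more about your {0}."),
    (re.compile(r"\bmother\b|\bfather\b", re.IGNORECASE), "Tell me more about your family."),
]

DEFAULT_RESPONSE = "Please go on."


def reflect(fragment: str) -> str:
    """Replace each pronoun in the fragment with its reflected counterpart."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(user_input: str) -> str:
    """Return the response for the first rule whose keyword pattern matches."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            captured = [reflect(group) for group in match.groups()]
            return template.format(*captured)
    # No keyword found: fall back to a neutral prompt, as ELIZA often did.
    return DEFAULT_RESPONSE


if __name__ == "__main__":
    print(respond("I am worried about my exams."))
    print(respond("The weather is nice"))
```

Running the script prints `How long have you been worried about your exams?` followed by the fallback `Please go on.` Nothing in the code models meaning; the apparent empathy comes entirely from string substitution, which is exactly what made the ELIZA effect so striking.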

Perhaps most fascinating was how users formed emotional connections with ELIZA, even when they knew they were talking to a computer program. This phenomenon, later termed the “ELIZA effect,” demonstrated how humans could attribute understanding and empathy to artificial conversations.

ELIZA’s influence on modern chatbots is profound, though largely conceptual. Today’s AI language models work in a fundamentally different way, generating responses from patterns learned over vast amounts of text rather than from hand-written keyword scripts. Still, from customer service chatbots to AI assistants like ChatGPT, these modern tools carry forward ELIZA’s legacy of making human-computer interaction more natural and accessible.

The AI Winter Survivors: 1970s-1980s

Expert Systems Revolution

Expert systems marked a significant milestone in AI development during the 1970s and 1980s, representing one of the first practical applications of artificial intelligence in real-world decision-making. These systems used if-then rules and knowledge bases to mimic human expert reasoning in specific domains, from medical diagnosis to equipment maintenance.
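The if-then style of reasoning can be illustrated with a tiny forward-chaining engine, which repeatedly fires any rule whose conditions are all satisfied until no new facts appear. This is a hypothetical sketch: the equipment-maintenance rules and fact names are made up for illustration and are not taken from MYCIN or XCON, which used far larger rule bases (MYCIN, for instance, reasoned backward from goals and attached certainty factors to its conclusions).

```python
from typing import FrozenSet, List, Set, Tuple

# A rule is (conditions that must all hold, conclusion to add).
Rule = Tuple[FrozenSet[str], str]

# Hypothetical toy knowledge base for diagnosing a car that will not start.
RULES: List[Rule] = [
    (frozenset({"engine_wont_start", "lights_dim"}), "battery_weak"),
    (frozenset({"battery_weak", "battery_over_5_years"}), "recommend_replace_battery"),
    (frozenset({"engine_wont_start", "fuel_gauge_empty"}), "recommend_refuel"),
]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Fire rules until no rule adds a new fact; return all known facts."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when every one of its conditions is already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    observed = {"engine_wont_start", "lights_dim", "battery_over_5_years"}
    print(sorted(forward_chain(observed, RULES)))
```

The first rule’s conclusion (`battery_weak`) becomes a condition for the second, so the engine reaches a recommendation in a chain of steps. Every one of those rules had to be written by hand, which is precisely the maintenance burden that limited real expert systems.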

XCON (originally called R1), developed at Carnegie Mellon University for Digital Equipment Corporation starting in 1978, became one of the first commercially successful expert systems, configuring customers’ computer orders and saving the company millions of dollars annually. Similarly, MYCIN, created at Stanford University in the 1970s, demonstrated how AI could assist doctors in diagnosing bacterial infections and recommending antibiotics.

However, these early systems faced limitations. They relied heavily on manually coded rules and couldn’t learn from new data or adapt to changing situations. Each rule had to be explicitly programmed, making maintenance complex and scaling difficult. Despite these challenges, expert systems laid crucial groundwork for modern AI by establishing frameworks for knowledge representation and automated reasoning.

The transition from rule-based systems to modern machine learning approaches happened gradually through the 1990s and 2000s. Instead of following pre-programmed rules, newer systems could learn patterns from data, leading to more flexible and adaptive decision-making capabilities. This evolution ultimately contributed to the development of today’s generative AI models, which can not only make decisions but also create new content based on learned patterns.

The legacy of expert systems continues to influence modern AI architecture, particularly in areas where explicit reasoning and decision transparency are crucial, such as healthcare and financial systems.

Neural Networks Resurgence

The resurgence of neural networks marks a pivotal moment in artificial intelligence history: a once-dismissed concept became the backbone of today’s generative AI revolution. Although neural networks were first conceptualized in the 1940s, they had limited practical success until the 1980s, when backpropagation, popularized by David Rumelhart, Geoffrey Hinton, and Ronald Williams in 1986, emerged as an effective way to train networks with multiple layers.
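Backpropagation is the chain rule applied layer by layer: the error at the output is passed backward to obtain the gradient of the loss with respect to every weight. The minimal sketch below assumes a hypothetical two-weight network (one sigmoid hidden unit feeding a linear output, with squared-error loss), computes its gradients by hand, checks them against finite differences, and then uses them for plain gradient descent.

```python
import math
from typing import Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def loss(w1: float, w2: float, x: float, target: float) -> float:
    """Forward pass: hidden = sigmoid(w1 * x), output = w2 * hidden, squared error."""
    hidden = sigmoid(w1 * x)
    output = w2 * hidden
    return 0.5 * (output - target) ** 2


def backprop(w1: float, w2: float, x: float, target: float) -> Tuple[float, float]:
    """Return (dL/dw1, dL/dw2) by applying the chain rule from the output backward."""
    # Forward pass, keeping intermediate values for the backward pass.
    hidden = sigmoid(w1 * x)
    output = w2 * hidden
    # Backward pass: propagate the error signal one layer at a time.
    d_output = output - target                # dL/d(output)
    d_w2 = d_output * hidden                  # d(output)/d(w2) = hidden
    d_hidden = d_output * w2                  # d(output)/d(hidden) = w2
    d_z = d_hidden * hidden * (1.0 - hidden)  # sigmoid'(z) = s * (1 - s)
    d_w1 = d_z * x                            # dz/dw1 = x
    return d_w1, d_w2


def numerical_grad(w1: float, w2: float, x: float, target: float,
                   eps: float = 1e-6) -> Tuple[float, float]:
    """Central finite differences, used only to check the backprop gradients."""
    g1 = (loss(w1 + eps, w2, x, target) - loss(w1 - eps, w2, x, target)) / (2 * eps)
    g2 = (loss(w1, w2 + eps, x, target) - loss(w1, w2 - eps, x, target)) / (2 * eps)
    return g1, g2


def train(w1: float, w2: float, x: float, target: float,
          lr: float = 0.1, steps: int = 200) -> Tuple[float, float]:
    """Plain gradient descent using the backpropagated gradients."""
    for _ in range(steps):
        g1, g2 = backprop(w1, w2, x, target)
        w1 -= lr * g1
        w2 -= lr * g2
    return w1, w2


if __name__ == "__main__":
    print("backprop:", backprop(0.5, -1.2, 2.0, 1.0))
    print("numeric: ", numerical_grad(0.5, -1.2, 2.0, 1.0))
    w1, w2 = train(0.5, -1.2, 2.0, 1.0)
    print("loss before:", loss(0.5, -1.2, 2.0, 1.0), "after:", loss(w1, w2, 2.0, 1.0))
```

Real networks have millions or billions of weights, but the principle is the same: one backward sweep yields every gradient at roughly the cost of a forward pass, which is what made training multi-layer networks feasible.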

The next game-changing development came with Geoffrey Hinton’s work on deep learning in the mid-2000s. His research showed that networks with many layers of artificial neurons could be trained effectively, with successive layers learning increasingly complex patterns, laying the groundwork for the modern neural network architectures that power today’s generative models.

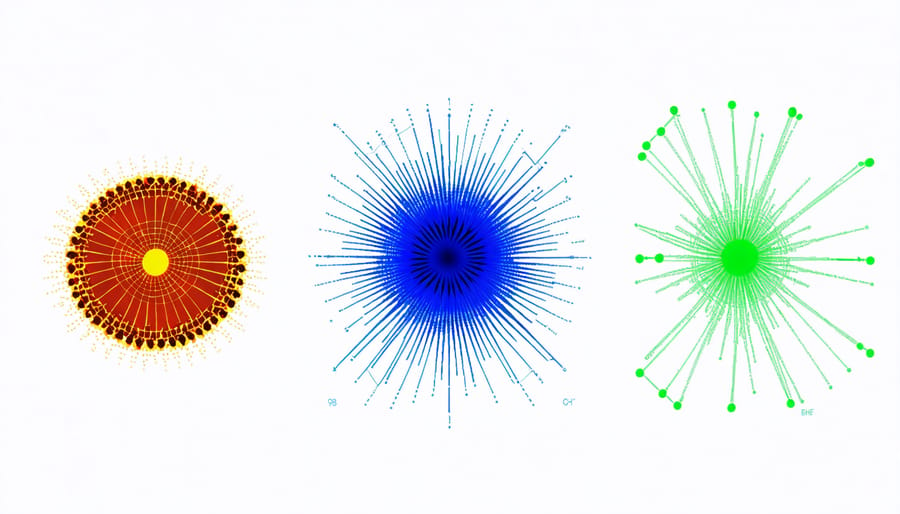

Three key factors catalyzed this renaissance: the explosion of available data, significant improvements in computing power (particularly GPUs), and refined training algorithms. These elements combined to overcome the historical limitations that had previously held neural networks back.

The introduction of convolutional neural networks (CNNs) revolutionized image processing, while recurrent neural networks (RNNs) and later transformer architectures dramatically improved natural language processing. These advances set the stage for sophisticated generative models like GANs (Generative Adversarial Networks) and modern language models.

Today’s neural networks can process and generate content with unprecedented sophistication, learning patterns from vast datasets to create everything from photorealistic images to coherent text. This foundation continues to evolve, pushing the boundaries of what’s possible in generative AI.

The Digital Renaissance: 1990s-2010s

Deep Learning Foundations

The foundation of today’s generative AI revolution lies in the remarkable evolution of deep learning algorithms over the past decade. These sophisticated neural networks draw inspiration from the human brain’s structure, using multiple layers of interconnected nodes to process and learn from vast amounts of data.

Key breakthroughs in neural network architectures, particularly the development of transformers and attention mechanisms, have revolutionized how AI systems understand and generate content. These innovations allow machines to capture complex patterns and relationships within data, enabling them to create everything from realistic images to coherent text.
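At the heart of the transformer is scaled dot-product attention, softmax(Q Kᵀ / √d_k) V, where Q, K, and V are the query, key, and value matrices and d_k is the key dimension. Each position scores every other position by comparing its query with their keys, then takes a weighted average of their values. The pure-Python sketch below shows a single attention head on tiny hand-picked matrices; real models learn the projections that produce Q, K, and V, use many heads, and rely on optimized tensor libraries.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, one row at a time."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


if __name__ == "__main__":
    Q = [[1.0, 0.0], [0.0, 1.0]]
    K = [[1.0, 0.0], [0.0, 1.0]]
    V = [[10.0, 0.0], [0.0, 10.0]]
    for row in attention(Q, K, V):
        print([round(x, 3) for x in row])
```

Each query attends most strongly to the key it matches, so each output row leans toward the corresponding value vector. Because every row is computed independently, all positions can be processed in parallel, which is a key reason transformers map so well onto GPUs.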

The rise of more powerful computing hardware, especially Graphics Processing Units (GPUs), has been crucial in making deep learning practical. These processors can perform thousands of calculations in parallel, making it possible to train increasingly complex models on massive datasets.

Another vital development has been the emergence of transfer learning, where models pre-trained on large datasets can be fine-tuned for specific tasks. This approach has dramatically reduced the resources needed to create effective AI systems, democratizing access to advanced AI capabilities.

Together, these foundational elements have created a perfect storm of technological capability, leading to the current explosion in generative AI applications that can create, modify, and enhance content in ways previously thought impossible.

Data Processing Innovations

The exponential growth in computing power and sophisticated data handling capabilities has been instrumental in bringing generative AI to life. Moore’s Law, the observation that the number of transistors on a chip doubles roughly every two years, held for decades and steadily increased available processing power, enabling AI systems to handle increasingly complex tasks. By the 2010s, Graphics Processing Units (GPUs), originally built for rendering graphics, along with specialized AI processors, had revolutionized how machines could process vast amounts of data simultaneously.
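To make the doubling concrete, here is a rough worked example that assumes an idealized, perfectly regular doubling every two years, with N_0 the starting transistor count and t the elapsed time in years (real progress was never this smooth):

$$
N(t) = N_0 \cdot 2^{t/2}, \qquad \frac{N(20)}{N_0} = 2^{20/2} = 2^{10} = 1024
$$

So two decades of steady doubling corresponds to roughly a thousandfold increase.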

Cloud computing platforms transformed data storage and processing, making it possible to train AI models on unprecedented amounts of information. What once required massive dedicated server farms became accessible through scalable cloud resources, democratizing AI development. The introduction of distributed computing frameworks like Apache Hadoop and Spark enabled efficient processing of big data, essential for training sophisticated AI models.
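Hadoop popularized the MapReduce programming model, which Spark also supports and extends: a map step emits key-value pairs from each chunk of data independently, a shuffle groups those pairs by key, and a reduce step combines each group. The single-process Python sketch below imitates those three phases on a word count. It does not use either framework’s API, and in a real cluster each phase would be spread across many machines.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(document: str) -> Iterator[Tuple[str, int]]:
    """Emit (word, 1) for every word; each document can be mapped independently."""
    for word in document.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group emitted values by key, as the framework does between map and reduce."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: Dict[str, List[int]]) -> Dict[str, int]:
    """Combine each key's values; for a word count, sum them."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(documents: List[str]) -> Dict[str, int]:
    # In a cluster, these map calls would run in parallel on different machines.
    mapped = (pair for doc in documents for pair in map_phase(doc))
    return reduce_phase(shuffle_phase(mapped))


if __name__ == "__main__":
    print(word_count(["the cat sat", "the dog sat", "The end"]))
```

Because the map and reduce functions never share state, the framework is free to split the data across machines and rerun failed pieces, which is what made this model practical for the enormous text collections later used to train AI models.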

Data preprocessing techniques also evolved significantly. Advanced methods for cleaning, normalizing, and augmenting data improved the quality of training datasets. The development of efficient data pipelines and automated preprocessing tools streamlined the handling of diverse data types – from text and images to audio and video.
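Normalization is one of the simpler preprocessing steps to show concretely. The sketch below applies z-score standardization, rescaling a feature to zero mean and unit standard deviation so that features measured on very different scales contribute comparably during training. The example values are made up, and production pipelines would typically rely on a library utility (for example, scikit-learn’s `StandardScaler`) rather than hand-written code.

```python
import math
from typing import List


def standardize(values: List[float]) -> List[float]:
    """Return (x - mean) / std for each value, using the population standard deviation."""
    if not values:
        return []
    mean = sum(values) / len(values)
    variance = sum((x - mean) ** 2 for x in values) / len(values)
    std = math.sqrt(variance)
    if std == 0:
        # A constant feature carries no information; map it to all zeros.
        return [0.0 for _ in values]
    return [(x - mean) / std for x in values]


if __name__ == "__main__":
    # Hypothetical feature: image widths in pixels.
    widths = [640.0, 800.0, 1024.0, 1920.0]
    print([round(z, 3) for z in standardize(widths)])
```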

Perhaps most critically, breakthrough architectures like transformers changed how AI systems process sequential data. Because transformers handle every position in a sequence in parallel rather than step by step, they make far better use of modern GPUs than earlier recurrent networks. Combined with improved data compression techniques and optimized training algorithms, this efficiency made it practical to train the large language models that power today’s generative AI applications, even though those models consume far more total computation than any of their predecessors.

Bridge to Modern AI: Late 20th Century

Pattern Recognition Breakthroughs

Pattern recognition emerged as a cornerstone of modern AI image generation through several groundbreaking developments. In the late 1950s, Frank Rosenblatt’s perceptron became one of the first machines to learn pattern recognition from examples, laying the groundwork for neural networks. It could learn to identify simple shapes and patterns, though with limited accuracy.
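The perceptron’s learning rule is simple enough to write out in full: predict 1 if the weighted sum of the inputs plus a bias is positive, and whenever a prediction is wrong, nudge each weight by the learning rate times the error times the corresponding input. The Python sketch below trains it on the logical AND function, a linearly separable toy problem chosen for illustration; Rosenblatt’s original Mark I Perceptron was custom hardware rather than a program like this.

```python
from typing import List, Tuple

Sample = Tuple[List[float], int]


def predict(weights: List[float], bias: float, inputs: List[float]) -> int:
    """Step activation: output 1 if the weighted sum plus bias is positive."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples: List[Sample], lr: float = 1.0,
                     epochs: int = 20) -> Tuple[List[float], float]:
    """Run up to `epochs` passes, stopping early once every sample is correct."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for inputs, label in samples:
            error = label - predict(weights, bias, inputs)
            if error != 0:
                # Shift the decision boundary toward the misclassified sample.
                weights = [w + lr * error * x for w, x in zip(weights, inputs)]
                bias += lr * error
                errors += 1
        if errors == 0:
            break
    return weights, bias


if __name__ == "__main__":
    AND = [([0.0, 0.0], 0), ([0.0, 1.0], 0), ([1.0, 0.0], 0), ([1.0, 1.0], 1)]
    w, b = train_perceptron(AND)
    print("weights:", w, "bias:", b)
    print([predict(w, b, x) for x, _ in AND])
```

Because AND is linearly separable, the perceptron convergence theorem guarantees that this loop eventually finds a separating line. For a problem like XOR, which no single straight line can separate, a lone perceptron never converges, a limitation Marvin Minsky and Seymour Papert famously analyzed in 1969.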

The 1980s brought significant advances that led to Convolutional Neural Networks (CNNs). Building on Kunihiko Fukushima’s neocognitron (1980), Yann LeCun and his colleagues developed CNNs trained with backpropagation in the late 1980s, revolutionizing how machines process visual information with an architecture inspired by the visual cortex. These networks excel at identifying patterns in images by scanning them with small filters that detect local features, such as edges, and combining those features layer by layer into larger shapes.
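The core operation of a CNN is the convolution: a small filter (kernel) slides across the image, and at each position the output is the sum of element-wise products between the kernel and the patch beneath it. The sketch below computes a "valid" 2D convolution (no padding, stride 1) with a hand-picked vertical-edge kernel on a tiny made-up image. Strictly speaking it is cross-correlation, which is what most deep learning libraries implement under the name convolution; in a trained CNN the kernel values are learned rather than chosen by hand.

```python
from typing import List

Grid = List[List[float]]


def conv2d_valid(image: Grid, kernel: Grid) -> Grid:
    """Slide the kernel over the image without padding, one pixel at a time."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Sum of element-wise products between the kernel and the patch at (i, j).
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output


if __name__ == "__main__":
    # Hypothetical 4x4 grayscale image: dark left half, bright right half.
    image = [
        [0, 0, 9, 9],
        [0, 0, 9, 9],
        [0, 0, 9, 9],
        [0, 0, 9, 9],
    ]
    # Responds strongly where brightness increases from left to right.
    vertical_edge = [
        [-1, 1],
        [-1, 1],
    ]
    for row in conv2d_valid(image, vertical_edge):
        print(row)
```

Each output row is `[0.0, 18.0, 0.0]`: the filter responds only at the column where dark meets bright, which is the kind of local feature that deeper CNN layers combine into larger shapes.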

A major breakthrough came in 2012, when AlexNet, created by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton and trained on GPUs, won the ImageNet Large Scale Visual Recognition Challenge with a top-5 error rate of about 15 percent, far ahead of the runner-up at about 26 percent. This success sparked renewed interest in deep learning approaches to pattern recognition. The system’s ability to identify complex patterns in massive datasets became fundamental to modern image generation techniques.

These historical developments directly influenced today’s generative AI systems, particularly in how they analyze and understand visual patterns. Contemporary tools like DALL-E and Midjourney build upon these pattern recognition principles, enabling them to generate highly realistic and creative images from text descriptions.

Natural Language Processing Evolution

Natural Language Processing (NLP) has undergone a remarkable transformation since its inception in the 1950s. Early systems relied on rule-based approaches, where programmers manually coded grammar and vocabulary rules. This method, while groundbreaking for its time, proved limited in handling the complexity and nuances of human language.

The 1980s and 1990s saw a shift toward statistical methods, where computers began learning language patterns from large text databases. This evolution laid the groundwork for modern language models, but the real breakthrough came with the application of neural networks and deep learning to language in the 2000s and 2010s.
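A bigram model is about the simplest example of learning language patterns statistically: count how often each word follows another in a corpus, then turn those counts into conditional probabilities P(next word | previous word). The toy corpus below is made up; statistical systems of that era were trained on far larger text collections and used smoothing to handle word pairs never seen in training, which this sketch omits.

```python
from collections import Counter, defaultdict
from typing import Dict, List

Model = Dict[str, Dict[str, float]]


def train_bigrams(sentences: List[str]) -> Model:
    """Estimate P(next word | previous word) from raw bigram counts."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in sentences:
        # "<s>" and "</s>" mark the start and end of each sentence.
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    # Normalize each row of counts into a probability distribution.
    return {
        prev: {nxt: c / sum(followers.values()) for nxt, c in followers.items()}
        for prev, followers in counts.items()
    }


def most_likely_next(model: Model, word: str) -> str:
    """Return the most probable follower of `word`, or the end marker if unseen."""
    followers = model.get(word.lower(), {})
    return max(followers, key=followers.get) if followers else "</s>"


if __name__ == "__main__":
    model = train_bigrams(["the cat sat", "the cat ran", "the dog sat"])
    print(model["the"])
    print(most_likely_next(model, "the"))
```

Modern language models predict the next token too, but they condition on far more context than a single previous word and learn the distribution with neural networks rather than by counting.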

A pivotal moment arrived with word embeddings like Word2Vec in 2013, which represent each word as a vector so that words used in similar contexts end up close together. The transformer architecture, introduced in 2017, then revolutionized NLP by using attention to process all the words in a sequence in parallel, rather than one word at a time.
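Word embeddings are compared geometrically, most often with cosine similarity: the dot product of two vectors divided by the product of their lengths, which measures the angle between them. The three-dimensional vectors below are invented purely for illustration; real Word2Vec embeddings are learned from large corpora and typically have a few hundred dimensions.

```python
import math
from typing import Dict, List

# Hypothetical hand-made embeddings; real ones are learned, not written by hand.
EMBEDDINGS: Dict[str, List[float]] = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Dot product divided by the product of the vector lengths (ranges from -1 to 1)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    print("king/queen:", round(cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["queen"]), 3))
    print("king/apple:", round(cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["apple"]), 3))
```

With these made-up vectors, "king" scores much closer to "queen" than to "apple", mirroring how learned embeddings place words that appear in similar contexts near one another.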

This technological progression culminated in powerful language models such as GPT (Generative Pre-trained Transformer), which generates human-like text, and BERT, which is designed to understand words in context for tasks such as classification and question answering. Models like these perform a wide range of language tasks with unprecedented accuracy, and their advances have made possible the current wave of generative AI tools that can write, translate, and even engage in natural conversations with users.

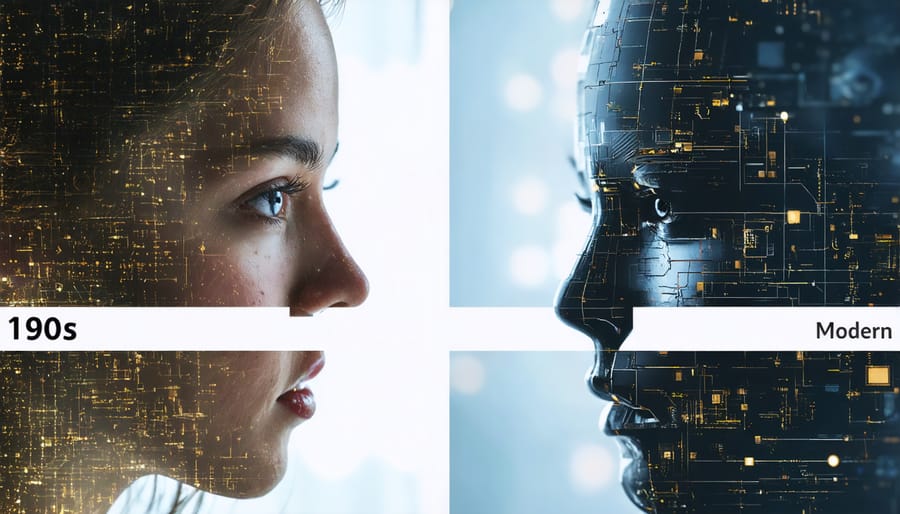

As we reflect on the journey from early computing to today’s generative AI revolution, we can see how each breakthrough has built upon previous innovations. The pattern-recognition capabilities developed in the 1950s laid the groundwork for modern machine learning. Neural networks, initially conceptualized decades ago, have evolved into the sophisticated transformers powering ChatGPT and DALL-E.

What makes the current generative AI revolution particularly remarkable is how it combines and enhances these historical developments. Today’s systems can create, iterate, and even understand context in ways that would have seemed like science fiction to early AI pioneers. They’ve transformed from simple pattern-matching tools into creative partners capable of generating human-like text, realistic images, and even complex code.

Looking ahead, the possibilities are both exciting and challenging. As generative AI continues to evolve, we’re likely to see even more sophisticated applications across industries – from personalized education to advanced scientific research. The foundation built by decades of AI development suggests we’re just beginning to unlock the potential of these technologies.

The key lesson from this historical perspective is clear: innovation in AI is cumulative and accelerating. Each breakthrough opens new doors, and the pace of development shows no signs of slowing. As we continue this journey, our responsibility is to shape these powerful tools in ways that benefit society while addressing the ethical considerations they raise.