No, current AI systems like ChatGPT, Midjourney, and the machine learning models powering today’s technology do not use quantum computing. They run entirely on classical computers—the same type of hardware in your laptop or smartphone, just scaled up massively in data centers.

This misconception arises because both artificial intelligence and quantum computing dominate tech headlines, often mentioned in the same breath as revolutionary technologies. When people see news about quantum breakthroughs alongside AI achievements, it’s natural to assume they’re working together. They aren’t, at least not yet.

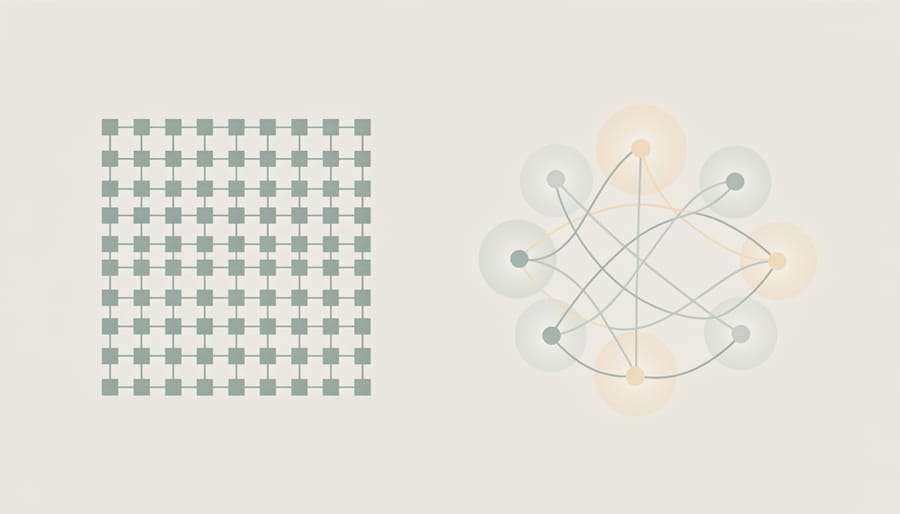

Understanding the distinction matters because it clarifies what’s happening now versus what might happen in the future. Today’s AI revolution—from language models to image generators—relies on conventional processors and graphics cards running billions of calculations through neural networks. These systems have achieved remarkable results using classical computing power alone, trained on enormous datasets through methods developed over decades.

Quantum computing, meanwhile, remains largely experimental. While quantum computers exist in research labs and specialized facilities, they’re nowhere near ready to replace or enhance the AI tools you use daily. The technology operates on fundamentally different principles, using quantum mechanics to process information in ways classical computers cannot.

The real question isn’t whether AI currently uses quantum computing, but whether these technologies might converge in meaningful ways. Researchers are exploring this frontier, investigating whether quantum computers could eventually accelerate certain AI tasks or unlock entirely new capabilities. That potential relationship, however, belongs firmly in the realm of future possibilities rather than present reality.

What Actually Powers Today’s AI Systems

The Hardware Behind Modern Machine Learning

Today’s artificial intelligence runs on powerful but fundamentally classical computing hardware. The workhorses of modern machine learning are Graphics Processing Units (GPUs), Tensor Processing Units (TPUs), and traditional Central Processing Units (CPUs), each playing a distinct role in bringing AI to life.

GPUs, originally designed to render video game graphics, have become the gold standard for training AI models. Their architecture allows them to perform thousands of calculations simultaneously, making them perfect for the matrix operations that neural networks require. When you interact with ChatGPT or see AI-generated images, GPUs likely processed those tasks.

TPUs represent Google’s answer to AI-specific needs. These custom chips are built specifically for machine learning operations, offering even faster processing for certain AI workloads. They power many of Google’s AI services, from search improvements to language translation.

CPUs, the general-purpose processors in every computer, handle the orchestration work. They manage data flow, coordinate between different hardware components, and run smaller AI models efficiently.

These classical processors have proven remarkably capable. They’ve enabled breakthrough applications in image recognition, natural language processing, autonomous vehicles, and medical diagnosis. Their parallel processing capabilities and continuous improvements in efficiency mean they handle current AI demands effectively. The question isn’t whether they work, but rather whether quantum computing might eventually enhance specific AI tasks beyond what classical hardware can achieve.

Why Classical Computing Still Works Fine

Think of AI as a highly efficient accountant working through millions of spreadsheets. It’s doing a lot of calculations, but they’re straightforward number-crunching tasks that regular computers handle exceptionally well. When ChatGPT generates a response or when Netflix recommends your next binge-worthy show, these systems are performing what we call “classical computation”—the same fundamental operations your laptop executes, just at a much larger scale.

Current AI applications rely on pattern recognition and statistical analysis. Imagine teaching a child to recognize dogs by showing them thousands of photos. Classical computers excel at this repetitive learning process, systematically adjusting weights and parameters until patterns emerge. They process these tasks sequentially or in parallel using graphics processing units (GPUs), which are essentially specialized chips designed for handling multiple similar calculations simultaneously.
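To make “systematically adjusting weights” concrete, here is a minimal sketch of the arithmetic involved: a one-feature logistic regression trained by plain gradient descent in pure Python. The toy data, learning rate, and epoch count are illustrative assumptions. Real models run the same kind of multiply-and-adjust loop over billions of weights, on GPUs rather than in a Python loop.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(data, lr=0.5, epochs=2000):
    """Fit weight w and bias b to (x, label) pairs with plain gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = sigmoid(w * x + b) - y  # gradient of log loss w.r.t. the model's raw score
            grad_w += err * x
            grad_b += err
        # Nudge both parameters a small step against the average gradient
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Hypothetical toy data: the label is 1 when the single feature x is "large"
data = [(0.5, 0), (1.0, 0), (1.5, 0), (3.0, 1), (3.5, 1), (4.0, 1)]
w, b = train_logistic(data)
predictions = [1 if sigmoid(w * x + b) > 0.5 else 0 for x, _ in data]
print(predictions)  # [0, 0, 0, 1, 1, 1]
```

Nothing here needs quantum mechanics: it is repeated, ordinary arithmetic, which is exactly what classical hardware is good at.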

The reason quantum computing isn’t necessary for today’s AI is simple: we’re not facing the specific types of problems quantum computers solve best. Classical computers may take time and consume significant energy, but they reliably deliver results for tasks like language processing, image recognition, and predictive analytics without needing quantum mechanics’ strange properties.

Understanding Quantum Computing in 5 Minutes

How Quantum Computers Think Differently

To understand why quantum computers are fundamentally different, imagine you’re trying to find a specific book in a massive library with no catalog. A classical computer would search one aisle at a time, checking each shelf methodically. A quantum computer doesn’t literally check every aisle at once, but for some search problems it can use quantum effects to home in on the right shelf in far fewer steps, roughly the square root of the number a classical search would need.
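The standard quantum search method is Grover’s algorithm. The sketch below simulates it on an ordinary computer for a hypothetical library of 8 books (3 qubits), tracking one number, called an amplitude, per book; the squared amplitude is the chance of finding that book when you look. Each round flips the sign of the target’s amplitude and then reflects every amplitude about the average, which steadily shifts probability toward the target. This is a classical simulation for illustration only and runs no faster than a plain search.

```python
import math


def grover_search(n_items: int, target: int):
    """Classically simulate Grover's search over n_items entries; return rounds used and final probabilities."""
    amps = [1 / math.sqrt(n_items)] * n_items               # equal superposition over all items
    rounds = math.floor(math.pi / 4 * math.sqrt(n_items))   # near-optimal number of rounds
    for _ in range(rounds):
        amps[target] = -amps[target]                        # "oracle": mark the target by flipping its sign
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]                 # "diffusion": reflect every amplitude about the mean
    return rounds, [a * a for a in amps]


rounds, probs = grover_search(8, target=5)
print(rounds)              # 2
print(round(probs[5], 3))  # 0.945
```

The round count grows with the square root of the number of items: for a million books it would be 785 rounds, versus about 500,000 checks on average for a shelf-by-shelf search.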

This magical-sounding ability rests on two quantum principles, and both start with qubits, the quantum version of traditional bits. While a regular bit is like a light switch that’s either on (1) or off (0), a qubit is more like a coin spinning in the air: until you catch it and look, it is in a blend of both states at once. This first principle is called superposition.

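Think of it this way: three regular bits can hold one number at a time from 0 to 7, but three qubits in superposition can carry all eight possibilities at once. With just 300 qubits, the number of possible states (2^300, roughly 10^90) exceeds the estimated number of atoms in the observable universe, which explains the mind-boggling potential power. There is a catch: measuring the qubits returns only one of those possibilities, so quantum algorithms must be cleverly designed to make the useful answer the likely one.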
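To see how the count of possibilities grows, here is a minimal pure-Python sketch that stores a qubit register the way a classical simulator would: as a list of 2^n amplitudes, where each squared amplitude is the probability of that outcome. It also shows that a measurement returns just one outcome, chosen at random with those probabilities.

```python
import math
import random


def uniform_superposition(n_qubits: int):
    """State vector of n qubits in equal superposition: 2**n amplitudes, all equal."""
    dim = 2 ** n_qubits
    return [1 / math.sqrt(dim)] * dim


def measure(state, rng):
    """Simulate a measurement: return ONE basis state, chosen with probability |amplitude|**2."""
    probs = [abs(a) ** 2 for a in state]
    return rng.choices(range(len(state)), weights=probs, k=1)[0]


state = uniform_superposition(3)
print(len(state))                              # 8 amplitudes for 3 qubits
print([round(abs(a) ** 2, 3) for a in state])  # each outcome has probability 0.125

rng = random.Random(42)
print(format(measure(state, rng), "03b"))      # a single 3-bit result; which one depends on the seed

# The number of amplitudes doubles with every added qubit
print(f"{2 ** 300:.2e}")                       # about 2.04e+90 for 300 qubits
```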

The second principle, entanglement, is even stranger. When qubits become entangled, they can no longer be described independently: measuring one tells you about the others, regardless of distance. Imagine having two magic dice that always land on matching faces, even when rolled on opposite sides of a room. The match is a correlation rather than a message (entanglement cannot be used to send signals), but these correlations follow patterns no classical system can reproduce, and they allow quantum computers to process relationships between data in ways classical computers cannot.
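The matching-dice behavior can be reproduced in a small classical simulation of the textbook entangled pair, the Bell state, built by applying a Hadamard gate and then a CNOT gate to two qubits that start at 00. In the pure-Python sketch below the two-qubit state is stored as four amplitudes for 00, 01, 10, and 11; sampling it 1,000 times only ever yields 00 or 11. It shows the perfect agreement in one measurement setting only; the genuinely non-classical part, which appears when the qubits are measured in different bases (Bell tests), is beyond this toy.

```python
import math
import random
from collections import Counter


def hadamard_on_first(state):
    """Apply a Hadamard gate to qubit 0 of a 2-qubit state [a00, a01, a10, a11]."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def cnot_first_controls_second(state):
    """Flip qubit 1 whenever qubit 0 is 1, i.e. swap the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


# Start in |00>; H followed by CNOT gives the Bell state (|00> + |11>) / sqrt(2)
bell = cnot_first_controls_second(hadamard_on_first([1.0, 0.0, 0.0, 0.0]))

labels = ["00", "01", "10", "11"]
probs = [a * a for a in bell]
rng = random.Random(7)
counts = Counter(rng.choices(labels, weights=probs, k=1000))
print(counts)  # only '00' and '11' appear, roughly 500 times each
```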

These properties mean quantum computers don’t simply run the same tasks faster. They work differently, opening possibilities for solving certain problems that would take classical computers millennia to crack.

What Quantum Computers Are Actually Good At

Quantum computers show promise in specific scenarios that classical computers struggle with. Think of them as specialized tools rather than all-purpose machines. One candidate area is optimization, where you need to find the best solution among countless possibilities. Imagine planning the most efficient delivery route for thousands of packages across a city: every added stop multiplies the number of possible routes.
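The route-planning example can be made concrete with a little counting. For n stops with a fixed starting point, there are (n - 1)!/2 distinct round trips (halving because the same loop can be driven in either direction). The brute-force sketch below checks every ordering, which works for a handful of stops and becomes hopeless long before thousands. Real logistics software uses clever classical heuristics instead, and quantum computers are not known to solve such problems exactly and efficiently either. The stop coordinates are hypothetical.

```python
import itertools
import math


def count_round_trips(n_stops: int) -> int:
    """Distinct round trips through n_stops (n_stops >= 3), fixed start, direction ignored."""
    return math.factorial(n_stops - 1) // 2


def shortest_round_trip(points):
    """Brute force: try every ordering of the stops after the first and keep the shortest loop."""
    start, *rest = points
    best_len, best_route = math.inf, None
    for perm in itertools.permutations(rest):
        route = [start, *perm, start]
        length = sum(math.dist(a, b) for a, b in zip(route, route[1:]))
        if length < best_len:
            best_len, best_route = length, route
    return best_len, best_route


for n in (5, 10, 20):
    print(n, count_round_trips(n))  # 12, then 181440, then 60822550204416000

# Hypothetical stops at the corners of a unit square: the best loop is the perimeter
best_len, best_route = shortest_round_trip([(0, 0), (1, 0), (1, 1), (0, 1)])
print(best_len, best_route)
```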

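They also show strong potential for simulating molecular behavior and chemical reactions. Modeling exactly how certain molecules interact can take classical computers impractically long, because the calculations grow exponentially with the size of the system. A sufficiently large quantum computer could handle such simulations far more efficiently, because it operates on quantum principles similar to the molecules themselves.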
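One way to see why molecules are hard for classical machines: an exact, brute-force classical simulation of n quantum two-level systems (for example, molecular orbitals each mapped to a qubit) has to store 2^n amplitudes. The sketch below assumes 16 bytes per amplitude (one double-precision complex number) and prints the memory needed. Practical chemistry software relies on approximations to avoid this blow-up, so read these figures as the cost of the naive approach, not of every method.

```python
def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory to store every amplitude of an n-qubit state (16 bytes = one complex double, an assumption)."""
    return (2 ** n_qubits) * bytes_per_amplitude


def human_readable(n_bytes: int) -> str:
    """Format a byte count using binary units (KiB = 1024 bytes, and so on)."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = float(n_bytes)
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value:.0f} {unit}"
        value /= 1024


for n in (10, 30, 40, 50):
    print(n, "qubits:", human_readable(state_vector_bytes(n)))  # 16 KiB, 16 GiB, 16 TiB, 16 PiB
```

Every ten extra qubits multiply the memory by about a thousand, which is why exact classical simulation stalls at a few dozen qubits.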

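In theory, a large, error-corrected quantum computer running Shor’s algorithm could also factor large numbers efficiently, which has major implications for cryptography and security, since widely used encryption schemes such as RSA rely on factoring being hard. Another quantum algorithm, Grover’s algorithm, can search unsorted data in fewer steps than any classical method, though the speedup is quadratic rather than exponential.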
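Shor’s algorithm turns factoring into finding the period of the sequence a, a^2, a^3, … taken modulo N. Only that period-finding step needs a quantum computer; the rest is ordinary arithmetic. The sketch below does every step classically, finding the period by brute force, which is exactly the part that becomes infeasible for the enormous numbers used in cryptography. The example numbers (15 with a = 7, and 21 with a = 2) are small illustrative choices.

```python
import math


def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).
    Brute force here; this is the step Shor's algorithm performs on a quantum computer."""
    x, r = a % n, 1
    while x != 1:
        x = (x * a) % n
        r += 1
    return r


def factor_with_period(n: int, a: int):
    """Classical post-processing from Shor's algorithm; returns two factors or None (try another a)."""
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g          # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None               # odd period: pick a different a
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None               # a**(r/2) = -1 mod n gives only trivial factors
    p = math.gcd(y - 1, n)
    return p, n // p


print(find_period(7, 15))         # 4, since 7**4 = 2401 = 160 * 15 + 1
print(factor_with_period(15, 7))  # (3, 5)
print(factor_with_period(21, 2))  # (7, 3)
```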

The key pattern here? Quantum computers target problems involving enormous spaces of possibilities or quantum phenomena. They’re not designed for everyday tasks like browsing the web or editing documents. Instead, they address highly specific computational challenges, some of which overlap with complex AI and machine learning tasks, creating intriguing possibilities for the future.

Where AI and Quantum Computing Could Meet

Quantum Machine Learning: The Research Frontier

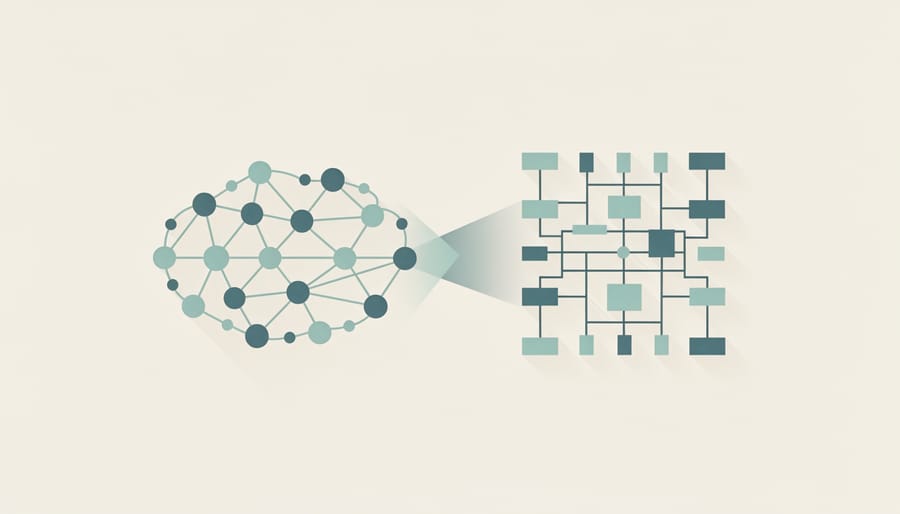

Right now, researchers worldwide are exploring an exciting question: can quantum computers make AI smarter and faster? This emerging field, called quantum machine learning, sits at the cutting edge of both technologies.

Think of it as teaching AI new tricks using quantum mechanics. Scientists are developing quantum neural networks, which work similarly to the neural networks in today’s AI but harness quantum properties like superposition to process information in entirely new ways. Instead of analyzing data one pattern at a time, these quantum versions could potentially evaluate multiple patterns simultaneously.
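Many quantum neural network proposals are variational circuits: data enters as rotation angles, trainable rotations act as weights, and measuring the circuit produces the output. Below is a one-qubit toy version simulated classically in pure Python. Gradients come from the parameter-shift rule, a technique used in this field that obtains a gradient from two extra runs of the circuit. The training data and the “true” angle of 0.7 are made-up assumptions; a real experiment would estimate the expectation values from repeated measurements on quantum hardware rather than computing them exactly.

```python
import math


def expectation_z(x: float, theta: float) -> float:
    """<Z> for one qubit after RY(x) (data encoding) then RY(theta) (trainable weight), starting from |0>."""
    # Two RY rotations on the same qubit combine into RY(x + theta)
    amp0 = math.cos((x + theta) / 2)
    amp1 = math.sin((x + theta) / 2)
    return amp0 ** 2 - amp1 ** 2  # P(0) - P(1), which equals cos(x + theta)


def parameter_shift_grad(x: float, theta: float) -> float:
    """d<Z>/dtheta from two shifted circuit evaluations (the parameter-shift rule)."""
    shift = math.pi / 2
    return (expectation_z(x, theta + shift) - expectation_z(x, theta - shift)) / 2


# Hypothetical training set: targets generated with a "true" weight of 0.7
inputs = [0.0, 0.5, 1.0, 1.5]
targets = [math.cos(x + 0.7) for x in inputs]

theta, lr = 0.0, 0.3
for _ in range(200):
    # Mean-squared-error gradient; the circuit's own derivative comes from the parameter shift
    grad = sum(2 * (expectation_z(x, theta) - y) * parameter_shift_grad(x, theta)
               for x, y in zip(inputs, targets)) / len(inputs)
    theta -= lr * grad

print(round(theta, 3))  # 0.7
```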

One particularly promising area involves optimization problems like the delivery-route puzzle described earlier. Classical computers struggle with these calculations because the number of possibilities explodes exponentially. Quantum algorithms might navigate these massive solution spaces more effectively, potentially finding good answers faster than current methods, though that advantage has yet to be demonstrated at practical scale.
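Quantum annealers (such as D-Wave’s machines) accept optimization problems written as a QUBO, short for quadratic unconstrained binary optimization: choose 0 or 1 for each variable to optimize a quadratic score. Gate-based approaches such as the Quantum Approximate Optimization Algorithm (QAOA) are commonly applied to the same kind of formulation. The sketch below writes a small max-cut problem (split a network into two groups so that as many links as possible cross between them) in QUBO form and solves it by brute force. The graph is a hypothetical example; the loop over all 2^n assignments is exactly what becomes hopeless as n grows.

```python
import itertools


def cut_value(assignment, edges):
    """Edges whose endpoints sit in different groups; x_i + x_j - 2*x_i*x_j is 1 exactly when they differ."""
    return sum(assignment[i] + assignment[j] - 2 * assignment[i] * assignment[j]
               for i, j in edges)


def brute_force_max_cut(n_nodes, edges):
    """Try all 2**n_nodes ways of splitting the nodes into groups 0 and 1."""
    best = max(itertools.product((0, 1), repeat=n_nodes),
               key=lambda assignment: cut_value(assignment, edges))
    return best, cut_value(best, edges)


# Hypothetical network: a square of four nodes plus one diagonal link
edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
best, value = brute_force_max_cut(4, edges)
print(best, value)  # (0, 1, 0, 1) 4
```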

Researchers are also investigating quantum approaches to classification tasks, where AI learns to categorize data like images or text. Early experiments with quantum support vector machines and quantum sampling techniques have shown intriguing results, though they’re still in laboratory settings with small datasets.
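Quantum support vector machines typically rely on a quantum kernel: each data point is encoded into a quantum state, and the similarity of two points is the squared overlap of their states. The sketch below computes such a kernel classically for a simple angle-encoding feature map (one RY rotation per feature); the feature map and data points are illustrative assumptions. This particular kernel has a cheap closed form, the product of cos^2((x_i - y_i)/2) over features, so it offers no quantum advantage; research focuses on feature maps whose kernels are believed to be hard to compute classically.

```python
import math


def encode(features):
    """Angle-encode each feature on its own qubit with RY; return the full 2**n-entry state vector."""
    state = [1.0]
    for f in features:
        qubit = (math.cos(f / 2), math.sin(f / 2))     # RY(f) applied to |0>
        state = [a * q for a in state for q in qubit]  # tensor (Kronecker) product
    return state


def quantum_kernel(x, y):
    """Similarity = squared overlap |<psi(x)|psi(y)>|^2 (all amplitudes are real here)."""
    overlap = sum(a * b for a, b in zip(encode(x), encode(y)))
    return overlap ** 2


def closed_form_kernel(x, y):
    """The same kernel computed directly, showing this feature map is easy to simulate classically."""
    return math.prod(math.cos((a - b) / 2) ** 2 for a, b in zip(x, y))


points = [(0.1, 0.9), (0.2, 1.0), (2.5, 0.3)]  # hypothetical 2-feature data points
gram = [[quantum_kernel(p, q) for q in points] for p in points]
for row in gram:
    print([round(v, 3) for v in row])  # 1.0 on the diagonal; nearby points score close to 1
```

A classical support vector machine can then be trained on this matrix of similarities; only the kernel evaluation would run on quantum hardware.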

The relationship between machine learning and quantum computing works both ways. While scientists explore using quantum computers for AI, they’re also using classical AI to improve quantum computers themselves, creating a fascinating feedback loop of innovation.

However, it’s important to understand that this research remains largely theoretical and experimental. We’re still years away from practical quantum machine learning applications you could use on your smartphone.

Real Problems Quantum AI Might Solve

While quantum AI remains largely experimental, researchers have identified several real-world problems where this combination could deliver breakthrough solutions that classical computers struggle with today.

One of the most promising areas is molecular simulation for drug discovery applications. Imagine trying to predict how a new medicine will interact with proteins in your body. Classical computers have to make educated guesses because calculating all the quantum interactions between molecules is incredibly complex. Quantum computers naturally operate on these same quantum principles, meaning they could simulate molecular behavior with far greater accuracy. Combined with AI’s pattern recognition capabilities, this could dramatically reduce the time it takes to develop new medications from years to months.

Optimization problems present another compelling use case. Think about logistics companies planning delivery routes, or financial institutions managing investment portfolios with countless variables. These problems grow exponentially complex as you add more factors. Quantum algorithms might search these vast solution spaces more efficiently, while AI learns which types of solutions work best for specific scenarios.

Pattern recognition in enormous datasets might also benefit. When processing massive datasets like climate models with billions of variables or genetic information from millions of people, quantum-enhanced AI could potentially identify subtle patterns that classical systems miss. A major open hurdle is loading such large classical datasets into a quantum computer efficiently. If it is overcome, the payoff could include earlier disease detection, better weather predictions, and deeper insights into complex systems.

The hoped-for advantage in all these scenarios is that quantum computing may excel at exploring vast possibility spaces, while AI excels at learning from data and making predictions based on discovered patterns.

The Reality Check: Why Quantum AI Isn’t Here Yet

The Technical Roadblocks

Building quantum computers powerful enough for AI isn’t just difficult—it’s one of the biggest engineering challenges humanity has ever tackled. To understand why, let’s break down the main obstacles.

The first problem is quantum decoherence. Remember how quantum computers rely on qubits existing in multiple states simultaneously? This delicate quantum state is incredibly fragile. Even the tiniest environmental disturbance (a stray photon, a vibration, or a temperature fluctuation) can cause qubits to “decohere,” meaning they lose their quantum properties and behave like ordinary bits. Imagine trying to balance a pencil on its tip while someone’s shaking the table. That’s essentially what researchers face, except the “shaking” comes from the universe itself. Many of today’s quantum computers, particularly superconducting designs, maintain coherence for only microseconds to milliseconds before errors creep in.
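A back-of-the-envelope sketch shows why short coherence times bite. It assumes, hypothetically, a coherence time of 100 microseconds, 50 nanoseconds per gate operation, and a simple exponential-decay model; these numbers are chosen for illustration, not taken from any specific machine. Under those assumptions only about two thousand operations fit before the quantum state has largely degraded.

```python
import math


def coherence_remaining(elapsed_s: float, t2_s: float) -> float:
    """Simple exponential model: fraction of quantum coherence left after elapsed_s seconds."""
    return math.exp(-elapsed_s / t2_s)


T2_S = 100e-6    # assumed coherence time: 100 microseconds (hypothetical)
GATE_S = 50e-9   # assumed time per gate operation: 50 nanoseconds (hypothetical)

print("gates per coherence time:", round(T2_S / GATE_S))  # 2000
for n_gates in (100, 1_000, 10_000):
    left = coherence_remaining(n_gates * GATE_S, T2_S)
    print(f"{n_gates:>6} gates -> {left:.3f} of coherence left")
```

Under these assumptions, 100 gates leave about 95% of the coherence, 1,000 gates about 61%, and 10,000 gates under 1%, while a large AI workload would need vastly more operations.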

This leads directly to the second challenge: error correction. Classical computers rarely make mistakes, but quantum computers are error-prone by nature. To fix this, scientists use quantum error correction, which combines many physical qubits into one reliable “logical qubit.” Some estimates suggest you might need around 1,000 physical qubits to create one error-corrected logical qubit, depending on how error-prone the hardware is. If practical AI applications eventually need thousands of logical qubits, that multiplies out to millions of physical qubits, and you can see the scale problem.
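The redundancy idea behind error correction can be sketched with its simplest classical ancestor, the three-bit repetition code: store each bit three times and take a majority vote. This is a deliberate simplification. Real quantum codes such as the surface code cannot simply copy qubits (quantum states can’t be cloned) and must catch phase errors as well as bit flips, which is a big part of why their overhead is so much higher. The error rates below are illustrative.

```python
import random


def logical_error_rate(p: float) -> float:
    """Exact failure probability of a 3-copy majority vote: 2 or 3 of the copies must flip."""
    return 3 * p ** 2 * (1 - p) + p ** 3


def simulate_logical_error_rate(p: float, trials: int, rng: random.Random) -> float:
    """Monte Carlo check: flip each of 3 copies independently with probability p, then vote."""
    failures = 0
    for _ in range(trials):
        flips = sum(rng.random() < p for _ in range(3))
        failures += flips >= 2  # majority corrupted, so the logical bit comes out wrong
    return failures / trials


for p in (0.1, 0.01):
    print(p, logical_error_rate(p))
print(simulate_logical_error_rate(0.1, 100_000, random.Random(0)))  # close to 0.028
```

Redundancy only pays off when the physical error rate is already small: at p = 0.01 the logical error rate drops to about 0.0003, but at p = 0.6 voting would make things worse.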

Finally, there’s the qubit quantity challenge. Today’s quantum computers have anywhere from dozens to roughly a thousand physical qubits, nowhere near enough for practical AI applications. Scaling up while maintaining stability and coherence remains an unsolved puzzle.

The Timeline Question

So when can we expect quantum-powered AI to arrive? The honest answer is that we’re still years, possibly decades, away from practical applications.

Many experts expect that quantum computers capable of genuinely enhancing AI workloads won’t emerge until the 2030s at the earliest. Right now, even the largest quantum processors, such as IBM’s, have only around 1,000 physical qubits, and they’re extremely prone to errors. To run meaningful AI algorithms, we’d likely need machines with millions of physical qubits working together as thousands of stable, error-corrected logical qubits.

Think of it like the early days of classical computing. The first computers filled entire rooms and could barely perform basic calculations. Today’s quantum computers are at a similar stage. They’re impressive laboratory demonstrations, but not yet ready to replace the powerful graphics processing units that currently train AI models.

However, progress is accelerating. Research labs worldwide are making breakthroughs in qubit stability and error correction. Some specific applications, like optimizing certain machine learning tasks or simulating molecular structures for drug discovery AI, might see quantum advantages within the next five to ten years.

The key takeaway? While quantum AI remains largely theoretical today, many researchers expect these technologies to converge in meaningful ways eventually; the open question is when.

Who’s Actually Working on Quantum AI

While quantum-AI might sound like science fiction, several major players are already investing heavily in making this combination a reality.

IBM has been at the forefront of this research, offering public access to real quantum computers through their cloud platform, originally launched as the IBM Quantum Experience and now called the IBM Quantum Platform. Their researchers are actively exploring how quantum computers could accelerate machine learning tasks, particularly in areas like optimization problems. In 2023, they demonstrated quantum algorithms that could potentially speed up certain AI calculations, though we’re still in the experimental phase.

Google made headlines in 2019 with their quantum supremacy claim, where their Sycamore processor solved a specific problem faster than classical supercomputers. Their quantum AI lab continues exploring how quantum computing might enhance neural network training and pattern recognition. However, it’s important to note that these achievements involve highly specialized tasks, not the everyday AI applications we currently use.

Microsoft takes a different approach through Azure Quantum, their cloud-based platform that combines classical and quantum computing resources. They’re developing quantum-inspired algorithms that run on regular computers but borrow concepts from quantum mechanics to solve complex problems more efficiently. This hybrid strategy aims to deliver practical benefits sooner while we wait for more powerful quantum hardware.

In academia, institutions like MIT, Stanford, and the University of Waterloo are pushing boundaries with research projects exploring quantum machine learning algorithms. These labs are tackling fundamental questions about which AI problems might actually benefit from quantum acceleration.

Startups like Rigetti Computing and IonQ are also entering the space, building quantum hardware and developing software specifically designed for machine learning applications.

The reality check? All these organizations are conducting research and running experiments. None have deployed quantum computers to power consumer-facing AI products yet. The work is impressive and promising, but we’re still in the early discovery phase, likely years away from practical, everyday applications.

What This Means for You Right Now

So what does all this mean for you today? Let’s break it down based on where you are in your journey with these technologies.

If you’re a student considering career paths, here’s the bottom line: focus on mastering classical AI and machine learning first. The job market is exploding with opportunities in traditional AI, and these skills will remain valuable even if quantum computing eventually enters the picture. Learn Python, understand neural networks, and get comfortable with frameworks like TensorFlow or PyTorch. Quantum computing knowledge is a nice bonus, but it shouldn’t be your primary focus unless you’re specifically pursuing advanced physics or quantum information science degrees.

For professionals already working with AI, you can breathe easy. Your current skills and tools aren’t becoming obsolete anytime soon. Classical computers will very likely keep powering AI applications for at least the next decade. However, it’s worth keeping quantum computing on your radar. It’s roughly where cloud computing was in 2005: interesting and emerging, but not yet critical to your daily work. Subscribing to a quantum computing newsletter or taking an introductory online course can help you stay informed without derailing your current projects.

If you’re an enthusiast wanting to stay informed, be skeptical of headlines claiming quantum computers will revolutionize AI tomorrow. When you see such claims, ask yourself: Is this about actual deployed technology or laboratory experiments? Does the article explain why quantum would help this specific problem? The real breakthroughs will likely arrive gradually, not as a single dramatic moment.

The practical takeaway for everyone: quantum-enhanced AI may well arrive, but it’s a marathon, not a sprint. Stay curious, remain skeptical of hype, and keep building your foundation in classical AI.

So, does AI use quantum computing? The straightforward answer is no—not yet. The AI tools you interact with daily, from ChatGPT to Netflix recommendations, all run on classical computers. These traditional machines have proven remarkably capable of powering the current AI revolution, and they’ll continue doing the heavy lifting for the foreseeable future.

That said, the future holds intriguing possibilities. Quantum computing might eventually enhance specific AI applications where it offers genuine advantages, particularly in optimization problems, drug discovery, or complex simulations. However, we’re still in the early experimental stages, and practical quantum-powered AI remains years away from your everyday devices.

The key takeaway? Stay curious but grounded. Don’t believe the hype suggesting quantum computers are secretly powering today’s AI—they’re not. Instead, appreciate the incredible achievements classical computing has already delivered while keeping an eye on emerging quantum research.

As you continue exploring the fascinating world of artificial intelligence, remember that understanding how these technologies actually work today is just as important as imagining their future potential. Keep learning, stay skeptical of sensational claims, and explore the many other dimensions of AI that are actively shaping our world right now.