Symbolic AI, once the cornerstone of early AI breakthroughs, represents humanity’s first systematic attempt to recreate intelligence through explicit rule-based programming. In the 1950s and 1960s, pioneering researchers believed that human intelligence could be reduced to a set of logical rules and symbols. That belief produced formal reasoning engines and, in the following decades, expert systems that could solve complex problems through predefined logical steps.

Unlike modern machine learning approaches that learn patterns from data, symbolic AI relies on human-encoded knowledge and reasoning frameworks. This fundamental difference shaped the field’s later shift from purely logical systems to today’s neural networks and to hybrid approaches that combine the strengths of both.

While symbolic AI’s limitations in handling uncertainty and natural language eventually led to the rise of statistical methods, its core principles of explainability and logical reasoning continue to influence contemporary AI development. Understanding this foundational approach is crucial for anyone seeking to grasp the full spectrum of artificial intelligence technologies and their evolution.

The Rise of Symbolic AI: Rule-Based Intelligence

Key Characteristics of Symbolic AI

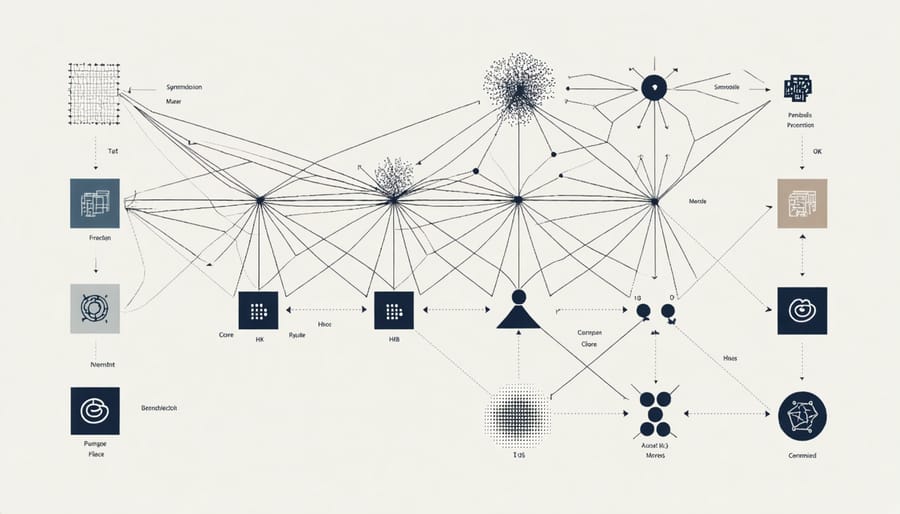

Symbolic AI, which dominated early research in artificial intelligence, is characterized by three fundamental components that define its approach to problem-solving. First, rule-based systems form the backbone of symbolic AI, using if-then statements and explicit rules to make decisions. These systems operate like a decision tree, following predefined paths based on specific conditions and inputs.
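The if-then mechanism described above can be sketched as a small forward-chaining loop. This is a minimal illustration, not a reconstruction of any historical system; the rule contents and the names `RULES` and `forward_chain` are assumptions made up for the example.

```python
# Each rule pairs a set of required facts with the fact it concludes.
# The rule contents are invented for illustration only.
RULES = [
    ({"has_fever", "has_cough"}, "possible_flu"),
    ({"possible_flu", "short_of_breath"}, "see_doctor"),
]


def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = forward_chain({"has_fever", "has_cough", "short_of_breath"}, RULES)
print(sorted(derived))
```

Because every conclusion comes from a named rule, the chain of reasoning can be read back step by step, which is the explainability property the article attributes to symbolic systems.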

The second key characteristic is logic programming, which allows computers to make deductions based on formal logical principles. Using languages like Prolog, symbolic AI systems can perform complex reasoning tasks by breaking down problems into logical statements and relationships. This approach resembles the step-by-step deductions people make when reasoning formally, making it particularly effective for tasks that require clear logical steps.
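To give a feel for how a logic program answers a query, here is a rough Python sketch of backward chaining with unification over flat terms. It only imitates the flavor of Prolog and is not how a real Prolog engine is implemented. The facts, the `grandparent` rule, and every function name are hypothetical, and the convention that variables start with an uppercase letter is borrowed from Prolog.

```python
import itertools

# Facts and one rule, written as flat tuples: (predicate, arg1, arg2).
FACTS = [
    ("parent", "alice", "bob"),
    ("parent", "bob", "carol"),
]
# Reads as: grandparent(X, Z) holds if parent(X, Y) and parent(Y, Z) hold.
RULES = [
    (("grandparent", "X", "Z"), [("parent", "X", "Y"), ("parent", "Y", "Z")]),
]

_counter = itertools.count()


def is_var(term):
    """Variables start with an uppercase letter, as in Prolog."""
    return term[0].isupper()


def walk(term, bindings):
    """Follow variable bindings until reaching a constant or a free variable."""
    while is_var(term) and term in bindings:
        term = bindings[term]
    return term


def unify(a, b, bindings):
    """Try to make two flat terms equal; return new bindings or None."""
    if len(a) != len(b):
        return None
    bindings = dict(bindings)
    for x, y in zip(a, b):
        x, y = walk(x, bindings), walk(y, bindings)
        if x == y:
            continue
        if is_var(x):
            bindings[x] = y
        elif is_var(y):
            bindings[y] = x
        else:
            return None
    return bindings


def rename(rule):
    """Give a rule fresh variable names so separate uses do not clash."""
    n = next(_counter)
    head, body = rule

    def fresh(term):
        return tuple(f"{t}_{n}" if is_var(t) else t for t in term)

    return fresh(head), [fresh(goal) for goal in body]


def solve(goals, bindings):
    """Prove each goal in turn from facts or rules, yielding bindings."""
    if not goals:
        yield bindings
        return
    first, rest = goals[0], goals[1:]
    for fact in FACTS:
        b = unify(first, fact, bindings)
        if b is not None:
            yield from solve(rest, b)
    for rule in RULES:
        head, body = rename(rule)
        b = unify(first, head, bindings)
        if b is not None:
            yield from solve(body + rest, b)


def query(goal):
    """Yield the goal with its variables filled in, once per solution."""
    for b in solve([goal], {}):
        yield tuple(walk(t, b) for t in goal)


print(list(query(("grandparent", "alice", "Who"))))
```

The sketch has no protection against rules that call themselves forever, which real logic programming systems have to deal with as well.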

Knowledge representation is the third crucial element, involving the structured organization of information in a way that computers can process. This includes semantic networks, frames, and ontologies that map relationships between concepts. For example, a symbolic AI system might represent the concept “car” as an object with properties like “has wheels,” “requires fuel,” and relationships to other concepts like “transportation” and “vehicle.”
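The “car” example can be written down as a tiny frame-style structure with is-a links. This is a sketch under stated assumptions: the `FRAMES` layout, the helper names, and the property attached to “vehicle” are invented here, and only the car properties and the links to “vehicle” and “transportation” come from the text.

```python
# Frames keyed by concept name; "is_a" points to the more general concept.
FRAMES = {
    "transportation": {"is_a": None, "properties": set()},
    # The property on "vehicle" is a hypothetical addition for illustration.
    "vehicle": {"is_a": "transportation", "properties": {"carries_people_or_cargo"}},
    "car": {"is_a": "vehicle", "properties": {"has_wheels", "requires_fuel"}},
}


def all_properties(concept):
    """Collect a concept's own properties plus those it inherits."""
    props = set()
    while concept is not None:
        frame = FRAMES[concept]
        props |= frame["properties"]
        concept = frame["is_a"]
    return props


def is_a(concept, ancestor):
    """Check whether a concept is linked to an ancestor through is-a links."""
    while concept is not None:
        if concept == ancestor:
            return True
        concept = FRAMES[concept]["is_a"]
    return False


print(sorted(all_properties("car")))
print(is_a("car", "transportation"))
```

Storing a property once on the general concept and inheriting it downward is one common reason such hierarchies are used, since it avoids repeating the same fact for every specific concept.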

These characteristics make symbolic AI particularly effective for tasks requiring explicit reasoning and rule following, such as expert systems in medical diagnosis or legal analysis. The approach excels in situations where problems can be clearly defined and rules can be explicitly stated.

Notable Symbolic AI Systems

Several symbolic AI systems have made significant contributions to artificial intelligence research and practical applications. ELIZA, developed in the 1960s at MIT, was one of the first natural language processing programs that could engage in text-based conversations by pattern matching and substitution. Though simple by today’s standards, it demonstrated the potential of rule-based systems in human-computer interaction.
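Pattern matching and substitution of the kind ELIZA relied on can be approximated in a few lines. The rules below are invented for illustration and are not taken from the original ELIZA script; `RULES`, `REFLECTIONS`, `reflect`, and `respond` are hypothetical names.

```python
import re

# Each rule is a pattern plus a reply template that reuses the captured text.
RULES = [
    (re.compile(r"\bI need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "How long have you been {0}?"),
]

# Swap first-person words for second-person ones in the echoed fragment.
REFLECTIONS = {"my": "your", "me": "you", "i": "you", "am": "are"}


def reflect(fragment):
    """Replace each word that has a reflection, leaving other words as-is."""
    return " ".join(REFLECTIONS.get(w.lower(), w) for w in fragment.split())


def respond(text):
    """Return the reply for the first matching rule, or a generic fallback."""
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            fragment = match.group(1).rstrip(".!?")
            return template.format(reflect(fragment))
    return "Please tell me more."


print(respond("I need my coffee."))
print(respond("Hello there"))
```

Nothing here models meaning: the program only rearranges the user’s own words, which is why such a simple mechanism could still appear conversational.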

XCON (Expert CONfigurer), developed at Carnegie Mellon University for Digital Equipment Corporation and put into use in 1980, became one of the first commercially successful expert systems. It helped configure computer systems and saved the company millions of dollars annually by automating complex technical decisions.

MYCIN, created at Stanford in the 1970s, was a groundbreaking medical diagnostic system that could identify bacterial infections and recommend antibiotics. Despite never being used in clinical practice, its development established important principles for expert systems in healthcare.

Cyc, an ambitious project started in 1984, aims to encode common-sense knowledge in a formal logical framework. While not achieving its original goals of human-like reasoning, Cyc remains one of the largest knowledge bases of human concepts and continues to contribute to semantic understanding in AI applications.

SHRDLU, developed by Terry Winograd at MIT around 1970, demonstrated sophisticated natural language understanding in a blocks world environment. It could hold a dialogue about moving blocks and reason about spatial relationships, showcasing the potential of symbolic reasoning in constrained domains.

These systems, while limited compared to modern AI, laid crucial groundwork for today’s hybrid approaches that combine symbolic and neural methods.

Limitations That Sparked Change

The Symbol Grounding Problem

The Symbol Grounding Problem represents one of the most significant challenges in symbolic AI: how can abstract symbols used by computers be connected to their real-world meanings? Imagine teaching a computer the word “cat.” While it can process the symbol “cat” and apply logical rules to it, the computer doesn’t truly understand what a cat is in the same way humans do through sensory experiences and interactions.

This disconnect between symbols and their meanings was named and formally described by cognitive scientist Stevan Harnad in 1990. The problem highlights a fundamental limitation of pure symbolic systems: they manipulate symbols based on syntax (rules) without understanding semantics (meaning).

To illustrate this, consider philosopher John Searle’s Chinese Room thought experiment: a person who knows no Chinese follows a rulebook for manipulating Chinese characters. They might be able to respond correctly to written questions, but they don’t actually understand Chinese. Similarly, traditional symbolic AI systems can follow programmed rules but lack genuine understanding of the concepts they manipulate.

This challenge has led researchers to explore hybrid approaches combining symbolic reasoning with sensory input processing, moving toward systems that can ground abstract symbols in real-world experiences and perceptions.

Scalability and Flexibility Issues

While symbolic AI showed early promise in controlled environments, its real-world implementation revealed significant limitations. The rigid rule-based systems struggled to handle the complexity and ambiguity of natural situations, and the resulting disappointment contributed to the funding downturns known as AI winters.

One of the main challenges is the “knowledge acquisition bottleneck”: the enormous task of manually coding all the rules and knowledge needed for a system to function effectively. As problems become more complex, the number of rules and the interactions between them grow rapidly, making maintenance and updates increasingly difficult.

Symbolic AI systems also lack the ability to generalize from examples or adapt to new situations without explicit programming. For instance, a symbolic AI chess program that excels at standard chess might completely fail when presented with a slight variation in the rules or board setup.

These systems also struggle with handling uncertainty and incomplete information, common in real-world scenarios. While they can process precise, well-defined problems effectively, they often falter when dealing with vague or imprecise concepts, natural language nuances, or situations requiring common-sense reasoning, all of which humans handle intuitively.

The Emergence of Subsymbolic AI

Neural Networks vs. Symbolic Systems

Neural networks and symbolic systems represent two fundamentally different approaches to artificial intelligence, each with its own strengths and limitations. Symbolic systems operate like a sophisticated chess player, using explicit rules and logical reasoning to make decisions. They work with symbols and relationships that humans can easily understand and interpret, making them particularly effective for tasks requiring clear reasoning and explanation.

In contrast, neural networks are loosely inspired by the human brain and learn patterns from data rather than from hand-written rules. They excel at tasks like image recognition and natural language processing, where patterns are complex and difficult to define through rules alone. While symbolic systems can explain their decision-making process step by step, neural networks often operate as “black boxes,” making it challenging to understand exactly how they arrive at their conclusions.

The key distinction lies in their knowledge representation. Symbolic systems store information in a structured, human-readable format using rules and relationships. Neural networks, however, encode knowledge in the weights and connections between artificial neurons, creating distributed representations that emerge from training data.

Both approaches have their place in modern AI. Symbolic systems shine in applications requiring explicit reasoning and transparency, such as medical diagnosis systems or legal analysis tools. Neural networks dominate in areas like computer vision and speech recognition, where pattern recognition is paramount. This complementary nature has led many researchers to explore hybrid approaches that combine the best of both worlds.

Learning from Data vs. Explicit Programming

The fundamental difference between symbolic AI and modern machine learning approaches lies in how they acquire and process knowledge. Traditional symbolic AI relies on explicit programming, where human experts manually code rules, facts, and logical relationships into the system. This approach mirrors how we might write a detailed instruction manual, with every possible scenario and response carefully mapped out.

Consider a chess-playing program from the 1980s: developers would explicitly program rules about piece movement, strategic positions, and common patterns. While this worked for well-defined problems, it proved impractical for more complex, real-world situations where rules are harder to define.

Modern AI systems, in contrast, learn from data. Instead of being told exactly what to do, they discover patterns and relationships by analyzing examples. A modern chess AI such as AlphaZero learns by playing millions of games against itself, identifying winning strategies and patterns that human programmers might never have considered.
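The contrast between writing a rule and learning one from examples can be shown on a deliberately tiny problem, the logical AND of two inputs. This is a toy sketch, far simpler than any real learning system: the hand-written function stands in for explicit programming, and a single perceptron stands in for learning from data. All names and the choice of 20 training passes are assumptions for illustration.

```python
def rule_based_and(x1, x2):
    """Explicit programming: a person writes the rule down directly."""
    return 1 if x1 == 1 and x2 == 1 else 0


def train_perceptron(examples, epochs=20):
    """Learn weights from labeled examples with the perceptron update rule."""
    w1, w2, bias = 0, 0, 0
    for _ in range(epochs):
        for (x1, x2), target in examples:
            predicted = 1 if w1 * x1 + w2 * x2 + bias > 0 else 0
            error = target - predicted  # -1, 0, or 1
            # Nudge the weights toward the correct answer when wrong.
            w1 += error * x1
            w2 += error * x2
            bias += error
    return w1, w2, bias


# Labeled examples of AND; the learner never sees the rule itself.
EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w1, w2, bias = train_perceptron(EXAMPLES)


def learned_and(x1, x2):
    """Learned version: the behavior lives in three numbers, not a rule."""
    return 1 if w1 * x1 + w2 * x2 + bias > 0 else 0


for (x1, x2), _ in EXAMPLES:
    print((x1, x2), rule_based_and(x1, x2), learned_and(x1, x2))
```

Both functions give the same answers here, but only the first can be read as a rule. The second encodes its knowledge in weights, which is a small-scale version of the interpretability gap discussed in this article.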

This shift from explicit programming to learning from data has revolutionized AI’s capabilities. Systems can now handle tasks that were once impossible to program explicitly, like recognizing faces in different lighting conditions or understanding natural language with its many nuances and contexts. However, this doesn’t mean symbolic AI is obsolete – many modern systems combine both approaches, using data-driven learning while maintaining the interpretability and logical reasoning capabilities of symbolic systems.

Modern Hybrid Approaches

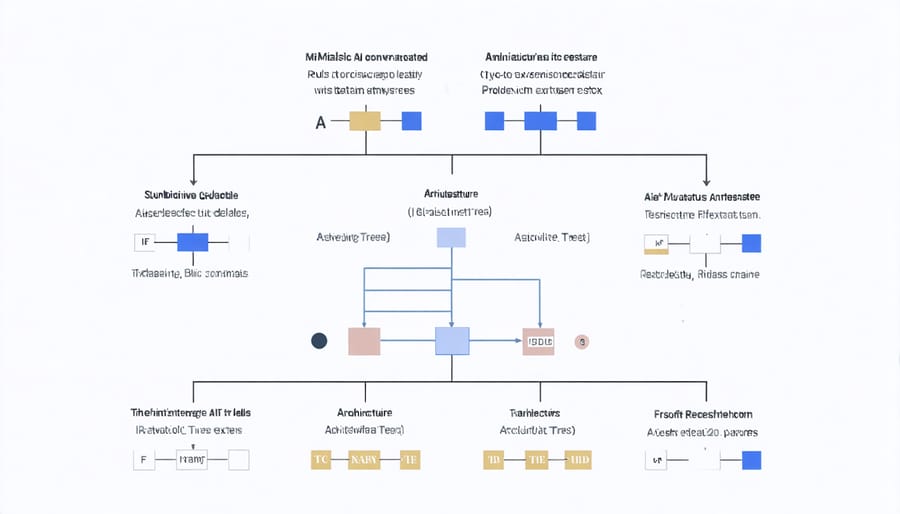

Neuro-Symbolic AI

Neuro-symbolic AI represents an innovative fusion of traditional symbolic reasoning with modern neural networks, addressing the limitations of both approaches. This hybrid methodology combines the interpretability and logical reasoning of symbolic AI with the pattern recognition and learning capabilities of neural networks.

In practice, neuro-symbolic systems might use neural networks to process raw input data, such as images or text, while employing symbolic reasoning to make logical deductions about the processed information. For example, in a medical diagnosis system, the neural network component might analyze medical images, while the symbolic component applies medical rules and guidelines to reach a final diagnosis.
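The division of labor in that medical example can be sketched as a two-stage pipeline. Everything here is hypothetical: `neural_image_model` is a hard-coded stand-in for a trained network, its scores are made up, and the two rules are invented for illustration rather than drawn from any medical guideline.

```python
def neural_image_model(image_id):
    """Stand-in for a trained network: returns made-up finding scores."""
    fake_outputs = {
        "scan_001": {"opacity_in_lung": 0.92, "enlarged_heart": 0.10},
        "scan_002": {"opacity_in_lung": 0.05, "enlarged_heart": 0.08},
    }
    return fake_outputs[image_id]


# Symbolic layer: explicit rules over named findings (illustrative only).
RULES = [
    ({"opacity_in_lung", "fever"}, "suspect_pneumonia"),
    ({"enlarged_heart"}, "refer_to_cardiology"),
]


def diagnose(image_id, patient_facts, threshold=0.5):
    """Turn confident neural scores into facts, then apply the rules."""
    scores = neural_image_model(image_id)
    image_facts = {name for name, p in scores.items() if p >= threshold}
    facts = set(patient_facts) | image_facts
    conclusions = []
    for conditions, conclusion in RULES:
        if conditions <= facts:
            # Keep the supporting facts so each conclusion can be explained.
            conclusions.append((conclusion, sorted(conditions)))
    return conclusions


print(diagnose("scan_001", {"fever"}))
print(diagnose("scan_002", {"fever"}))
```

Thresholding the scores into facts is the simplest possible bridge between the two components. It discards the network’s uncertainty, which is one of the integration problems that more elaborate neuro-symbolic designs try to address.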

This combination offers several advantages. The neural network component can handle uncertainty and learn from examples, while the symbolic component provides explainable reasoning and can incorporate human expertise. This makes neuro-symbolic AI particularly valuable in fields requiring both pattern recognition and logical reasoning, such as scientific discovery, legal analysis, and automated problem-solving.

Recent developments have shown promising results in areas like visual question answering, where systems need to understand both visual content and natural language queries. Companies are increasingly adopting neuro-symbolic approaches to create more robust and interpretable AI systems that can better handle complex real-world scenarios.

Despite its potential, challenges remain in seamlessly integrating these two paradigms. Researchers continue to explore new architectures and methodologies to better bridge the gap between neural and symbolic processing, pushing the boundaries of what AI systems can achieve.

Future Prospects

The future of symbolic AI lies in its integration with other AI approaches, particularly neural networks and machine learning systems. These hybrid systems are increasingly showing promise in combining the reasoning capabilities of symbolic AI with the pattern recognition strengths of modern machine learning. As modern AI applications become more sophisticated, the need for explainable and transparent decision-making processes grows more critical.

Researchers are exploring innovative ways to merge symbolic reasoning with deep learning, creating systems that can both learn from data and apply logical rules. This fusion could lead to AI systems that are better at handling complex reasoning tasks while maintaining the adaptability of machine learning. Areas like healthcare diagnosis, legal analysis, and autonomous vehicle decision-making could particularly benefit from these hybrid approaches.

The development of neuro-symbolic AI represents a particularly promising direction, where neural networks are enhanced with symbolic reasoning capabilities. This combination could help address current AI limitations in areas requiring common sense reasoning and causal understanding. Future applications might include more sophisticated natural language processing systems that can better understand context and nuance, improved automated planning systems, and more reliable autonomous decision-making platforms.

Looking ahead, symbolic AI principles could play a crucial role in developing more trustworthy and interpretable AI systems, addressing current concerns about AI transparency and accountability.

The journey from symbolic AI to modern artificial intelligence systems represents one of the most significant shifts in computer science history. What began as a rules-based approach to mimicking human reasoning has evolved into a rich tapestry of methodologies that combine the best of both symbolic and subsymbolic approaches. This transition wasn’t simply about abandoning one approach for another – rather, it demonstrated our growing understanding of intelligence itself.

The limitations of purely symbolic systems, such as their inability to handle uncertainty and learn from experience, led to the rise of machine learning and neural networks. However, recent developments show that the future of AI likely lies in hybrid systems that leverage both approaches. These systems can combine the logical reasoning and explainability of symbolic AI with the pattern recognition and adaptability of neural networks.

This evolution has profound implications for the future of artificial intelligence. While early symbolic AI helped us understand logical reasoning and knowledge representation, modern approaches are teaching us about learning, adaptation, and the complex nature of intelligence. As we move forward, the integration of symbolic reasoning with machine learning continues to open new possibilities, from more robust autonomous systems to AI that can better explain its decision-making process.

The lesson learned isn’t that one approach is superior, but that different aspects of intelligence require different tools. This understanding is shaping how we develop AI systems today, leading to more sophisticated and capable artificial intelligence that can better serve human needs.