From primitive calculators to self-driving cars, artificial intelligence has undergone a remarkable transformation that continues to reshape our world. That evolution, marked by a series of early breakthroughs, represents one of humanity’s most ambitious technological leaps. What began as simple pattern-matching algorithms in the 1950s has evolved into sophisticated systems capable of generating art, engaging in natural conversations, and solving complex problems that once seemed impossible for machines.

Today’s AI systems, powered by advanced neural networks and deep learning architectures, process information in ways that increasingly mirror human cognitive functions. This evolution wasn’t just about software improvements – the exponential growth in computing power, particularly through specialized hardware like GPUs and TPUs, has been instrumental in unlocking AI’s current capabilities.

As we stand at the cusp of a new era in artificial intelligence, understanding its evolutionary journey becomes crucial for anyone interested in technology’s future. From healthcare to space exploration, AI’s impact continues to expand, making its development story not just a tale of technological achievement, but a blueprint for humanity’s next great leap forward.

The GPU Revolution: When Graphics Cards Became AI Powerhouses

From Gaming to Deep Learning

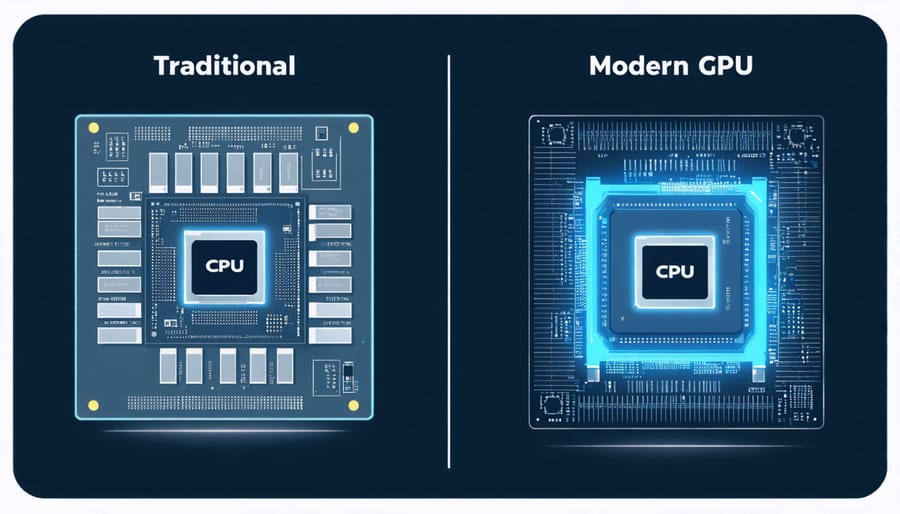

One of the most fascinating stories in AI’s evolution is how graphics cards, originally designed for rendering video game graphics, became the backbone of modern artificial intelligence. In the mid-2000s, researchers discovered that Graphics Processing Units (GPUs) were exceptionally good at performing the parallel computations needed for training neural networks.

This advantage comes from GPUs’ ability to run many operations simultaneously, a capability originally intended for shading the millions of pixels in a game scene. While CPUs excel at sequential tasks, GPUs can handle thousands of small calculations at once, making them well suited to the matrix operations fundamental to deep learning.
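To make the parallelism concrete, here is a minimal Python sketch (not GPU code) of a matrix product in which every output cell is an independent dot product. The names `dot_cell` and `matmul_parallel` are made up for illustration, and the thread pool only stands in for a GPU's many cores; it will not make pure Python faster.

```python
from concurrent.futures import ThreadPoolExecutor


def dot_cell(a, b, i, j):
    """Compute one output cell C[i][j]; it depends on no other output cell."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul_parallel(a, b, workers=4):
    """Multiply a (n x m) by b (m x p), submitting one independent task per cell."""
    n, p = len(a), len(b[0])
    cells = [(i, j) for i in range(n) for j in range(p)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Tasks share no state, so they could run in any order or all at once.
        values = list(pool.map(lambda ij: dot_cell(a, b, *ij), cells))
    # Reassemble the flat, order-preserved results into rows of length p.
    return [values[r * p:(r + 1) * p] for r in range(n)]


if __name__ == "__main__":
    a = [[1, 2], [3, 4]]
    b = [[5, 6], [7, 8]]
    print(matmul_parallel(a, b))  # [[19, 22], [43, 50]]
```

Because no cell waits on another, the same work can be spread across as many execution units as the hardware offers, which is the property GPUs exploit.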

NVIDIA, a company primarily known for gaming hardware, recognized this potential and developed CUDA, a platform that allowed developers to use GPUs for general-purpose computing. This breakthrough dramatically accelerated AI training times, reducing processes that once took weeks to just days or hours.

The gaming industry’s constant push for better graphics inadvertently drove improvements in GPU technology that benefited AI development. Features like increased memory bandwidth, better floating-point precision, and more efficient parallel processing became crucial for both gaming and AI applications.

Today, specialized AI accelerators have emerged, but GPUs remain central to AI development, demonstrating how innovation in one field can unexpectedly revolutionize another. This transformation represents one of the most significant hardware developments in AI’s history, enabling the deep learning revolution we’re experiencing today.

NVIDIA’s Game-Changing Architecture

NVIDIA’s introduction of CUDA (Compute Unified Device Architecture) in 2006 marked a pivotal moment in AI development. This groundbreaking technology allowed developers to harness the parallel processing power of graphics cards for complex mathematical computations, essentially transforming gaming hardware into AI powerhouses.

Before CUDA, training neural networks was painfully slow, often taking weeks or months. NVIDIA’s architecture changed this by enabling thousands of calculations to run simultaneously, dramatically accelerating AI model training. This innovation helped researchers tackle increasingly complex problems while getting more useful computation out of each watt of power.

The company later introduced data-center GPUs aimed at compute workloads, from the Tesla product line to the more recent A100. Beginning with the Volta generation in 2017, these processors added tensor cores, specialized circuits that excel at the matrix multiplication operations crucial for deep learning. Depending on the workload and the baseline, this hardware optimization can deliver training speedups of up to 20 times over traditional CPUs.
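Tensor cores are usually described as performing a fused multiply-accumulate on small, fixed-size matrix tiles. The sketch below imitates that idea in plain Python by splitting a larger product into tile-sized multiply-accumulate steps. The function names and the tile size of 2 are assumptions chosen for illustration, not a model of any particular chip.

```python
def tile_mma(a, b, c, i0, j0, k0, t):
    """Accumulate one t x t tile product into c in place: C_tile += A_tile * B_tile."""
    for i in range(i0, i0 + t):
        for j in range(j0, j0 + t):
            acc = c[i][j]
            for k in range(k0, k0 + t):
                acc += a[i][k] * b[k][j]
            c[i][j] = acc


def tiled_matmul(a, b, t=2):
    """Multiply square matrices whose size is a multiple of the tile size t."""
    n = len(a)
    if n % t != 0:
        raise ValueError("matrix size must be a multiple of the tile size")
    c = [[0] * n for _ in range(n)]
    for i0 in range(0, n, t):
        for j0 in range(0, n, t):
            for k0 in range(0, n, t):
                # On real hardware, this inner step is the unit of work
                # that a dedicated matrix circuit would execute.
                tile_mma(a, b, c, i0, j0, k0, t)
    return c


if __name__ == "__main__":
    identity = [[1 if i == j else 0 for j in range(4)] for i in range(4)]
    m = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
    print(tiled_matmul(identity, m) == m)  # True
```

Breaking the product into tiles is what lets a fixed-size circuit handle matrices of any size: the hardware repeats the same small operation many times.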

NVIDIA’s commitment to AI development extends beyond hardware. Their CUDA toolkit and libraries, like cuDNN, provide developers with essential tools for building and optimizing AI applications. This comprehensive ecosystem has become the de facto standard for AI research and development, powering everything from autonomous vehicles to medical imaging analysis.

Memory and Storage: Breaking AI’s Speed Barriers

High-Bandwidth Memory

High-Bandwidth Memory (HBM) represents a revolutionary leap in AI hardware that has dramatically accelerated modern machine learning capabilities. This innovative memory technology stacks multiple layers of DRAM vertically, creating a compact package that sits much closer to the processor than traditional memory configurations.

Think of HBM as a multi-story library where all the books (data) are arranged vertically instead of spread across a vast floor. This vertical arrangement allows AI systems to access huge amounts of data much faster, similar to having all reference materials within arm’s reach rather than scattered across different rooms.

The impact of HBM on AI training has been remarkable. Alongside faster processors, it has helped cut training times for large models from weeks to days and made real-time inference more practical for complex applications. For example, image recognition systems that once took seconds to process a single frame can now analyze multiple frames per second, enabling practical applications like autonomous driving and real-time video analysis.

Recent generations of HBM, such as HBM2E and HBM3, have pushed the aggregate memory bandwidth of a single accelerator beyond 2 terabytes per second, allowing AI models to be fed with data far faster than conventional memory allows. This advancement has been crucial in enabling increasingly sophisticated AI models while keeping power consumption and physical footprint manageable.
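As a rough back-of-the-envelope check on the 2 terabytes per second figure, peak bandwidth can be estimated from the number of memory stacks, the interface width of each stack, and the data rate per pin. The values used here (five stacks, a 1024-bit interface per stack, 3.2 Gb/s per pin) are illustrative assumptions in the range of HBM2E-class parts, not figures taken from a specific datasheet.

$$
B = N_{\text{stacks}} \times \frac{W \times r}{8}
  = 5 \times \frac{1024\ \text{bit} \times 3.2\ \text{Gb/s}}{8\ \text{bit/byte}}
  = 5 \times 409.6\ \text{GB/s}
  \approx 2.05\ \text{TB/s}
$$

Under these assumptions, the bandwidth comes mainly from the very wide interface rather than from an unusually high clock rate, which is part of why HBM can stay comparatively power-efficient.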

SSD and NVMe Innovations

The evolution of AI has been significantly influenced by advancements in storage technology, particularly Solid State Drives (SSDs) and NVMe (Non-Volatile Memory Express) solutions. These storage innovations have addressed a critical bottleneck in AI development: the need to quickly access and process massive datasets.

Traditional hard drives, with their mechanical parts and slower read/write speeds, simply couldn’t keep pace with the demanding requirements of AI training. The introduction of SSDs marked a revolutionary change, offering data access up to 100 times faster than conventional hard drives, with the largest gains on random reads. This breakthrough meant AI models could be trained more efficiently, with reduced waiting times for data loading and processing.

NVMe technology took this advancement even further by creating a direct pathway between storage and the CPU through the PCIe interface. This direct connection eliminated the bottlenecks associated with older storage protocols, enabling data transfer speeds of up to 7,000 MB/s in modern NVMe drives – a game-changing improvement for AI applications.
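To put the throughput figure in perspective, the short sketch below estimates how long one sequential pass over a dataset would take at a given sustained throughput. The 1 TB dataset size and the 150 MB/s hard-drive figure are assumptions for illustration; only the 7,000 MB/s NVMe figure comes from the text, and real workloads rarely sustain peak rates.

```python
def read_time_seconds(dataset_bytes, throughput_mb_per_s):
    """Ideal time to stream a dataset once at a sustained sequential throughput."""
    if throughput_mb_per_s <= 0:
        raise ValueError("throughput must be positive")
    return dataset_bytes / (throughput_mb_per_s * 1_000_000)


if __name__ == "__main__":
    dataset_bytes = 1_000_000_000_000  # hypothetical 1 TB training set
    hdd_mb_s = 150    # assumed hard-drive sequential throughput
    nvme_mb_s = 7000  # NVMe figure quoted in the text

    hdd = read_time_seconds(dataset_bytes, hdd_mb_s)
    nvme = read_time_seconds(dataset_bytes, nvme_mb_s)
    print(f"HDD:  {hdd / 60:.1f} min")   # HDD:  111.1 min
    print(f"NVMe: {nvme / 60:.1f} min")  # NVMe: 2.4 min
```

Since training typically reads the dataset once per epoch, a difference of this size is paid again on every pass unless the data fits in memory.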

These storage innovations have been particularly crucial for deep learning algorithms, which often need to process terabytes of training data. Fast storage solutions have made it possible to implement more complex AI models, handle larger datasets, and achieve faster training times, ultimately contributing to the rapid advancement of AI capabilities we see today.

Custom AI Chips: Purpose-Built for Intelligence

TPUs and Neural Processors

As AI workloads became more demanding, even powerful GPUs struggled to meet the computational needs of advanced neural networks efficiently. In 2016, Google introduced its Tensor Processing Units (TPUs), custom-built chips specifically designed for machine learning tasks. These specialized processors revolutionized AI computing by optimizing the specific mathematical operations common in neural networks, particularly matrix multiplication and convolution operations.
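For readers unfamiliar with the convolution operation mentioned above, here is a minimal pure-Python sketch. It uses the unflipped form (strictly a cross-correlation) that deep learning frameworks commonly call convolution, with no padding and a stride of 1. The function name `conv2d_valid` and the sample data are made up for illustration.

```python
def conv2d_valid(image, kernel):
    """Slide kernel over image (no padding, stride 1) and sum elementwise products."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            # Each output value is a small multiply-accumulate over one window.
            acc = 0
            for dr in range(kh):
                for dc in range(kw):
                    acc += image[r + dr][c + dc] * kernel[dr][dc]
            row.append(acc)
        out.append(row)
    return out


if __name__ == "__main__":
    image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    kernel = [[1, 0], [0, -1]]
    print(conv2d_valid(image, kernel))  # [[-4, -4], [-4, -4]]
```

Like matrix multiplication, the operation reduces to long runs of multiply-and-add steps, which is why a chip built around that one pattern can serve both.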

TPUs proved to be game-changers. In Google’s own benchmarks on production inference workloads, the first-generation TPU delivered 15-30x higher performance and 30-80x higher performance-per-watt than contemporary GPUs and CPUs. This efficiency enabled Google to power services like Google Search, Google Translate, and Google Photos with advanced AI capabilities while keeping energy costs manageable.

Following Google’s lead, other tech giants developed their own neural processors. Apple introduced its Neural Engine in the A11 Bionic chip, bringing AI capabilities to mobile devices. Meta (formerly Facebook) created its AI-optimized hardware for data centers, while Amazon developed the AWS Inferentia chip for cloud-based AI applications.

These custom processors marked a significant shift in AI hardware evolution, moving from general-purpose computing to specialized architectures. This transition enabled more complex AI models to run efficiently, paving the way for breakthrough applications like BERT for natural language processing and real-time computer vision systems. Today, neural processors continue to evolve, with each new generation bringing improved performance and energy efficiency.

Edge AI Hardware

The rise of edge AI hardware represents one of the most significant advancements in artificial intelligence deployment. Unlike traditional AI systems that rely on cloud computing, these specialized chips bring AI capabilities directly to mobile phones, smart devices, and IoT sensors, enabling real-time processing without internet connectivity.

Leading this revolution are neural processing units (NPUs) and application-specific integrated circuits (ASICs) designed specifically for AI tasks. Apple’s Neural Engine, found in iPhones and iPads, can perform billions of operations per second while consuming minimal power. Similarly, Google’s Edge TPU and the AI hardware in Qualcomm’s Snapdragon chips, which developers target through the Snapdragon Neural Processing Engine SDK, bring advanced AI capabilities to Android devices and IoT applications.

These specialized chips excel at tasks like voice recognition, image processing, and natural language understanding right on the device. This local processing not only improves response times but also enhances privacy by keeping sensitive data on the device rather than sending it to remote servers.

The impact extends beyond smartphones. Smart home devices, security cameras, and industrial sensors now incorporate AI chips that can make intelligent decisions instantly. For instance, modern doorbell cameras can distinguish between people, animals, and vehicles without cloud assistance, while factory sensors can detect equipment failures in real-time.

As these chips become more powerful and energy-efficient, we’re seeing AI capabilities expand into even smaller devices, paving the way for truly intelligent everyday objects.

The Future of AI Hardware

Quantum Computing Promise

Quantum computing stands poised to revolutionize artificial intelligence by solving complex problems that classical computers find nearly impossible to tackle. Unlike traditional bits that exist in either 0 or 1 states, quantum bits (qubits) can exist in a combination of both states at once through a phenomenon called superposition, which lets certain quantum algorithms work on many possibilities in parallel.
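In standard textbook notation, the superposition described above is written as a weighted combination of the two basis states, where the weights are complex amplitudes:

$$
|\psi\rangle = \alpha\,|0\rangle + \beta\,|1\rangle, \qquad |\alpha|^2 + |\beta|^2 = 1
$$

Measuring the qubit yields 0 with probability $|\alpha|^2$ and 1 with probability $|\beta|^2$. A register of $n$ qubits is described by $2^n$ such amplitudes, which is the source of the parallelism, although a measurement returns only one outcome, so algorithms must be designed to make the useful answer the likely one.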

This quantum advantage could accelerate some machine learning workloads, particularly in areas like optimization, pattern recognition, and deep learning. Proponents suggest, for instance, that training large language models, which currently takes months of processing time, might one day be completed in hours or minutes, although no such speedup has yet been demonstrated on real hardware.

As global AI hardware innovation continues to advance, researchers are exploring quantum neural networks and quantum machine learning algorithms that could process vast amounts of data more efficiently than ever before. These developments could unlock new possibilities in drug discovery, climate modeling, and financial analysis.

However, significant challenges remain. Quantum computers currently struggle with maintaining qubit stability and require extremely low temperatures to operate. Despite these obstacles, tech giants and startups alike are investing heavily in quantum AI research, recognizing its potential to trigger the next major leap in artificial intelligence capabilities.

When quantum computers become practically viable, they could enable AI systems to solve previously intractable problems and process information in ways that mirror the complexity of human thought more closely than current technologies allow.

Neuromorphic Computing

Imagine a computer that thinks like a human brain – that’s the fascinating premise behind neuromorphic computing. This innovative approach to AI hardware design takes inspiration from the structure and function of biological neural networks, creating chips that process information more like our brains do than traditional computers.

Unlike conventional processors that separate memory and computing, neuromorphic chips integrate both functions, similar to how neurons and synapses work together in our brains. This design allows for more efficient processing of AI tasks while consuming significantly less power. Companies like Intel, with their Loihi chip, and IBM, with their TrueNorth processor, are leading the charge in this revolutionary field.
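Chips such as Loihi and TrueNorth are built around spiking neurons rather than conventional arithmetic units. A common textbook model for such a neuron is the leaky integrate-and-fire neuron, sketched below in plain Python. The function name and the `leak` and `threshold` values are assumptions chosen for illustration and do not describe either chip's actual neuron model.

```python
def simulate_lif(inputs, leak=0.9, threshold=1.0):
    """Return a 0/1 spike train for a single leaky integrate-and-fire neuron."""
    potential = 0.0  # state is kept with the neuron, next to the computation
    spikes = []
    for current in inputs:
        # Leak a little stored charge, then integrate the new input.
        potential = potential * leak + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    print(simulate_lif([0.5, 0.5, 0.5, 0.0, 1.2]))  # [0, 0, 1, 0, 1]
```

The neuron does meaningful work only when a spike arrives or fires, which hints at why event-driven hardware of this kind can be so frugal with power.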

The advantages of neuromorphic computing are compelling. These systems can learn from their environment in real-time, adapt to new situations, and operate with remarkable energy efficiency. For example, a neuromorphic chip can process visual information for robotics using just a fraction of the power needed by traditional processors.

Looking ahead, neuromorphic computing could revolutionize AI applications in edge devices, autonomous vehicles, and smart sensors. Imagine smartphones that can process complex AI tasks without draining their batteries, or robots that can learn and adapt to new environments as naturally as humans do. While still in its early stages, this brain-inspired technology represents a promising direction for the future of AI hardware.

The remarkable evolution of AI has been inextricably linked to advances in computing hardware, demonstrating how crucial technological infrastructure is to artificial intelligence progress. From the early adoption of GPUs that accelerated neural network training to today’s specialized AI processors, hardware improvements have consistently unlocked new possibilities in AI capabilities.

The exponential growth in processing power, memory capacity, and energy efficiency has enabled AI systems to tackle increasingly complex tasks that were once thought impossible. Today’s AI hardware can process massive datasets and run sophisticated algorithms at speeds that would have been unimaginable just a decade ago, making applications like real-time natural language processing and computer vision practical realities.

Looking ahead, emerging technologies like quantum computing, neuromorphic chips, and advanced semiconductor designs promise to push AI capabilities even further. These innovations could potentially solve current limitations in processing power and energy consumption, opening doors to more sophisticated AI applications.

However, hardware development remains both a catalyst and a constraint for AI advancement. While we continue to push the boundaries of what’s possible, the future of AI will largely depend on our ability to create more powerful, efficient, and specialized computing solutions. As we stand on the cusp of new hardware breakthroughs, it’s clear that the next chapter in AI’s evolution will be written in silicon as much as in software.