The warehouse robot glides past human workers, its cameras constantly scanning, artificial intelligence making split-second decisions about which package to grab next. This scene, once pure science fiction, now unfolds in thousands of facilities worldwide where robotics and AI have merged into something greater than either technology alone.

Robotics gives AI hands, wheels, and wings to interact with the physical world. AI gives robots eyes, ears, and brains to make sense of their surroundings. Together, they create machines that don’t just follow pre-programmed instructions but adapt, learn, and respond to changing environments in real time.

The integration happens at multiple layers. Computer vision algorithms process camera feeds so robots can identify objects. Machine learning models predict optimal movement paths. Natural language processing enables voice commands. Sensor fusion combines data from multiple sources to build comprehensive environmental awareness. These aren’t separate systems bolted together; they form an interconnected hardware-software ecosystem where each component enhances the others.

From surgical robots that assist in delicate procedures to autonomous vehicles navigating busy streets, from agricultural drones monitoring crop health to robotic assistants helping elderly individuals maintain independence, AI-integrated robotics is reshaping industries and daily life. The technology faces real challenges around safety, reliability, and ethical deployment, but the trajectory is clear: intelligent machines are moving from controlled factory floors into complex, unpredictable human environments, learning to work alongside us rather than simply for us.

What Makes AI-Integrated Robotics Different From Traditional Robots

Think of traditional robots as meticulous recipe followers. They execute pre-programmed instructions with precision, repeating the same movements in exactly the same way, every single time. If you move an object even slightly out of position, a traditional robot won’t adapt—it’ll continue its programmed routine, potentially missing the target entirely or causing errors.

AI-integrated robots, on the other hand, are more like experienced chefs who understand cooking principles and can adjust on the fly. They learn from experience, recognize patterns, and make decisions based on their environment. This fundamental shift from rigid programming to adaptive intelligence represents the core difference between the two approaches.

Three capabilities make this possible, and they work together. Machine learning allows robots to improve their performance over time by analyzing data from previous tasks. Instead of requiring a programmer to anticipate every possible scenario, these systems identify patterns and develop strategies from data. A warehouse robot, for example, can learn the most efficient routes by analyzing thousands of delivery runs.
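
One simple way to picture "learning routes from delivery runs" is a multi-armed bandit. The sketch below is a hypothetical epsilon-greedy learner, not any vendor's actual system. The route names, the travel times, and the `RouteLearner` class are all invented for illustration. The learner usually picks the route with the best average time so far, and it occasionally tries another route in case conditions have changed.

```python
import random


class RouteLearner:
    """Epsilon-greedy bandit that learns which route is fastest (hypothetical)."""

    def __init__(self, routes, epsilon=0.1, seed=None):
        self.routes = list(routes)
        self.epsilon = epsilon              # fraction of runs spent exploring
        self.rng = random.Random(seed)
        self.counts = {r: 0 for r in self.routes}
        self.mean_time = {r: 0.0 for r in self.routes}

    def choose(self):
        # Try every route once before trusting any averages.
        for r in self.routes:
            if self.counts[r] == 0:
                return r
        # Occasionally explore; otherwise exploit the best average so far.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.routes)
        return self.best()

    def record(self, route, seconds):
        # Incremental mean: no need to store every past run.
        self.counts[route] += 1
        self.mean_time[route] += (seconds - self.mean_time[route]) / self.counts[route]

    def best(self):
        tried = [r for r in self.routes if self.counts[r] > 0]
        return min(tried, key=lambda r: self.mean_time[r])


# Simulated delivery runs with invented average travel times (seconds).
TRUE_MEAN = {"aisle_A": 42.0, "aisle_B": 35.0, "aisle_C": 50.0}
learner = RouteLearner(TRUE_MEAN, epsilon=0.1, seed=7)
noise = random.Random(1)
for _ in range(500):
    route = learner.choose()
    learner.record(route, noise.gauss(TRUE_MEAN[route], 3.0))
print(learner.best(), learner.counts)
```

Nobody tells the learner which aisle is fastest. It works that out from noisy run times, and it spends most of its runs on that aisle.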

Computer vision serves as the robot’s eyes, enabling it to interpret visual information. Using cameras and trained algorithms, AI-integrated robots can identify objects, read text, detect defects in manufactured parts, or navigate around obstacles. This transforms robots from blind machines following fixed coordinates into systems that perceive and react to what is actually in front of them.

Sensor fusion brings everything together by combining data from multiple sources (cameras, touch sensors, microphones, and motion detectors) into a single, more reliable picture of the environment. Each sensor covers for the others’ weaknesses, much as you use sight, touch, and hearing together to interact with the world.
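
A common building block of sensor fusion is inverse-variance weighting. When two sensors independently measure the same quantity, each reading is weighted by how precise it is. This sketch assumes independent, roughly Gaussian errors, and the camera and LIDAR numbers are made up. Real robots typically use a Kalman filter, which applies the same idea repeatedly over time.

```python
def fuse_estimates(readings):
    """Inverse-variance fusion of independent measurements of one quantity.

    readings: list of (value, variance) pairs in the same units.
    Returns (fused_value, fused_variance). Assumes independent,
    roughly Gaussian sensor errors.
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / variance for _, variance in readings]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, readings)) / total
    return fused, 1.0 / total


# Hypothetical distance-to-shelf readings in meters:
camera = (2.10, 0.2 ** 2)   # camera depth estimate: noisier (std dev 20 cm)
lidar = (2.00, 0.02 ** 2)   # LIDAR: more precise (std dev 2 cm)
distance, variance = fuse_estimates([camera, lidar])
print(f"{distance:.3f} m, std dev {variance ** 0.5:.4f} m")
```

The fused estimate lands close to the more trustworthy LIDAR reading. Its variance is also smaller than that of either sensor on its own.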

The practical impact is substantial. Traditional robots excel at repetitive tasks in controlled environments, while AI-integrated systems thrive in dynamic, unpredictable settings where the ability to adapt matters more than exact repetition.

The Three Core Components Working Together

Sensors: The Robot’s Eyes, Ears, and Skin

Robots perceive their surroundings through an array of sensors that function like artificial eyes, ears, and skin. These sensors collect environmental data that feeds directly into decision-making algorithms, enabling machines to understand and respond to their world.

Cameras serve as the primary visual sensors, capturing images that computer vision algorithms process to identify objects, people, and obstacles. LIDAR (Light Detection and Ranging) goes further. It fires laser pulses, times how long each one takes to bounce back, and converts those times into distances, building a precise 3D map of the surroundings. You’ll find LIDAR atop many autonomous vehicles, constantly scanning the road to detect everything from pedestrians to potholes.
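
The distance arithmetic behind pulsed, time-of-flight LIDAR is short enough to show directly. This sketch assumes a simple pulsed sensor scanning in a flat plane. Many units use phase-shift or FMCW ranging instead, and real scanners also correct for noise and motion. The function names are hypothetical.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s):
    """One-way distance from a pulse's round-trip time (out and back, so halve it)."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def scan_to_points(scan):
    """Turn (beam_angle_deg, round_trip_s) returns into 2D points in meters."""
    points = []
    for angle_deg, round_trip_s in scan:
        r = tof_distance_m(round_trip_s)
        a = math.radians(angle_deg)
        points.append((r * math.cos(a), r * math.sin(a)))
    return points


# A pulse that returns after 100 nanoseconds hit something about 15 m away.
print(round(tof_distance_m(100e-9), 3))
print(scan_to_points([(0.0, 20e-9), (90.0, 20e-9)]))
```

Light covers about 30 cm per nanosecond. Each nanosecond of timing error therefore shifts the measured distance by roughly 15 cm, which is why LIDAR electronics need extremely precise clocks.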

Tactile sensors give robots a sense of touch, allowing collaborative robots in factories to detect when they encounter unexpected resistance and stop immediately to prevent accidents. These force-sensitive systems let robots handle delicate objects like eggs. Where a risk assessment permits, they also let robots work alongside human colleagues without protective barriers.

Inertial Measurement Units (IMUs) combine accelerometers and gyroscopes to track movement and orientation, helping drones maintain stable flight and delivery robots navigate uneven terrain. Together, these sensors create a comprehensive sensory system that transforms raw data into actionable intelligence, bridging the gap between physical hardware and artificial intelligence.
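
IMU readings are commonly fused with a complementary filter. This sketch estimates tilt about a single axis. The sample format, the 100 Hz rate, the 0.98 blend factor, and the gyro bias are illustrative assumptions rather than values from any specific drone or robot.

```python
import math


def complementary_filter(samples, dt, alpha=0.98, angle0=0.0):
    """Estimate a tilt angle in degrees from (gyro_rate_dps, accel_y, accel_z) samples."""
    angle = angle0
    estimates = []
    for gyro_rate_dps, accel_y, accel_z in samples:
        # Gravity direction gives an absolute (but noisy) tilt angle.
        accel_angle = math.degrees(math.atan2(accel_y, accel_z))
        # Integrated gyro is smooth but drifts; blend the two.
        angle = alpha * (angle + gyro_rate_dps * dt) + (1 - alpha) * accel_angle
        estimates.append(angle)
    return estimates


# Simulated robot held still at a 10-degree tilt, with a gyro that has a
# (made-up) 0.5 deg/s bias. Integrating the gyro alone would drift 5 degrees
# in 10 seconds; the filter settles near 10 degrees instead.
tilt = math.radians(10.0)
samples = [(0.5, math.sin(tilt), math.cos(tilt))] * 1000   # 10 s at 100 Hz
estimates = complementary_filter(samples, dt=0.01)
print(round(estimates[-1], 2))
```

The gyro bias leaves a small offset, about 0.25 degrees here. Lowering `alpha` shrinks that offset, at the cost of letting more accelerometer noise through.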

Processing Power: The Brain Behind the Brawn

A robot’s intelligence is only as good as its ability to think quickly. This is where specialized processing hardware becomes critical. Think of it like this: a self-driving car can’t wait several seconds to decide whether to brake for a pedestrian—it needs to process visual data and make decisions in milliseconds.

Modern AI-powered robots rely on chips designed specifically for artificial intelligence workloads. Graphics Processing Units (GPUs) excel at the parallel computations that neural networks require. Tensor Processing Units (TPUs), custom-built by Google, accelerate machine learning tasks even further. These processors perform thousands of calculations simultaneously, making real-time decision-making possible.

But here’s where location matters: should the robot process data on board or send it to the cloud? Cloud computing offers massive processing power and storage, ideal for training complex AI models. However, it introduces latency, the delay while data travels to a remote server and back. For time-sensitive operations like robotic surgery or autonomous navigation, edge processors built directly into the robot handle computations locally. This avoids network delays that could be dangerous.

Many modern robots use a hybrid approach: edge processors for split-second decisions, cloud connectivity for learning and updates. This combination delivers both speed and intelligence, ensuring robots can react instantly while continuously improving their capabilities.
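
The hybrid split can be expressed as a simple placement rule. The policy, the `Task` fields, and every timing number below are hypothetical, chosen for illustration rather than taken from any particular robot. Safety-critical work always stays onboard. Other work goes to the cloud only when the network round trip still fits the deadline and the cloud finishes sooner overall.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    deadline_ms: float        # how soon a result is needed
    edge_compute_ms: float    # estimated run time on the onboard processor
    cloud_compute_ms: float   # estimated run time on a cloud server
    safety_critical: bool = False


def choose_target(task: Task, network_rtt_ms: float) -> str:
    """Hypothetical edge/cloud placement policy for one task."""
    if task.safety_critical:
        # Never let a braking or collision decision wait on the network.
        return "edge"
    cloud_total_ms = network_rtt_ms + task.cloud_compute_ms
    if cloud_total_ms <= task.deadline_ms and cloud_total_ms < task.edge_compute_ms:
        return "cloud"   # fast enough even after the round trip, and faster overall
    if task.edge_compute_ms <= task.deadline_ms:
        return "edge"
    return "infeasible"  # neither option meets the deadline


obstacle = Task("obstacle_check", deadline_ms=50, edge_compute_ms=20,
                cloud_compute_ms=5, safety_critical=True)
retrain = Task("retrain_grasp_model", deadline_ms=24 * 3600 * 1000,
               edge_compute_ms=10 * 3600 * 1000, cloud_compute_ms=20 * 60 * 1000)
print(choose_target(obstacle, network_rtt_ms=80))   # edge
print(choose_target(retrain, network_rtt_ms=80))    # cloud
```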

Actuators and Control Systems: Turning Thoughts Into Actions

Once AI algorithms decide what action a robot should take, actuators become the crucial bridge between digital intelligence and physical movement. Think of actuators as the robot’s muscles—they convert electrical signals from the control system into actual motion.

Motors and servos are the most common actuators you’ll encounter. Standard motors provide continuous rotation for wheels or conveyor systems, while servo motors offer precise angular control, perfect for robotic arms that need to stop at exact positions. Consider a warehouse robot picking packages: its AI vision system identifies the item, calculates the optimal grip angle, then sends commands to servo motors that position the arm with millimeter accuracy.

The control system acts as the robot’s nervous system, coordinating these movements. It receives high-level instructions from the AI (“pick up box”) and translates them into specific motor commands (rotate joint one 45 degrees, extend joint two 12 centimeters). Advanced systems use feedback loops, constantly checking sensor data to adjust movements in real-time.
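
Feedback loops like the one described above are most often built as PID (proportional-integral-derivative) controllers. The sketch below is a textbook PID driving a deliberately simple simulated joint. The plant model, gains, and load are assumptions for illustration, not values from any real arm. The proportional term reacts to the current error, the integral term removes persistent offsets, and the derivative term damps fast changes.

```python
class PID:
    """Textbook PID controller: output = kp*e + ki*integral(e) + kd*de/dt."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement, dt):
        error = setpoint - measurement
        self.integral += error * dt
        # Skip the derivative on the first call to avoid a startup spike.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_joint(target_deg=45.0, kp=8.0, ki=15.0, kd=0.1,
                   load_dps=-5.0, dt=0.01, steps=500):
    """Drive a toy joint toward target_deg for steps*dt seconds.

    Toy plant (assumption): the controller output is a commanded joint
    velocity in deg/s, and a constant load drags the joint at load_dps.
    """
    pid = PID(kp, ki, kd)
    angle = 0.0
    for _ in range(steps):
        command = pid.update(target_deg, angle, dt)   # read sensor, compute correction
        angle += (command + load_dps) * dt            # joint responds, load pulls back
    return angle


print(round(simulate_joint(), 3))          # settles on 45 degrees
print(round(simulate_joint(ki=0.0), 3))    # stalls short of target
```

With the integral gain set to zero, the same joint stalls about 0.6 degrees short of its target. That gap is the load divided by `kp`, or 5/8 of a degree, and it shows why the integral term matters. Production controllers also clamp their output and guard against integral windup, both of which this sketch omits.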

Precision requirements vary dramatically by application. A surgical robot performing delicate procedures needs sub-millimeter accuracy, while an agricultural robot harvesting crops can tolerate centimeter-level variance. Self-driving cars require their steering actuators to respond within milliseconds to AI navigation decisions, balancing smooth passenger comfort with split-second safety maneuvers. This marriage of intelligent decision-making and mechanical precision defines modern robotics.

Real-World Applications Transforming Industries

Manufacturing and Logistics

In factories and warehouses worldwide, a quiet revolution is transforming how products move from assembly line to shipping dock. Collaborative robots, affectionately called “cobots,” now work side-by-side with human workers on manufacturing floors. Unlike their predecessors behind safety cages, these AI-powered assistants can sense human presence and adjust their movements accordingly. At BMW’s assembly plants, cobots help workers install door seals by recognizing variations in car models and adapting their grip pressure in real-time.

Warehouse logistics has evolved dramatically with smart robots that continuously learn and optimize their routes. Amazon’s fulfillment centers deploy thousands of mobile robots that navigate dynamic environments, avoiding collisions while calculating the most efficient paths to retrieve items. These systems use machine learning to predict peak demand periods and reposition inventory proactively.
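
Fleets like these need path planning that avoids obstacles and other robots. Amazon has not published its exact planner, so the sketch below shows a textbook A* search on a grid map instead. The grid, the `#` obstacle marking, and 4-way movement are simplifying assumptions. When another robot occupies a cell, the planner can mark that cell as blocked and re-plan.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of strings; '#' cells are obstacles.

    Returns a list of (row, col) cells from start to goal, or None if unreachable.
    Manhattan distance is the heuristic (admissible for 4-way moves).
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]   # entries: (f = g + h, g, cell)
    came_from = {start: None}
    g = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:       # walk back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > g[cell]:
            continue                      # stale heap entry; a cheaper route was found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_cost = cost + 1
                if new_cost < g.get((nr, nc), float("inf")):
                    g[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (new_cost + h((nr, nc)), new_cost, (nr, nc)))
    return None


floor = [
    "....",
    ".##.",   # shelving units
    "....",
]
path = astar(floor, (0, 0), (2, 3))
print(path)
```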

Adaptive assembly systems represent another breakthrough. In electronics manufacturing, AI-guided robots can switch between different product configurations without extensive reprogramming. They use computer vision to identify components and adjust assembly sequences on the fly, dramatically reducing changeover time when production shifts from one product to another. This flexibility lets manufacturers handle smaller batches economically and respond faster to market demands, with a consistency that can exceed manual assembly.

Healthcare and Surgery

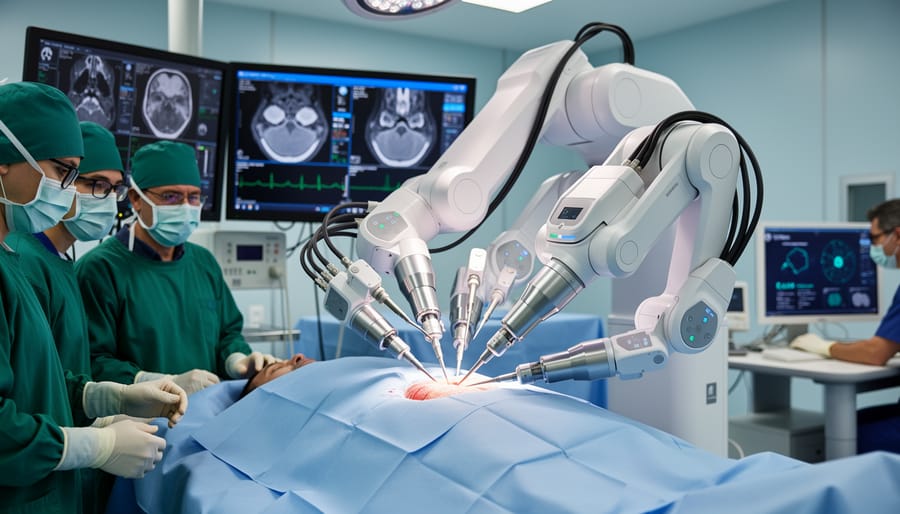

The fusion of robotics and AI is revolutionizing healthcare by bringing unprecedented precision and personalization to patient care. Consider the da Vinci Surgical System, in which a surgeon at a console controls robotic arms during minimally invasive procedures. The system filters out hand tremors, scales the surgeon’s movements down, and provides magnified 3D views, allowing surgeons to operate with millimeter-level precision. This can translate to smaller incisions, reduced blood loss, and faster patient recovery.

Beyond the operating room, rehabilitation robots are transforming physical therapy. Devices like Ekso Bionics’ exoskeletons use AI algorithms to analyze patient movement patterns in real-time, adjusting resistance and support levels to match individual recovery progress. The system learns from each session, creating personalized therapy plans that adapt as patients regain strength and mobility.

AI-powered systems are also advancing diagnostics and hospital logistics. Autonomous robots now navigate hospital corridors delivering medications and supplies. Meanwhile, AI-enhanced imaging systems analyze medical scans far faster than a person can read them and flag potential issues like tumors or fractures for review. In some studies, their accuracy on narrow tasks rivals that of specialists. These systems don’t replace healthcare professionals but augment their capabilities, allowing doctors to focus on patient interaction while AI handles data-intensive analysis.

Agriculture and Environmental Monitoring

Modern farms are increasingly becoming high-tech operations where robots and AI work alongside human farmers to boost efficiency and sustainability. Agricultural robots equipped with computer vision can distinguish between crops and weeds with remarkable accuracy, enabling precise herbicide application that reduces chemical use by up to 90%. This targeted approach benefits both the environment and farm economics.

Autonomous drones have revolutionized crop monitoring by flying over fields and using AI vision systems to detect early signs of disease, pest infestations, or nutrient deficiencies. These aerial scouts analyze plant health through multispectral imaging, identifying problems invisible to the human eye and allowing farmers to intervene before issues spread.

Harvesting robots represent another breakthrough, using AI to determine ripeness and pick delicate fruits like strawberries without bruising. These machines work around the clock during peak season, addressing labor shortages while ensuring consistent quality. The technology is particularly valuable for crops requiring careful handling, where traditional mechanical harvesters would cause damage.

Home and Service Robotics

The robotics revolution has quietly entered our homes, transforming everyday life in remarkable ways. What began with simple robotic vacuum cleaners like the Roomba has evolved into sophisticated AI-powered assistants that genuinely learn and adapt to our needs.

Modern home robots go far beyond scheduled cleaning. Today’s smart home companions use computer vision to identify obstacles, machine learning to map efficient routes, and natural language processing to respond to voice commands. For example, newer vacuum models remember which rooms need more frequent attention and adjust their schedules accordingly. Some camera-equipped models can also recognize and steer around hazards such as pet waste and charging cables.

The most exciting developments are happening in elder care robotics. Companion robots can now monitor daily routines, detect falls, remind seniors about medications, and alert caregivers to unusual behavior patterns that might indicate health concerns. These systems learn individual preferences over time, such as preferred meal times or exercise routines, becoming more helpful the longer they operate in a household. This adaptive intelligence represents a significant leap from pre-programmed devices to truly responsive assistants that enhance independence and safety for aging populations.

The Challenges Engineers Are Solving Right Now

Power and Energy Efficiency

One of the biggest headaches for mobile robots running AI is power consumption. Think of it this way: your smartphone battery drains quickly when using GPS and camera apps simultaneously. Now imagine a robot constantly processing video streams, making split-second decisions, and controlling multiple motors—all while navigating unpredictable environments. Traditional processors gulp energy when running complex AI models, limiting how long robots can operate between charges.

Current solutions involve a balancing act. Some engineers offload heavy processing to cloud servers, reducing onboard power needs. Others make AI models “lighter” through techniques such as quantization and pruning, sacrificing some accuracy for efficiency. Some robots carry larger battery packs, but this adds weight and reduces agility.
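
A back-of-the-envelope calculation shows why the compute budget matters. All of the wattages below are invented for illustration. Cutting the AI processor's draw from 60 W to 15 W, for example by running a lighter model, stretches this hypothetical robot's runtime by more than an hour without adding any battery weight.

```python
def runtime_hours(battery_wh, motors_w, compute_w, sensors_w):
    """Rough runtime: stored energy divided by average total power draw."""
    return battery_wh / (motors_w + compute_w + sensors_w)


# Hypothetical delivery robot with a 500 Wh battery.
baseline = runtime_hours(500, motors_w=80, compute_w=60, sensors_w=10)
lighter_model = runtime_hours(500, motors_w=80, compute_w=15, sensors_w=10)
print(f"{baseline:.1f} h -> {lighter_model:.1f} h")
```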

A promising longer-term fix lies in neuromorphic chips, processors designed to mimic how our brains work. These specialized chips can run some AI algorithms using a fraction of the power traditional processors require, potentially extending robot operating time from hours to days. Companies are also exploring energy harvesting technologies, where robots capture energy from their surroundings through solar panels or kinetic motion.

Safety and Reliability

Ensuring AI robots operate safely in real-world settings requires multiple layers of protection. Engineers implement fail-safe mechanisms that act like emergency brakes, immediately stopping robots when sensors detect unexpected obstacles or behaviors. For example, collaborative robots in manufacturing facilities use force-limiting sensors that halt movement the instant they encounter unexpected resistance, preventing workplace injuries.
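
The force-limiting behavior can be sketched as a latching check on each sensor reading. This is a simplified illustration with a made-up 20 N tolerance. Real cobots run this kind of logic in certified safety controllers, with limits derived from risk assessments and standards such as ISO/TS 15066.

```python
from enum import Enum


class ArmState(Enum):
    RUNNING = "running"
    PROTECTIVE_STOP = "protective_stop"


def check_contact(state, expected_force_n, measured_force_n, tolerance_n=20.0):
    """Return the arm's next state given one force-sensor reading.

    tolerance_n is a made-up threshold; real limits come from a risk assessment.
    """
    if state is ArmState.PROTECTIVE_STOP:
        return state  # latched: stays stopped until a person resets it
    if abs(measured_force_n - expected_force_n) > tolerance_n:
        return ArmState.PROTECTIVE_STOP  # unexpected resistance: halt now
    return ArmState.RUNNING


state = ArmState.RUNNING
for expected, measured in [(10.0, 12.0), (10.0, 45.0), (10.0, 10.0)]:
    state = check_contact(state, expected, measured)
    print(state.name)
```

The stop is deliberately latched. Once the arm has halted, a normal reading afterward does not restart it on its own.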

Testing these systems presents unique challenges because robots must handle scenarios engineers can’t always predict. Companies use simulation environments to expose AI robots to thousands of virtual situations, from crowded warehouses to unpredictable weather conditions. Amazon’s warehouse robots, for instance, undergo extensive testing where programmers introduce random variables to see how the AI adapts.

Redundancy is another critical safety feature. Autonomous delivery robots typically have backup sensors and dual processing systems, so if one component fails, another takes over. Engineers also program “safety envelopes” that define boundaries the robot cannot cross, regardless of what its AI decides.
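
Both ideas fit in a few lines. In this hypothetical sketch, median voting over redundant sensors tolerates one faulty reading. A fixed `SafetyEnvelope` then clamps whatever target and speed the AI planner proposes. The zone limits and sensor values are invented.

```python
import statistics
from dataclasses import dataclass


def vote(readings):
    """Median of redundant sensor readings; None marks a sensor that reported a fault."""
    healthy = [r for r in readings if r is not None]
    if len(healthy) < 2:
        # Too few healthy sensors to cross-check: the caller should stop safely.
        raise RuntimeError("insufficient redundant sensors")
    return statistics.median(healthy)


@dataclass(frozen=True)
class SafetyEnvelope:
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    max_speed: float

    def enforce(self, x, y, speed):
        """Clamp a planner's proposed target and speed, whatever the planner decided."""
        return (
            min(max(x, self.x_min), self.x_max),
            min(max(y, self.y_min), self.y_max),
            min(max(speed, 0.0), self.max_speed),
        )


# Three range sensors; one has failed high. The median ignores it.
print(vote([1.02, 0.98, 9.50]))
# The AI proposes leaving the allowed zone at 3 m/s; the envelope overrides it.
zone = SafetyEnvelope(x_min=0.0, x_max=10.0, y_min=0.0, y_max=5.0, max_speed=1.5)
print(zone.enforce(12.0, -1.0, 3.0))
```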

Regular monitoring and continuous learning help identify potential issues before they become dangerous. Many AI robots send performance data to central systems, allowing teams to spot unusual patterns and update safety protocols remotely, ensuring reliable operation as these machines encounter new challenges.
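
Spotting unusual patterns in telemetry can start with something as simple as a rolling z-score. The sketch below assumes a plain list of readings and a threshold of 3 standard deviations. Both the data and the threshold are illustrative, and fleet operators may use far more sophisticated models.

```python
import statistics


def flag_anomalies(values, window=20, threshold=3.0):
    """Return indices of readings more than `threshold` standard deviations
    from the mean of the preceding `window` readings (rolling z-score)."""
    flagged = []
    for i in range(window, len(values)):
        recent = values[i - window:i]
        mean = statistics.fmean(recent)
        stdev = statistics.stdev(recent)
        # stdev > 0 guards against division by zero on perfectly flat data.
        if stdev > 0 and abs(values[i] - mean) / stdev > threshold:
            flagged.append(i)
    return flagged


# Invented motor-current telemetry (amps): steady, then a sudden spike.
current = [2.0, 2.1] * 15 + [3.5]
print(flag_anomalies(current))
```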

Cost and Accessibility

The price tag for AI-powered robots has traditionally kept them out of reach for smaller businesses and educational institutions. Industrial robotic arms with advanced AI capabilities can cost anywhere from $50,000 to over $250,000, while sophisticated humanoid robots reach into the millions. This financial barrier has historically limited innovation to well-funded corporations and research labs.

However, the landscape is shifting dramatically. The rise of open-source AI frameworks like TensorFlow and PyTorch has eliminated expensive licensing fees, allowing developers to build sophisticated intelligence systems at minimal cost. Meanwhile, manufacturing advances have reduced hardware prices—sensors that cost thousands just five years ago now sell for under $100.

Companies like Universal Robots and startups focused on collaborative robots are introducing affordable options starting around $20,000. Educational robotics kits powered by AI now cost less than $500, making hands-on learning accessible to schools and hobbyists. Cloud-based AI services further reduce entry barriers by eliminating the need for expensive on-site computing infrastructure, allowing users to pay only for what they use and scale as needed.

What’s Coming Next: The Near-Future of Intelligent Robots

The next few years promise exciting developments in intelligent robotics, with several breakthroughs already moving from research labs to real-world prototypes. These emerging technologies aren’t science fiction—they’re grounded in current research and approaching practical deployment.

One of the most significant advances is in tactile sensing technology. While robots today can see and navigate their environments, many struggle with the delicate touch needed for complex tasks. New sensor arrays mimicking human skin can now detect pressure, texture, and temperature simultaneously. Companies like Meta and startups such as GelSight are developing fingertip sensors that give robots the sensitivity to handle fragile objects like eggs or strawberries without crushing them. This means robots could soon assist in delicate assembly work, surgical procedures, or even elderly care with a genuinely gentle touch.

Brain-inspired computing architectures, called neuromorphic chips, represent another leap forward. Conventional processors run on a fixed clock and shuttle data back and forth between separate memory and compute units. Neuromorphic chips instead use networks of artificial spiking neurons that keep memory next to computation and do work only when a spike arrives, much like our brains. Intel’s Loihi and IBM’s TrueNorth chips have already been demonstrated in research prototypes, including robotics experiments, making fast decisions while consuming far less power. Imagine warehouse robots that can work entire shifts without recharging or drones that fly longer missions.
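
Neuromorphic chips are built around spiking neurons. As a rough illustration, here is a leaky integrate-and-fire neuron, a common simplified spiking-neuron model. It is not the actual programming model of Loihi or TrueNorth, and the parameter values are assumptions. The neuron's potential leaks away over time, accumulates input, and emits a spike only when it crosses a threshold, so activity stays sparse.

```python
def lif_neuron(input_current, dt=1.0, tau=20.0, v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Leaky integrate-and-fire neuron; returns the time steps at which it spiked."""
    v = v_rest
    spikes = []
    for t, i_in in enumerate(input_current):
        # Leak toward the resting potential, then integrate the input.
        v += dt * (-(v - v_rest) / tau + i_in)
        if v >= v_thresh:
            spikes.append(t)   # fire a spike...
            v = v_reset        # ...and reset the membrane potential
    return spikes


print(lif_neuron([0.0] * 50))   # no input, no spikes, no work
print(lif_neuron([0.1] * 50))   # steady input yields regular spikes
```

Silence costs nothing in this model. Only the spikes, when they happen, need to be communicated, and that sparsity is where much of the energy saving in event-driven hardware comes from.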

Swarm robotics is transforming how we think about automation entirely. Rather than building one sophisticated robot, researchers are creating teams of simpler robots that collaborate like ant colonies. At Harvard’s Wyss Institute, swarms of tiny robots already coordinate to build structures and transport objects together. This approach could revolutionize disaster response, with dozens of small robots searching collapsed buildings faster than any single machine.
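
A classic swarm building block is distributed averaging, also called consensus. Each robot repeatedly nudges its estimate toward those of the neighbors it can talk to, and the whole swarm converges on the group average without any central controller. This is a generic textbook sketch, not the specific algorithms used in the Wyss Institute projects. The robot IDs, ring topology, and step size are assumptions. Convergence to the exact average relies on two conditions: neighbor links must be two-way, and the step size must be small enough. A step below one over the largest neighbor count is a standard safe choice.

```python
def consensus(values, neighbors, rounds=50, step=0.3):
    """Distributed averaging: each robot moves its value toward its neighbors'.

    values: dict robot_id -> local estimate (e.g., a proposed meeting point)
    neighbors: dict robot_id -> list of robot_ids it can communicate with
    """
    x = dict(values)
    for _ in range(rounds):
        # Every robot updates from the previous round's values simultaneously.
        x = {i: x[i] + step * sum(x[j] - x[i] for j in neighbors[i]) for i in x}
    return x


# Four robots in a ring, each talking only to its two neighbors.
start = {"r0": 10.0, "r1": 20.0, "r2": 30.0, "r3": 40.0}
ring = {"r0": ["r1", "r3"], "r1": ["r0", "r2"], "r2": ["r1", "r3"], "r3": ["r2", "r0"]}
agreed = consensus(start, ring)
print({k: round(v, 3) for k, v in agreed.items()})
```

No robot ever sees the whole swarm, yet all four agree on 25.0, the average of their starting values.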

Finally, human-robot collaboration interfaces are becoming remarkably intuitive. New systems use natural gestures, voice commands, and even brain-computer interfaces to make working alongside robots feel seamless rather than complicated. These advances promise a future where humans and machines truly work as partners.

We’ve journeyed through the fascinating world where artificial intelligence meets physical robotics, witnessing a transformation that goes far beyond simple automation. AI-integrated robotics represents a fundamental shift in how machines interact with our world—moving from pre-programmed tools that follow rigid scripts to adaptive systems that learn, perceive, and respond to their environments in real-time.

Think back to those factory robots welding car frames in exactly the same spot, day after day. Now imagine collaborative robots working alongside humans, adjusting their movements based on what they see and sense, learning from each interaction. That’s the leap we’re witnessing. From warehouse robots navigating dynamic spaces to surgical systems assisting with delicate procedures, AI hasn’t just made robots smarter—it’s made them truly useful partners in solving complex, real-world challenges.

Of course, this technology brings important questions about safety, ethics, and accessibility that require thoughtful consideration as development continues. The potential, however, remains extraordinary. Whether you’re a student exploring career paths, a professional looking to stay current, or simply someone fascinated by how technology shapes our future, there’s never been a more exciting time to engage with these innovations.

Ready to dive deeper? Explore Ask Alice for comprehensive guides on machine learning algorithms, computer vision techniques, and emerging AI trends. The future of robotics and AI is being written now—and understanding it starts with curiosity and continued learning.