As artificial intelligence continues to reshape our world at an unprecedented pace, the ethical implications of AI development have moved from theoretical discussions to urgent, real-world challenges. From autonomous vehicles making split-second moral decisions to AI-powered surveillance systems raising privacy concerns, we’re navigating complex ethical territories that demand immediate attention and thoughtful solutions.

The intersection of AI capability and ethical responsibility has become a critical battleground where innovation meets human values. Recent incidents, like biased hiring algorithms and facial recognition controversies, have highlighted how AI systems can inadvertently perpetuate societal inequalities or infringe on fundamental human rights. These challenges are not merely technical problems to be solved, but profound ethical dilemmas that question the very nature of human-machine interaction and accountability.

Today’s AI ethics landscape encompasses issues ranging from data privacy and algorithmic bias to transparency and accountability in AI decision-making. As we entrust increasingly sophisticated AI systems with more consequential decisions – from medical diagnoses to criminal justice assessments – the stakes for getting ethics right have never been higher. Organizations, governments, and technology leaders are grappling with establishing frameworks that ensure AI development serves humanity’s best interests while promoting innovation.

This exploration of AI ethics issues reveals not just the challenges we face, but also the unprecedented opportunity to shape the future relationship between human society and artificial intelligence. Understanding these ethical dimensions is crucial for anyone involved in developing, deploying, or interacting with AI systems – which, in today’s world, means virtually everyone.

The Historical Journey of AI Ethics

Early Ethical Frameworks

The foundations of AI ethics can be traced back to the earliest days of artificial intelligence, and in fiction even earlier. As the idea of thinking machines took hold, writers and scientists recognized the need for ethical guidelines to govern these powerful new technologies.

Isaac Asimov’s Three Laws of Robotics, introduced in his 1942 short story “Runaround,” became the first widely recognized framework for AI ethics. The laws state that a robot must not injure a human or, through inaction, allow a human to come to harm; must obey human orders unless they conflict with the first law; and must protect its own existence as long as doing so does not conflict with the first two laws. Although conceived as fiction, these principles have significantly influenced modern AI ethics discussions.

The 1956 Dartmouth Conference marked the birth of artificial intelligence as a field, and the prospect of machines that could think soon raised questions about its ethical implications. Norbert Wiener, the mathematician who founded cybernetics, had already emphasized the importance of building safeguards into automated systems, warning in works such as The Human Use of Human Beings (1950) about the unintended consequences of delegating decisions to machines.

These early frameworks, though relatively simple by today’s standards, established crucial foundational principles that continue to shape contemporary AI ethics debates. They highlighted key concerns about human safety, machine autonomy, and the balance between technological advancement and ethical constraints – themes that remain central to current discussions about AI development and deployment.

Emergence of Modern AI Ethics

The dawn of the 21st century marked a significant shift in AI ethics, turning theoretical discussions into pressing practical concerns. As AI systems advanced rapidly, society faced new challenges that demanded immediate attention.

The emergence of sophisticated machine learning algorithms, autonomous vehicles, and AI-powered decision-making systems in healthcare and criminal justice brought ethical considerations from academic discourse into everyday life. Between 2014 and 2019, companies such as Google, Facebook, and Microsoft set up internal AI ethics boards, committees, or teams, acknowledging the need for ethical frameworks in commercial AI development.

Key turning points included the 2018 Cambridge Analytica scandal, in which personal data from millions of Facebook users was harvested without their consent for political targeting, and growing awareness of algorithmic bias in facial recognition systems. These real-world incidents demonstrated how AI ethics directly affects human lives and social justice.

The period also saw the formation of influential organizations like the Partnership on AI (2016) and the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems. These bodies helped establish practical guidelines for ethical AI development, moving beyond philosophical debates to actionable principles.

Today, modern AI ethics focuses on immediate challenges like data privacy, algorithmic fairness, transparency, and accountability. The field continues to evolve, balancing technological advancement with human rights and social responsibility.

Core Ethical Challenges in Modern AI

Bias and Fairness

Algorithmic bias represents one of the most pressing challenges in AI ethics today, as AI systems can inadvertently perpetuate and amplify existing societal prejudices. This occurs when machine learning models are trained on historical data that contains inherent biases, leading to discriminatory outcomes in areas like hiring, lending, and criminal justice.

For example, facial recognition systems have shown significantly lower accuracy rates for people of color and women, leading to concerns about their deployment in law enforcement and security applications. Similarly, AI-powered recruitment tools have demonstrated gender bias, often favoring male candidates over equally qualified female applicants due to historical hiring patterns.

The impact of these biases extends beyond individual cases, potentially creating systemic discrimination at scale. When AI systems make decisions about loan approvals, healthcare access, or insurance rates, biased algorithms can perpetuate existing social inequalities and create new forms of digital discrimination.

To address these challenges, organizations and researchers are developing various approaches:

– Diverse and representative training data sets

– Regular algorithmic audits for bias detection

– Implementation of fairness metrics in AI development

– Cross-functional teams including ethicists and social scientists

– Transparent documentation of AI system limitations and potential biases
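
To make “fairness metrics” and “algorithmic audits” more concrete, here is a minimal Python sketch of one common audit check: comparing selection rates across demographic groups and computing the disparate impact ratio (the lowest group’s selection rate divided by the highest). The group labels and decisions are invented illustration data, not results from any real system. The 0.8 cutoff follows the “four-fifths rule,” a rule of thumb from U.S. employment-selection guidance; a real audit would typically combine several metrics rather than rely on this one alone.

```python
from collections import defaultdict


def selection_rates(groups, decisions):
    """Return the fraction of positive decisions (e.g. 'advance to interview') per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        positives[group] += int(decision)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical audit data: one entry per applicant (1 = advanced to interview).
groups = ["A"] * 10 + ["B"] * 10
decisions = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0] + [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]

rates = selection_rates(groups, decisions)
ratio = disparate_impact_ratio(rates)
print(rates)                   # {'A': 0.6, 'B': 0.3}
print(f"ratio = {ratio:.2f}")  # ratio = 0.50
if ratio < 0.8:  # four-fifths rule of thumb, not a legal determination
    print("Flag for review: possible adverse impact")
```

A low ratio does not by itself prove discrimination; it is a signal that the system and its training data deserve closer human review.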

The pursuit of fairness in AI systems isn’t just about technical solutions; it requires a deep understanding of social context and careful consideration of how different demographic groups might be affected by AI decisions. Companies must prioritize ethical considerations during the entire AI development lifecycle, from conception to deployment, ensuring their systems promote equality rather than reinforce discrimination.

Privacy and Data Rights

As AI continues reshaping our digital world, privacy and data rights have emerged as critical concerns. AI systems require vast amounts of personal data to function effectively, raising questions about data collection, storage, and usage practices.

One of the primary challenges is informed consent. Many users unknowingly share personal information through various digital platforms, which AI systems then process and analyze. This data can include everything from browsing habits and location data to health information and financial records. The question becomes: how can we ensure meaningful consent when many users don’t fully understand how their data will be used?

Data security presents another significant challenge. As AI systems become more sophisticated, they create detailed profiles of individuals by combining data from multiple sources. This raises concerns about potential data breaches, identity theft, and unauthorized access to sensitive information. The impact of such breaches can be far-reaching, affecting individuals’ financial security, personal relationships, and professional lives.

The right to be forgotten has also become increasingly important. Users should have the ability to request the deletion of their personal data from AI systems, but this becomes complicated when the data has already been used to train AI models. How can we ensure genuine data deletion when the information has already influenced the AI’s learning patterns?
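
A toy illustration of why deletion is harder than it sounds: once a model has been trained, its learned parameters no longer point back to individual records, so deleting a row from storage leaves the model unchanged. The simplest guaranteed remedy, sometimes called exact unlearning, is to retrain without the record. The “model” below is deliberately trivial (the average of its training values), and the class name, users, and numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MeanModel:
    """Hypothetical toy 'model' whose only learned parameter is the mean of its training data."""
    mean: float = 0.0

    def fit(self, values):
        self.mean = sum(values) / len(values)
        return self


# Hypothetical per-user values (e.g. minutes of daily app use).
training_data = {"alice": 30.0, "bob": 50.0, "carol": 100.0}

model = MeanModel().fit(list(training_data.values()))
print(model.mean)  # 60.0

# Honouring a deletion request in storage alone...
del training_data["carol"]
print(model.mean)  # still 60.0: carol's data persists in the learned parameter

# ...is not enough; exact unlearning means retraining without the record.
model = MeanModel().fit(list(training_data.values()))
print(model.mean)  # 40.0
```

For large models, retraining from scratch after every request is expensive, which is why techniques that approximate it (“machine unlearning”) are an active research area.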

These privacy concerns are further complicated by varying international regulations and standards. While some regions have implemented strict data protection laws like GDPR, others lag behind, creating inconsistencies in how personal data is handled across borders. This presents challenges for both users seeking to protect their privacy and organizations developing AI systems.

Transparency and Accountability

One of the most pressing challenges in modern AI development is the “black box” problem – where AI systems make decisions through processes that are often too complex for humans to understand. This lack of transparency raises serious ethical concerns, particularly when these systems are used in critical decision-making scenarios like healthcare diagnostics, criminal justice, or financial lending.

Imagine a scenario where an AI system denies someone a loan, but neither the bank nor the developers can explain exactly why. This is not far-fetched: the more complex a credit model becomes, the harder it is to state the reasons behind an individual decision, even though lenders in some jurisdictions are legally required to give applicants those reasons. Without clear explanations for AI decisions, it becomes impossible to ensure fairness, detect bias, or correct errors in the system’s reasoning.

The push for explainable AI (XAI) has emerged as a response to this challenge. XAI aims to create systems that can provide clear, understandable explanations for their decisions while maintaining high performance levels. This involves developing techniques that allow AI systems to show their “work” – similar to how a student might show their mathematical calculations rather than just providing the final answer.

Several approaches are being developed to address this transparency issue. Some involve creating simpler models that are inherently more interpretable, while others focus on developing tools that can analyze and explain the decisions of complex systems after the fact. Organizations are also implementing accountability frameworks that require regular audits of AI systems and mandate clear documentation of decision-making processes.
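
As a minimal sketch of the first approach, an inherently interpretable model, consider a linear scoring rule. Because the score is a plain sum, each decision decomposes exactly into per-feature contributions, so a denial comes with its reasons attached. The feature names, weights, bias, and threshold below are hypothetical and are not drawn from any real lending model.

```python
# Hypothetical weights for a transparent linear credit score (illustration only).
WEIGHTS = {
    "income_thousands": 0.04,
    "years_employed": 0.3,
    "missed_payments": -1.5,
    "debt_to_income": -4.0,
}
BIAS = 1.0
THRESHOLD = 0.0  # hypothetical rule: approve if score >= THRESHOLD


def explain(applicant):
    """Return (approved, score, contributions sorted from most negative to most positive)."""
    contributions = {f: WEIGHTS[f] * applicant[f] for f in WEIGHTS}
    score = BIAS + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: item[1])
    return score >= THRESHOLD, score, ranked


applicant = {"income_thousands": 45, "years_employed": 2,
             "missed_payments": 2, "debt_to_income": 0.4}
approved, score, ranked = explain(applicant)
print("approved" if approved else "denied", round(score, 2))
for feature, value in ranked:
    print(f"{feature:>18}: {value:+.2f}")
```

Here the most negative contributions (missed payments, then debt-to-income) can be reported directly as the reasons for the denial. Complex models offer no such exact decomposition, which is why post-hoc explanation tools for them can only approximate the model’s reasoning.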

However, achieving true transparency while maintaining system performance remains a delicate balance. Too much simplification might reduce an AI system’s effectiveness, while too much complexity makes it impossible for humans to meaningfully oversee the technology. Finding this balance is essential for building trust in AI systems and ensuring they serve society’s best interests.

Real-World Impact and Case Studies

Healthcare AI Dilemmas

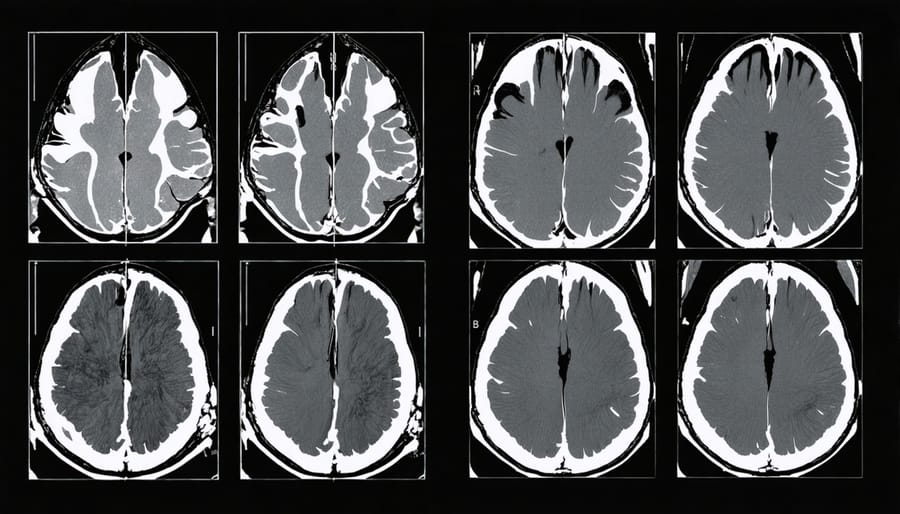

Healthcare AI presents some of the most complex ethical challenges in modern medicine, as these systems increasingly influence life-critical decisions. The integration of AI in medical diagnosis, treatment planning, and patient care brings both promising opportunities and serious ethical concerns that healthcare providers must carefully navigate.

One primary concern is the accuracy and reliability of AI diagnostic systems. While many AI tools show impressive accuracy rates in detecting conditions like cancer or heart disease, false positives or negatives can have devastating consequences. This raises questions about who bears responsibility when AI-assisted diagnoses lead to incorrect treatment decisions – the healthcare provider, the AI developer, or the institution implementing the system?

Patient privacy and data protection present another crucial challenge. AI systems require vast amounts of medical data to function effectively, but this creates significant privacy risks. Healthcare providers must balance the potential benefits of AI-driven insights against the need to protect sensitive patient information, especially as data sharing becomes more common across healthcare networks.

The issue of bias in healthcare AI is particularly concerning. Historical medical data often reflects existing societal inequalities and biases in healthcare access and treatment. When AI systems learn from this data, they risk perpetuating or even amplifying these biases, potentially leading to discriminatory care recommendations for certain demographic groups.

Transparency and explainability also pose significant challenges. Healthcare providers need to understand how AI systems arrive at their recommendations to make informed decisions, but many advanced AI algorithms operate as “black boxes,” making it difficult to trace their decision-making processes. This lack of transparency can affect patient trust and complicate informed consent procedures.

The human element in healthcare raises additional ethical questions. While AI can process vast amounts of medical data quickly, it may miss subtle patient cues that human healthcare providers pick up through experience and intuition. Finding the right balance between AI assistance and human judgment remains a critical challenge in maintaining quality patient care.

AI in Criminal Justice

The integration of AI in criminal justice systems has sparked intense debate about fairness, accountability, and human rights. Law enforcement agencies increasingly rely on AI-powered tools for predictive policing, facial recognition, and risk assessment, raising significant ethical concerns about bias and privacy.

Predictive policing algorithms analyze historical crime data to forecast potential criminal activities in specific areas. However, these systems often perpetuate existing racial and socioeconomic biases present in historical data. When police departments disproportionately patrol predicted high-crime areas, it can create a self-fulfilling prophecy that reinforces discriminatory practices.
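
The self-fulfilling prophecy can be seen in a deliberately simplified simulation. Assume two districts with identical true incident rates and a small initial gap in recorded incidents. Each day the patrol goes to whichever district has more recorded incidents, and incidents are recorded only where police are present. The function name, district names, and numbers are hypothetical, and the all-or-nothing patrol rule is a simplifying assumption.

```python
def simulate(days, true_rate, initial_records):
    """Send the daily patrol to the district with the most recorded incidents.

    Both districts share the same true incident rate, but incidents are only
    recorded in the district that was patrolled that day.
    """
    records = dict(initial_records)
    for _ in range(days):
        target = max(records, key=records.get)  # the "predicted hot spot"
        records[target] += true_rate            # only observed incidents get recorded
    return records


# Hypothetical data: equal true rates, a small initial gap in historical records.
result = simulate(days=30, true_rate=10, initial_records={"North": 12, "South": 10})
print(result)  # {'North': 312, 'South': 10}
share = result["North"] / sum(result.values())
print(f"North's share of recorded incidents: {share:.0%}")  # 97%
```

Although both districts experience the same amount of crime, the data soon “confirm” that North is the hot spot, because they only measure where police looked. Real deployments are more complex than this rule, but the same mechanism can entrench an initial skew in historical data.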

Facial recognition technology in law enforcement has faced particularly sharp criticism. While it promises to help identify suspects quickly, studies have shown higher error rates for people of color and women, and misidentifications have led to wrongful arrests and violations of civil liberties. Several cities, including San Francisco in 2019, have banned its use by law enforcement, highlighting growing awareness of these ethical challenges.

Perhaps most controversial is the use of AI in sentencing decisions. Risk assessment tools such as COMPAS, which estimate a defendant’s likelihood of reoffending, are used in some U.S. courts to inform bail and sentencing decisions. Critics argue these systems lack transparency and may discriminate against certain demographics based on factors beyond their control, such as zip code or family history.

The implementation of AI in criminal justice also raises questions about human oversight and accountability. When an AI system makes a mistake or exhibits bias, determining responsibility becomes complex. Should it fall on the developers, the agencies implementing the technology, or the officials making final decisions?

To address these concerns, experts advocate for:

– Regular audits of AI systems for bias

– Transparent documentation of algorithmic decision-making processes

– Meaningful human oversight in critical decisions

– Community involvement in implementing AI tools

– Clear accountability frameworks

As AI continues to reshape criminal justice, finding the right balance between technological advancement and ethical considerations remains crucial for maintaining public trust and ensuring fair treatment under the law.

As we reflect on the complex landscape of AI ethics, it’s clear that we stand at a crucial intersection of technological advancement and moral responsibility. The challenges we’ve explored, from algorithmic bias and privacy concerns to transparency issues and high-stakes uses in healthcare and criminal justice, represent just the beginning of our ethical journey with artificial intelligence.

The rapid pace of AI development continues to present new ethical dilemmas that require thoughtful consideration and proactive solutions. As AI systems become more sophisticated and integrated into our daily lives, we must remain vigilant in addressing both current and emerging ethical concerns. The balance between innovation and responsible development will become increasingly critical in the years ahead.

Looking toward the future, several key areas demand our attention. First, the need for inclusive AI development that considers diverse perspectives and experiences will only grow in importance. Second, the establishment of robust governance frameworks that can adapt to evolving technology while protecting human rights and dignity remains crucial. Third, the ongoing challenge of maintaining human agency and oversight in increasingly autonomous systems will require careful navigation.

The global nature of AI technology means that ethical considerations must transcend national boundaries and cultural differences. International cooperation and dialogue will be essential in developing universal ethical standards while respecting local values and contexts. This includes addressing the digital divide and ensuring that AI benefits are distributed equitably across society.

We must also acknowledge that ethical AI development is not just a technical challenge but a deeply human one. It requires ongoing collaboration between technologists, ethicists, policymakers, and the public. Education and awareness about AI ethics should extend beyond technical experts to include all stakeholders affected by these technologies.

As we move forward, flexibility and adaptability in our ethical frameworks will be crucial. What seems ethically sound today may need revision as AI capabilities expand and new challenges emerge. Regular reassessment of our ethical guidelines and their practical implementation will help ensure they remain relevant and effective.

The future of AI ethics will likely bring unexpected challenges, but our current understanding and frameworks provide a strong foundation for addressing them. By maintaining open dialogue, promoting transparency, and prioritizing human values, we can work toward an AI-enabled future that benefits all of humanity while minimizing potential harms.