In 2016, Cambridge Analytica used data harvested from up to 87 million Facebook users to target voters, and the firm sold similar services to campaigns in other democracies. By 2024, AI-generated deepfakes of political candidates were drawing millions of views within hours, blurring the line between truth and manipulation. These aren’t dystopian predictions; they’re documented events that expose how artificial intelligence is reshaping the foundations of democratic participation.

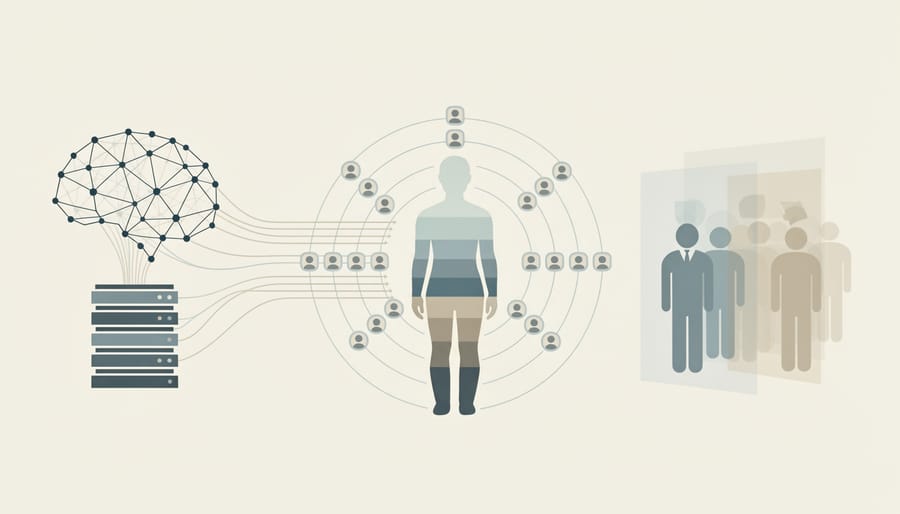

The intersection of AI and democracy presents a paradox. The same technologies that can increase voter accessibility and streamline election administration can also enable unprecedented manipulation, surveillance, and disinformation. Algorithmic systems now determine which political messages reach which voters, often amplifying divisive content because controversy drives engagement. Facial recognition technologies deployed at polling stations raise urgent questions about voter privacy and intimidation. Meanwhile, automated bots flood social media with coordinated messaging designed to polarize communities and suppress voter turnout.

For concerned citizens, students, and professionals navigating this landscape, understanding these ethical challenges isn’t optional; it’s essential for protecting democratic institutions. The stakes extend beyond theoretical debates. When AI systems lack transparency, accountability becomes impossible. When recommendation algorithms prioritize profit over public good, they can turn social platforms into vehicles for radicalization. When deepfake technology becomes accessible to anyone with a laptop, distinguishing authentic political discourse from fabricated propaganda becomes far harder.

This article examines the specific ethical dilemmas AI creates for democratic processes, from microtargeted disinformation campaigns to algorithmic bias in voter registration systems. More importantly, it provides practical frameworks for recognizing these threats and actionable solutions that technologists, policymakers, and everyday citizens can implement to safeguard electoral integrity in an AI-saturated world.

How AI Is Already Shaping Our Elections

Micro-Targeting and Voter Manipulation

AI-powered micro-targeting has transformed political campaigns by analyzing vast amounts of voter data to deliver personalized messages that resonate with individual beliefs and fears. Think of it as hyper-specific advertising, but for your vote.

Here’s how it works: Campaign teams collect data from social media activity, browsing history, purchase records, and public voter databases. AI algorithms then process this information to create detailed psychological profiles, predicting not just your political leanings, but which specific issues or emotional triggers will influence your decision.
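To make the scoring step concrete, here is a deliberately simplified Python sketch: each message variant gets a logistic score computed from a voter’s inferred profile, and the campaign sends whichever variant scores highest. The feature names, the hand-set weights, and the choice of a logistic model are all hypothetical assumptions for illustration; real campaign models are trained on far larger datasets and are not publicly documented.

```python
import math

# Hypothetical message variants with hand-set weights on profile features.
# In a real system these weights would be learned from data, not typed in.
MESSAGE_WEIGHTS = {
    "economy": {"bias": -0.5, "worried_about_jobs": 2.0, "homeowner": 0.5},
    "security": {"bias": -0.5, "follows_crime_news": 1.8, "veteran": 1.2},
    "healthcare": {"bias": -0.5, "has_chronic_condition": 2.2, "age_over_60": 0.8},
}


def response_probability(profile: dict[str, float], weights: dict[str, float]) -> float:
    """Logistic score: estimated probability that a voter responds to a message."""
    z = weights["bias"] + sum(
        weight * profile.get(feature, 0.0)
        for feature, weight in weights.items()
        if feature != "bias"
    )
    return 1.0 / (1.0 + math.exp(-z))


def pick_message(profile: dict[str, float]) -> tuple[str, float]:
    """Return the message variant with the highest predicted response."""
    scores = {
        name: response_probability(profile, weights)
        for name, weights in MESSAGE_WEIGHTS.items()
    }
    best = max(scores, key=scores.get)
    return best, scores[best]


# Two neighbors with different inferred profiles get different ads
# for the same candidate.
voter_a = {"worried_about_jobs": 1.0, "homeowner": 1.0}
voter_b = {"follows_crime_news": 1.0, "veteran": 1.0}
print(pick_message(voter_a))
print(pick_message(voter_b))
```

Even this toy version shows why the targeting is invisible to voters: the choice of message depends entirely on what the profile says about each person, and neither voter ever sees the alternatives.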

During the 2016 U.S. presidential election, Cambridge Analytica used data from millions of Facebook users to craft personalized political advertisements. The system identified voters who were persuadable on specific issues—like gun rights or immigration—and delivered carefully tailored messages to each person. One voter might see content emphasizing economic concerns, while their neighbor received messages focused on national security, even though both ads supported the same candidate.

The 2020 elections saw even more sophisticated approaches. Campaigns reportedly used AI to determine the optimal time to send messages, which messenger would be most trusted, and even which facial expressions in candidate photos would generate the strongest response from specific demographic groups.

The same profiling techniques extend beyond elections into everyday governance, overlapping with AI surveillance practices that monitor citizen behavior. And as with bias in other AI systems, these models can reinforce existing prejudices while shaping democratic participation through invisible, personalized influence campaigns.

Deepfakes and Synthetic Media

Imagine watching a video of a presidential candidate confessing to a crime they never committed, or hearing an audio clip of a world leader declaring war—except none of it actually happened. This is the reality of deepfakes, AI-generated synthetic media that can convincingly fabricate videos, images, and audio recordings of real people.

In January 2019, doubts over whether a video of Gabonese President Ali Bongo, who had been out of public view after a stroke, was a deepfake helped fuel a failed military coup. The video was never shown to be fake, but the suspicion alone was enough to destabilize the country. More recently, a deepfake audio recording that appeared to show the leader of Slovakia’s liberal Progressive Slovakia party discussing election rigging went viral just days before the country’s 2023 parliamentary elections, potentially swaying voters with fabricated evidence.

These technologies often rely on machine learning models such as generative adversarial networks (GANs), in which one network generates fakes while a second learns to tell them apart from real samples. Trained on thousands of images and audio samples of a target person, such models generate new content that mimics that person’s appearance, voice, and mannerisms with startling accuracy. What once required Hollywood-level resources can now be created with free software and a decent laptop.

The implications for democracy are profound. Voters may struggle to distinguish authentic content from manufactured lies, especially when deepfakes circulate on social media where fact-checking lags behind viral spread. A well-timed deepfake released just before an election can cause irreversible damage before verification is possible.

This erosion of trust extends beyond individual incidents. When anyone can plausibly deny authentic footage as “just another deepfake,” legitimate evidence of wrongdoing loses its power—a phenomenon researchers call the “liar’s dividend.”

The Ethical Dilemmas We Can’t Ignore

Transparency vs. Effectiveness

AI systems face a fundamental trade-off in political applications: the more transparent we make them, the less effective they might become. This creates a genuine dilemma for democracy.

Consider election prediction models. Simple, explainable algorithms allow citizens to understand exactly how forecasts are generated, building public trust. However, these transparent systems often can’t match the accuracy of complex neural networks that process thousands of variables in ways even their creators struggle to explain. When a sophisticated AI predicts election interference or identifies disinformation campaigns, demanding full transparency might reveal vulnerabilities that bad actors could exploit.

This tension becomes critical when AI systems influence voter information or campaign strategies. A recommendation algorithm that determines which political content people see should ideally be understandable to the public. Yet oversimplifying these systems might reduce their ability to detect nuanced manipulation tactics or provide personalized civic information effectively.

The path forward requires balance rather than absolutes. Election officials and tech companies can provide “tiered transparency,” offering general explanations for public consumption while giving independent auditors access to deeper technical details. The European Union’s Digital Services Act moves in this direction: it requires online platforms to explain the main parameters of their recommender systems in plain language, and it subjects the largest platforms to independent audits, without requiring them to publish every detail that bad actors could exploit.

Privacy in the Age of Voter Profiling

Modern political campaigns have become data goldmines, collecting everything from your browsing history to your social media likes to predict how you’ll vote. While this targeted approach makes campaigns more efficient, it raises serious questions about where to draw the line between persuasion and invasion of privacy.

Think about it: when a campaign knows your income, healthcare concerns, religious views, and which websites you visit, it can craft messages that push your exact emotional buttons. Reporting on the 2016 U.S. presidential race described campaigns testing thousands of ad variations, each tuned to a different audience segment. This goes far beyond traditional campaign strategies.

The core ethical dilemma centers on consent and transparency. Most voters don’t realize how much data they’re sharing or how it’s being used to influence their political choices. Data brokers collect information from countless sources—loyalty cards, public records, online activity—creating detailed profiles without explicit permission. This practice blurs the boundaries between legitimate persuasion and manipulation.

Protecting voter privacy and security requires stronger regulations around data collection, clear opt-in requirements, and transparency about how campaigns use personal information. Voters deserve to know when they’re being targeted and based on what data, empowering them to make truly informed decisions rather than being unknowingly nudged toward predetermined outcomes.

Fairness and Algorithmic Bias

AI systems learn from historical data, and when that data reflects societal prejudices, the technology can perpetuate discrimination at scale. In electoral contexts, algorithmic bias poses serious risks to fair representation and equal participation.

Consider voter targeting systems that use AI to identify likely voters. If trained on data showing lower turnout in minority neighborhoods, these systems might deprioritize outreach to those communities, creating a self-fulfilling prophecy: less outreach leads to lower turnout, which the next round of modeling reads as confirmation. Similarly, automated tools that match voter-registration records across databases have flagged names common within particular ethnic groups at disproportionate rates, effectively creating modern barriers to participation.
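The self-fulfilling prophecy can be illustrated with a toy simulation. The sketch below assumes two hypothetical neighborhoods with identical underlying interest in voting but different historical turnout, a naive "model" that predicts each area’s turnout from the last election, and a policy that only contacts areas predicted to be likely voters. The baseline, the turnout lift from outreach, and the threshold are invented numbers chosen for illustration, not estimates from any real campaign.

```python
BASELINE = 0.45   # hypothetical: identical underlying propensity in both areas
LIFT = 0.15       # hypothetical: turnout gain when an area is contacted
THRESHOLD = 0.5   # hypothetical: only areas predicted at or above this are targeted


def simulate(history: dict[str, float], cycles: int, contact_all: bool = False) -> dict[str, float]:
    """Run several election cycles of predict -> target -> observe turnout."""
    turnout = dict(history)  # copy so the caller's data is not modified
    for _ in range(cycles):
        # Naive model: predicted turnout is simply last cycle's observed turnout.
        predicted = dict(turnout)
        for area, prediction in predicted.items():
            contacted = contact_all or prediction >= THRESHOLD
            turnout[area] = BASELINE + (LIFT if contacted else 0.0)
    return turnout


# Neighborhood B starts lower because of past barriers, not lower interest.
history = {"A": 0.60, "B": 0.40}
print(simulate(history, cycles=5))                    # B is never contacted
print(simulate(history, cycles=5, contact_all=True))  # the gap disappears
```

Under the targeting policy, neighborhood B stays below the threshold in every cycle, so it never receives outreach and its lower turnout keeps "confirming" the prediction; contacting everyone shows that the gap came from the targeting rule rather than from the voters.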

Facial recognition systems have shown significantly higher error rates for people with darker skin tones in independent evaluations, so deploying them at polling stations could lead to wrongful challenges or delays for those voters. Meanwhile, content moderation algorithms on social platforms may disproportionately flag or remove posts from marginalized communities discussing their voting experiences, silencing important voices in democratic discourse.

The challenge intensifies because these biases often operate invisibly within complex systems, making them difficult to detect and correct without deliberate auditing and diverse development teams committed to fairness testing.

The Erosion of Informed Consent

When you scroll through your social media feed or search for political information online, you’re making decisions based on what you see. But here’s the uncomfortable question: do you actually know how AI shaped that information before it reached you?

Think of informed consent in healthcare—doctors must explain risks, benefits, and alternatives before you agree to treatment. Now imagine if those decisions happened invisibly, without your knowledge. That’s essentially what’s occurring in our digital democracy.

Most voters don’t realize that AI algorithms curate their news feeds, determine which political ads they see, or even predict their voting likelihood. A recent study found that fewer than 30% of social media users understand that machine learning systems personalize their content. Even fewer know that campaigns use AI to micro-target messages designed to trigger specific emotional responses.

The issue goes deeper than simple awareness. Even when platforms disclose AI use in their terms of service, these explanations are buried in lengthy legal documents written in technical language. It’s like getting consent for surgery by handing someone a 50-page medical textbook.

Without genuine understanding of how AI influences their information environment, voters can’t meaningfully consent to this digital manipulation—or make truly informed choices about their democratic participation.

Who Guards the Guardians? The Accountability Problem

The Tech Companies’ Role

Tech companies building AI systems and social media platforms occupy a unique position of responsibility in protecting democratic processes. These organizations control the algorithms that determine what billions of users see, making them inadvertent gatekeepers of political information.

Consider Facebook’s 2016 experience with election interference. The platform became a conduit for misinformation campaigns, revealing how unprepared tech companies were for such abuse. This wake-up call prompted significant changes across the industry.

Today, responsible tech companies are implementing several protective measures. They’re developing AI systems specifically designed to detect manipulated media, including deepfakes that could mislead voters about candidate statements or actions. Transparency initiatives now require political ads to be clearly labeled, with information about who paid for them and who they’re targeting.

Leading platforms have also established partnerships with independent fact-checkers and election integrity organizations. When potentially false claims gain traction, these systems flag content and provide users with verified information from credible sources.

However, challenges remain. The speed at which AI technology evolves often outpaces safety measures. Companies must balance free speech concerns with the need to combat misinformation, a delicate line that requires ongoing refinement.

For these efforts to succeed, tech companies need adequate resources dedicated to trust and safety teams, particularly during election periods when threats intensify. The commitment must extend beyond mere compliance to genuine ethical responsibility.

Government Oversight Challenges

Traditional regulatory frameworks face significant hurdles when attempting to oversee AI in elections. The primary challenge? Speed. While technology companies deploy new AI capabilities in months or even weeks, government bodies often require years to research, draft, and implement legislation. By the time a regulation passes, the technology has already evolved beyond what the law addresses.

Consider deepfake technology. When lawmakers first recognized the threat of AI-generated fake videos around 2018, the technology was relatively crude and easy to detect. Texas and California passed the first U.S. state laws aimed at election deepfakes in 2019, but a much larger wave of states acted only in 2023 and 2024. By then the technology had advanced dramatically, making detection increasingly difficult and rendering some legislative provisions ineffective.

Another obstacle is the technical expertise gap. Most regulatory bodies lack staff with deep AI knowledge, making it challenging to craft informed, effective policies. How can lawmakers regulate facial recognition in voter identification systems if they don’t understand how the algorithms work or what biases they might contain?

Jurisdictional issues further complicate oversight. AI systems operate across borders, but election regulations remain firmly national or local. A social media platform using AI recommendation algorithms affects voters in dozens of countries simultaneously, yet each nation must craft its own response. This fragmentation allows companies to exploit regulatory arbitrage, housing AI operations in locations with minimal oversight while their influence extends globally.

Practical Solutions Already Making a Difference

AI Detection Tools and Fact-Checking Systems

As AI-generated content becomes increasingly sophisticated, several detection tools have emerged to help identify synthetic text, images, and videos. GPTZero is among the most popular text detectors, analyzing writing patterns that suggest machine authorship; OpenAI released its own AI Text Classifier in early 2023 but withdrew it within months, citing its low accuracy. For images and deepfakes, services like Sensity AI and Microsoft’s Video Authenticator examine digital fingerprints and pixel-level anomalies that human eyes often miss.

Fact-checking systems have also evolved to combat AI-fueled misinformation. Organizations and tools like Full Fact and ClaimBuster use automation to spot check-worthy claims and cross-reference them against databases of previously fact-checked statements in real time. Social media platforms now employ combinations of human reviewers and AI algorithms to flag suspicious content during election periods.
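As a rough illustration of the cross-referencing step, the sketch below matches an incoming claim against a small set of previously fact-checked claims using bag-of-words cosine similarity. This is an assumption made for illustration only: it is not how Full Fact or ClaimBuster are implemented, the fact-check entries and the 0.3 threshold are invented, and production systems typically use learned sentence embeddings rather than raw word counts.

```python
import math
import re
from collections import Counter

# Hypothetical database of previously fact-checked claims and their verdicts.
FACT_CHECKS = [
    ("Polling stations will be closed on election day due to flooding", "False"),
    ("Voters can submit their ballot by text message", "False"),
    ("Postal ballots must arrive by the close of polls", "True"),
]

STOPWORDS = {"the", "a", "an", "by", "on", "of", "to", "can", "will", "be", "their", "due", "must"}


def tokens(text: str) -> Counter:
    """Lowercase word counts with common stopwords removed."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(w for w in words if w not in STOPWORDS)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def match_claim(claim: str, threshold: float = 0.3):
    """Return (checked claim, verdict, score) for the closest match, or None."""
    query = tokens(claim)
    best_score, best = 0.0, None
    for checked, verdict in FACT_CHECKS:
        score = cosine(query, tokens(checked))
        if score > best_score:
            best_score, best = score, (checked, verdict, round(score, 2))
    return best if best_score >= threshold else None


print(match_claim("You can vote by text message this year"))
print(match_claim("The mayor opened a new library"))
```

The threshold is the key design choice: set it too low and unrelated posts get attached to old fact-checks (false positives); set it too high and reworded versions of a debunked claim slip through, which is one reason these systems still rely on human reviewers.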

However, these tools face significant limitations. Detection accuracy varies widely, often producing false positives that flag legitimate human writing or missing sophisticated AI content entirely. The technology resembles an arms race—as detection methods improve, so do generation techniques. For concerned citizens and professionals, the key takeaway is clear: while these tools provide valuable assistance, they should complement, not replace, critical thinking and media literacy skills when evaluating information online.

Transparency Requirements and Disclosure Laws

Governments worldwide are introducing laws requiring campaigns and platforms to label AI-generated political content, creating a new standard for digital transparency. These regulations aim to help voters distinguish between human-created messages and automated content, addressing concerns about manipulation and authenticity in democratic processes.

The European Union leads with comprehensive legislation through its AI Act, which requires anyone deploying a deepfake to disclose that the content has been artificially generated or manipulated, an obligation that extends to political material. Once these transparency rules apply, a campaign that publishes AI-generated videos or images must include disclosures that average citizens can easily recognize. This approach treats transparency as a legal requirement rather than an optional courtesy.

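In the United States, several states have implemented their own disclosure requirements. California’s AB 2355, enacted in 2024, requires political advertisements that use AI-generated or substantially altered content to carry a disclosure; a companion law, AB 2839, which restricted deceptive election deepfakes more broadly, was blocked by a federal court shortly after it passed. Washington state has required since 2023 that electioneering communications containing synthetic media include a disclosure, and it allows candidates depicted in undisclosed synthetic media to seek a court order against its publication.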

Other proposals take a different path, focusing on platform accountability. Under this model, social media companies, rather than individual campaigns, would be required to flag automated political content and tell users about the AI systems behind it. Shifting responsibility to the tech giants hosting the content could ensure broader coverage and more consistent enforcement across the digital landscape.

Ethical AI Development Frameworks

Organizations worldwide are developing practical guidelines to keep AI development accountable and aligned with democratic values. The European Union’s AI Act, for instance, classifies AI systems by risk level and imposes strict requirements on high-risk applications, a category that includes systems intended to influence the outcome of elections or how people vote. This creates clear rules that developers must follow before deploying AI in sensitive democratic contexts.

Major tech companies have also adopted ethical AI frameworks that prioritize transparency, fairness, and human oversight. Microsoft’s Responsible AI Standard, for example, requires teams to assess their AI systems’ potential impacts and harms and to put safeguards in place before deployment. Google’s AI Principles, as first published in 2018, listed applications the company would not pursue, including technologies whose purpose contravenes widely accepted principles of international law and human rights; a 2025 revision removed that list.

On the ground, these frameworks are starting to translate into concrete practices. Some election technology providers commission third-party audits of their algorithms to check for bias. Social media platforms have established content authenticity programs, some built on the C2PA content-provenance standard, that label AI-generated political content and help voters distinguish between human-created and synthetic media.

The IEEE has published IEEE 7003, a standard for algorithmic bias considerations, which gives developers a structured process for identifying and mitigating bias in their systems. Standards like this create accountability mechanisms that help ensure AI serves democracy rather than undermining it, transforming abstract ethical principles into actionable technical requirements.

What You Can Do to Protect Democratic Integrity

Spotting AI-Generated Content

Detecting AI-generated content doesn’t require advanced technical skills. Start by examining facial movements in videos closely. Many deepfakes, especially older or lower-quality ones, struggle with natural blinking patterns, lip synchronization, and consistent lighting across the face. Pay attention to the edges where hair meets the background, as these areas frequently show blurring or distortion.

For images, look for oddities in reflections, shadows, and symmetry. AI-generated faces sometimes display unnatural teeth patterns or mismatched earrings. Hands have historically been hard for AI systems to render convincingly, so check for extra fingers or awkward positioning, although newer models make fewer of these mistakes.

Several tools can help verify content authenticity. Detection services such as Sensity analyze videos for signs of manipulation; Microsoft’s Video Authenticator does the same but was released to selected organizations rather than the general public. For images, free tools like FotoForensics use error level analysis to reveal inconsistencies in compression levels that suggest editing.

When evaluating text content, watch for repetitive phrasing, unusually perfect grammar, or generic statements lacking specific details. AI-generated writing often sounds plausible but lacks the nuanced understanding humans bring to complex topics.

Remember that no single red flag confirms fakery. Cross-reference suspicious content with trusted news sources, check when and where it first appeared online, and consider the context. If something seems designed to provoke strong emotions, take extra time to verify its authenticity before sharing.

Supporting Ethical AI Policies

You don’t need to be a policy expert to make a difference in shaping how AI is used in elections. Start by staying informed about AI-related legislation in your area. Many regions are developing frameworks to regulate political deepfakes, algorithmic transparency, and data privacy. Follow these developments and contact your representatives to voice your concerns about AI misuse in democratic processes.

Support organizations working on digital rights and electoral integrity. Groups focused on technology ethics often provide resources to help citizens understand AI’s impact and offer opportunities to participate in advocacy campaigns. Even sharing verified information about AI manipulation tactics with your community helps build collective awareness.

Consider participating in public consultations when governments or tech companies seek feedback on AI policies. Your perspective as a voter matters in these discussions. Universities and civic organizations frequently host forums on AI ethics where you can learn from experts and contribute your voice.

Think of advocacy as a marathon, not a sprint. Small actions compound over time. Whether it’s signing petitions for transparent AI use, attending town halls, or simply having informed conversations with friends and family, each step strengthens democratic safeguards. By engaging actively, you help ensure AI serves democracy rather than undermines it.

As we navigate the complex intersection of artificial intelligence and democratic governance, one truth becomes clear: there are no simple answers, only ongoing commitments. The ethical challenges we’ve explored—from algorithmic bias in voter targeting to deepfakes threatening election integrity—demand more than one-time fixes. They require continuous vigilance, adaptive regulations, and collective responsibility from technologists, policymakers, and citizens alike.

The path forward isn’t about choosing between technological progress and democratic values. Instead, it’s about weaving these threads together thoughtfully. We’ve seen how AI literacy programs can empower voters to recognize manipulation, how transparency requirements can hold platforms accountable, and how diverse teams can build fairer algorithms. These solutions share a common denominator: they recognize that democracy thrives on informed participation, not passive consumption.

Looking ahead, the question isn’t whether AI will play a role in our elections—it already does. The real question is whether we’ll shape that role deliberately or allow it to evolve unchecked. This requires each of us to stay informed about emerging technologies, advocate for ethical AI practices, and remain skeptical of information that seems designed to provoke rather than inform.

The future of democratic AI isn’t predetermined. It’s being written right now through the choices we make—in the code we write, the policies we support, and the standards we demand. By embracing both innovation and ethical responsibility, we can ensure that technology strengthens rather than undermines the democratic principles we cherish.